I'm using Boost 1.74.

With exception handling and other details omitted, my actual code looks like this:

    #include <cstdint>
    #include <cstring>
    #include <fstream>
    #include <string>
    #include <vector>

    #include <boost/gil.hpp>
    #include <boost/gil/extension/io/png.hpp>

    namespace bg = boost::gil;

    typedef std::vector<int32_t> FlatINT32TArr;
    using PreviewImageT = bg::rgba8_image_t;
    using PreviewViewT = bg::rgba8_view_t;
    using PreviewPixelT = bg::rgba8_pixel_t;

    // Reads a PNG file, flips it vertically, and copies the RGBA pixels
    // into r_preview_flat_arr (one int32_t per pixel).
    void read_pixel_arr(FlatINT32TArr& r_preview_flat_arr, const std::wstring& filepath)
    {
        std::ifstream byte_stream(filepath, std::ios::binary);

        PreviewImageT image;
        bg::read_and_convert_image(
            byte_stream, image, bg::image_read_settings<bg::png_tag>());

        const int image_width = (int)image.width();
        const int image_height = (int)image.height();
        const int preview_pixel_count = image_width * image_height;

        // Temporary buffer that receives the vertically flipped pixels.
        PreviewPixelT* buff1 = new PreviewPixelT[preview_pixel_count];
        PreviewViewT preview_view = bg::interleaved_view(
            image_width, image_height, buff1,
            image_width * sizeof(PreviewPixelT));

        bg::copy_and_convert_pixels(
            bg::flipped_up_down_view(const_view(image)), preview_view);

        // The output is taken by reference so the caller sees the result.
        r_preview_flat_arr = FlatINT32TArr(preview_pixel_count);
        std::memcpy(
            &r_preview_flat_arr[0],
            &preview_view[0],
            sizeof(PreviewPixelT) * preview_pixel_count
        );

        delete[] buff1;  // release the temporary buffer
    }

It reads the *.png image file and converts it to an int32_t array. (The array is then used to generate the OpenGL texture.)
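To make the intended result concrete, here is a minimal GIL-free sketch of the flip-and-pack step I expect the function to perform. It assumes the decoded pixels are tightly packed RGBA8 rows stored top row first; `flip_rows_to_int32` is a hypothetical helper name used only for illustration, not a GIL function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper (not part of Boost.GIL): takes tightly packed RGBA8
// rows, top row first, and returns one int32_t per pixel with the row
// order reversed, so the bottom row of the image comes first.
std::vector<std::int32_t> flip_rows_to_int32(
    const std::vector<std::uint8_t>& rgba, int width, int height)
{
    const std::size_t w = static_cast<std::size_t>(width);
    const std::size_t h = static_cast<std::size_t>(height);
    const std::size_t row_bytes = w * 4;  // 4 bytes per RGBA8 pixel
    assert(rgba.size() == row_bytes * h);

    std::vector<std::int32_t> out(w * h);
    if (out.empty()) {
        return out;  // nothing to copy for an empty image
    }

    for (std::size_t y = 0; y < h; ++y) {
        // Source row counted from the bottom, destination row from the top.
        const std::uint8_t* src = rgba.data() + (h - 1 - y) * row_bytes;
        std::memcpy(out.data() + y * w, src, row_bytes);
    }
    return out;
}
```

The bytes of each pixel are copied unchanged, so the channel order inside each int32_t is the same R, G, B, A byte order as in the source buffer, regardless of endianness.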

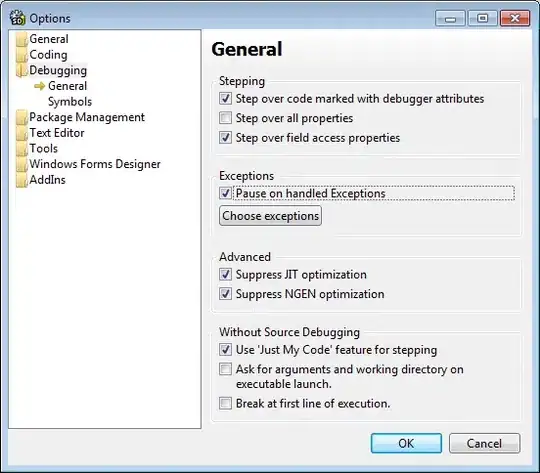

So, the original image file is:

It was exported from Adobe Illustrator with alpha channel, deinterlaced.

I have a few issues I can't solve:

- Subsampling.

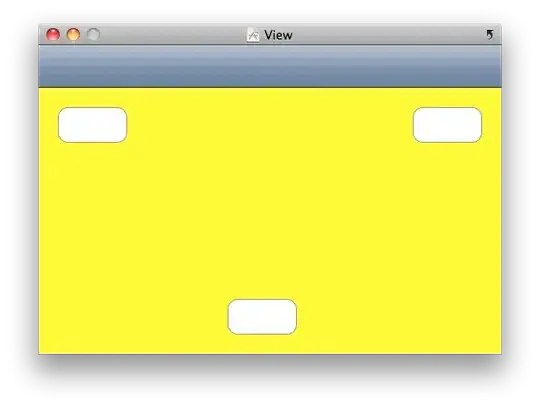

- Here is the result. As you can see, the edges are heavily aliased, like stair steps. Why does this happen, and how can I fix it?

- Interlacing.

- Here is the result. As you can see, an extra line from the bottom of the image appears at the top. I worked around it temporarily by exporting the image without interlacing, but that is not a good solution. How can I solve it using GIL?

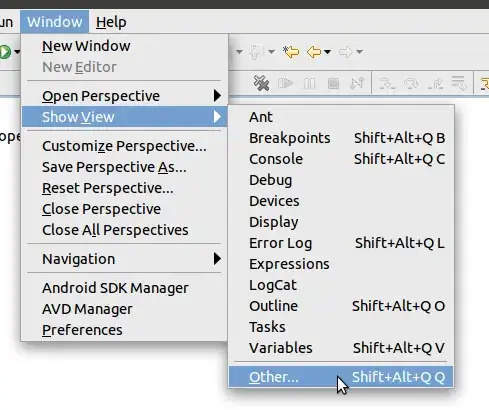

One more test. The original (with an alpha-channel gradient):

Result: