I ran a small benchmark to compare Couchbase (running on Windows) with Redis and MySQL (EDIT: added Aerospike to the test).

We insert 100,000 JSON "documents" into four databases/stores:

- Redis (just insert, there is nothing else)

- Couchbase (in-memory Ephemeral buckets, JSON Index on JobId)

- MySQL (simple table: Id (int), Data (MEDIUMTEXT), index on Id)

- Aerospike (in-memory storage)

The JSON file is 67 lines, about 1800 bytes.

INSERT:

- Couchbase: 60-100 seconds (EDIT: seems to vary quite a bit!)

- MySQL: 30 seconds

- Redis: 8 seconds

- Aerospike: 71 seconds
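To make the insert timings easier to compare, they can be converted into inserts per second and an approximate payload rate. The sketch below is only arithmetic on the numbers reported above; the class and method names are hypothetical, and the 1800 bytes per document is the approximate size stated earlier, not a measured value.

```csharp
using System;

public static class InsertThroughput
{
    // Inserts per second for a given number of operations and elapsed time.
    public static double OpsPerSecond(int operations, double seconds)
        => operations / seconds;

    // Approximate payload rate in MB/s (1 MB = 1,000,000 bytes).
    public static double MegabytesPerSecond(int operations, int bytesPerDocument, double seconds)
        => operations * (double)bytesPerDocument / 1_000_000.0 / seconds;

    public static void PrintSummary()
    {
        const int documents = 100_000;
        const int bytesPerDocument = 1800; // approximate size from the post

        // Elapsed seconds as reported in the INSERT list above.
        var timings = new (string Store, double Seconds)[]
        {
            ("Couchbase (best)", 60),
            ("Couchbase (worst)", 100),
            ("MySQL", 30),
            ("Redis", 8),
            ("Aerospike", 71),
        };

        foreach (var (store, seconds) in timings)
        {
            Console.WriteLine(
                $"{store}: {OpsPerSecond(documents, seconds):F0} inserts/s, " +
                $"{MegabytesPerSecond(documents, bytesPerDocument, seconds):F1} MB/s");
        }
    }
}
```

For instance, 100,000 inserts in 8 seconds is 12,500 inserts per second, or about 22.5 MB/s at 1800 bytes each.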

READ: We read 1000 documents by key, repeat this 10 times, and look at the averages.

- Couchbase: 600-700 ms for 1000 GETs (using key-value operations, not the Query API; with the Query API this takes about 1500 ms)

- MySQL: 90-100 ms for 1000 GETs

- Redis: 50-60 ms for 1000 GETs

- Aerospike: 750 ms for 1000 GETs
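The read procedure (a batch of sequential GETs, repeated several times and averaged) can be sketched as a small store-agnostic harness. This is a minimal sketch, not the code used for the numbers above: `ReadBenchmark`, `MeasureAsync`, and the `operation` delegate are hypothetical names, and the untimed warm-up calls are an assumption added to keep first-call setup costs out of the measurement.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

public static class ReadBenchmark
{
    // Runs `batch` sequential awaited calls of `operation`, `rounds` times,
    // and returns the elapsed milliseconds of each round.
    public static async Task<double[]> MeasureAsync(
        Func<int, Task> operation, int batch, int rounds, int warmup = 10)
    {
        // Warm-up calls are not timed (connection setup, JIT, lazy loading).
        for (int i = 0; i < warmup; i++)
        {
            await operation(i);
        }

        var results = new double[rounds];
        for (int r = 0; r < rounds; r++)
        {
            var sw = Stopwatch.StartNew();
            for (int i = 0; i < batch; i++)
            {
                await operation(i);
            }
            sw.Stop();
            results[r] = sw.Elapsed.TotalMilliseconds;
        }
        return results;
    }

    // Mean time per single operation, in milliseconds, across all rounds.
    public static double MeanPerOperationMs(double[] roundsMs, int batch)
        => roundsMs.Average() / batch;
}
```

With rounds of 600 ms and 700 ms for 1000 GETs, `MeanPerOperationMs` gives 0.65 ms per GET. Because each call is awaited before the next one starts, this figure is the average per-call latency as seen by the client rather than the maximum throughput the store can sustain.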

Conclusion: Couchbase seems to be the slowest (its INSERT times vary a lot), and Aerospike is also very slow. Both of these use in-memory storage (Couchbase => Ephemeral bucket, Aerospike => storage-engine memory).

Question: Why are in-memory writes and reads on Couchbase so slow, even slower than with plain MySQL (on an SSD)?

CODE

Note: Using Task.WhenAll, or awaiting each call, doesn't make a difference.
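For readers unfamiliar with the two patterns mentioned in the note, here they are side by side. This is an illustrative sketch only: `FakeInsertAsync` is a hypothetical stand-in that simply waits 5 ms, so the timings it produces say nothing about Couchbase or any other store.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Threading.Tasks;

public static class AwaitPatterns
{
    // Hypothetical stand-in for a store call such as an insert; it only waits.
    public static Task FakeInsertAsync(int id) => Task.Delay(5);

    // Pattern 1: await each call before issuing the next one.
    public static async Task<long> SequentialAsync(int count)
    {
        var sw = Stopwatch.StartNew();
        for (int i = 0; i < count; i++)
        {
            await FakeInsertAsync(i);
        }
        return sw.ElapsedMilliseconds;
    }

    // Pattern 2: start all calls first, then wait for all of them together.
    public static async Task<long> ConcurrentAsync(int count)
    {
        var sw = Stopwatch.StartNew();
        var tasks = new List<Task>(count);
        for (int i = 0; i < count; i++)
        {
            tasks.Add(FakeInsertAsync(i));
        }
        await Task.WhenAll(tasks);
        return sw.ElapsedMilliseconds;
    }
}
```

With a simulated delay like this, the concurrent version overlaps the waits and finishes sooner than the sequential one. Seeing no difference between the two against a real store, as reported in the note, is therefore worth mentioning when asking where the time goes.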

INSERT

Couchbase:

    IBucket bucket = await cluster.BucketAsync("halo"); // <-- ephemeral
    IScope scope = bucket.Scope("myScope");
    var collection = scope.Collection("myCollection");

    // EDIT: Added this to avoid measuring lazy loading:
    JObject t = JObject.FromObject(_baseJsonObject);
    t["JobId"] = 0;
    t["CustomerName"] = $"{firstnames[rand.Next(0, firstnames.Count - 1)]} {lastnames[rand.Next(0, lastnames.Count - 1)]}";
    await collection.InsertAsync("0", t);
    await collection.RemoveAsync("0");

    List<Task> insertTasks = new List<Task>();
    sw.Start();
    foreach (JObject temp in jsonObjects) // jsonObjects is pre-created so it's not a factor in the test
    {
        insertTasks.Add(collection.InsertAsync(temp.GetValue("JobId").ToString(), temp));
    }
    await Task.WhenAll(insertTasks);
    sw.Stop();
    Console.WriteLine($"Adding {nbr} to Couchbase took {sw.ElapsedMilliseconds} ms");

Redis (using ServiceStack):

    sw.Restart();
    using (var client = redisManager.GetClient())
    {
        foreach (JObject temp in jsonObjects)
        {
            client.Set($"jobId:{temp.GetValue("JobId")}", temp.ToString());
        }
    }
    sw.Stop();
    Console.WriteLine($"Adding {nbr} to Redis took {sw.ElapsedMilliseconds} ms");
    sw.Reset();

MySQL:

    MySql.Data.MySqlClient.MySqlConnection mySqlConnection = new MySql.Data.MySqlClient.MySqlConnection("Server=localhost;Database=test;port=3306;User Id=root;password=root;");
    mySqlConnection.Open();
    sw.Restart();
    foreach (JObject temp in jsonObjects)
    {
        MySql.Data.MySqlClient.MySqlCommand cmd = new MySql.Data.MySqlClient.MySqlCommand($"INSERT INTO test (id, data) VALUES ('{temp.GetValue("JobId")}', @data)", mySqlConnection);
        cmd.Parameters.AddWithValue("@data", temp.ToString());
        cmd.ExecuteNonQuery();
    }
    sw.Stop();
    Console.WriteLine($"Adding {nbr} to MySql took {sw.ElapsedMilliseconds} ms");
    sw.Reset();

READ

Couchbase:

    IBucket bucket = await cluster.BucketAsync("halo");
    IScope scope = bucket.Scope("myScope");
    var collection = scope.Collection("myCollection");

    Stopwatch sw = Stopwatch.StartNew();
    for (int i = 0; i < 1000; i++)
    {
        string key = $"{r.Next(1, 100000)}";
        var result = await collection.GetAsync(key);
    }
    sw.Stop();
    Console.WriteLine($"Couchbase Q: {q}\t{sw.ElapsedMilliseconds}");

Redis:

    Stopwatch sw = Stopwatch.StartNew();
    using (var client = redisManager.GetClient())
    {
        for (int i = 0; i < nbr; i++)
        {
            client.Get<string>($"jobId:{r.Next(1, 100000)}");
        }
    }
    sw.Stop();
    Console.WriteLine($"Redis Q: {q}\t{sw.ElapsedMilliseconds}");

MySQL:

    MySqlConnection mySqlConnection = new MySql.Data.MySqlClient.MySqlConnection("Server=localhost;Database=test;port=3306;User Id=root;password=root;");
    mySqlConnection.Open();

    Stopwatch sw = Stopwatch.StartNew();
    for (int i = 0; i < nbr; i++)
    {
        MySqlCommand cmd = new MySql.Data.MySqlClient.MySqlCommand($"SELECT data FROM test WHERE Id='{r.Next(1, 100000)}'", mySqlConnection);
        using MySqlDataReader rdr = cmd.ExecuteReader();
        while (rdr.Read())
        {
        }
    }
    sw.Stop();
    Console.WriteLine($"MySql Q: {q} \t{sw.ElapsedMilliseconds} ms");
    sw.Reset();

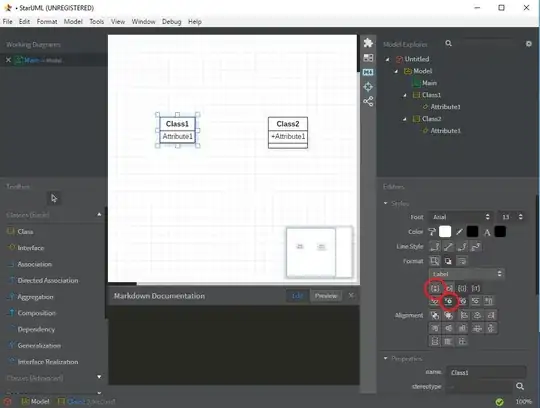

Couchbase setup:

and

and Bucket Durability:

I only have one node (no cluster), running locally on my machine: Ryzen 3900X (12 cores), M.2 SSD, Windows 10, 32 GB RAM.

If you made it this far, here is a GitHub repo with my benchmark code: https://github.com/tedekeroth/CouchbaseTests