A question for the group: suppose we roll out a new product feature (say, a new filter in an app) and see a dip in conversion on a pre-post basis. Two months later we decide to actually test the feature, and the results show that the original variant (the one from before the rollout) performs worse than the rolled-out version. How often have you seen this in your testing? To sum up: how often have you observed users getting used to a new feature (even a suboptimal one), and how do you quantify that effect?

1 Answer

Stable residual lift, net of novelty effects, is hard to quantify precisely because of what you described. Habits form over time, and in UI paradigms there is inertia to deal with as well. One way to approach this is to create long-term holdouts and then compare the held-out population against everyone else. That said, I would recommend keeping these holdouts tiny, and I'd advise against maintaining them for longer than necessary.
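To make the holdout comparison concrete, here is a minimal sketch of how you might compare conversion between the exposed population and a small holdout using a two-proportion z-test. The function name `holdout_lift` and the counts in the usage lines are hypothetical, made up for illustration; this is not how Statsig or any other tool computes it, just the textbook calculation.

```python
import math


def holdout_lift(conv_exposed, n_exposed, conv_holdout, n_holdout):
    """Compare conversion rates of exposed users vs. a long-term holdout.

    All argument names are illustrative. Returns the absolute difference
    in conversion rate, a two-sided p-value from a two-proportion z-test,
    and an approximate 95% confidence interval for the difference.
    """
    p_e = conv_exposed / n_exposed
    p_h = conv_holdout / n_holdout
    diff = p_e - p_h

    # Pooled standard error, used for the z-test under the null of no difference.
    p_pool = (conv_exposed + conv_holdout) / (n_exposed + n_holdout)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_exposed + 1 / n_holdout))
    z = diff / se_pool if se_pool > 0 else 0.0

    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))

    # Unpooled standard error, used for the confidence interval.
    se = math.sqrt(p_e * (1 - p_e) / n_exposed + p_h * (1 - p_h) / n_holdout)
    ci = (diff - 1.96 * se, diff + 1.96 * se)

    return {"diff": diff, "z": z, "p_value": p_value, "ci95": ci}


# Hypothetical counts: a large exposed group and a tiny holdout.
result = holdout_lift(conv_exposed=5200, n_exposed=100_000,
                      conv_holdout=230, n_holdout=5_000)
print(result)
```

Because the holdout is deliberately tiny, its standard error dominates the interval, so expect wide confidence bounds unless the effect is large or the holdout runs long enough to accumulate users.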

There are also backtests, which turn off features after they have been released. That is definitely a poor experience for users, but if you absolutely must, you can use one to measure the impact of your existing features.

In all these cases, I'd recommend using a tool to help with these measurements - something like Statsig could help.

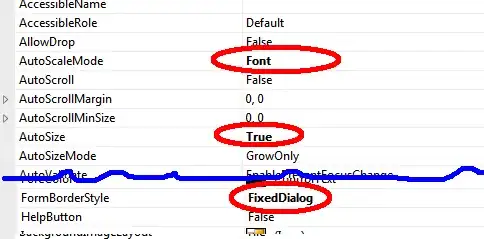

In the picture above, the users who were held out perform worse on multiple metrics, which means the features being held out have a strong positive impact.

Disclaimer: I work at Statsig
