Overview

I am following a tutorial (see below) to find the best fit models from bagged trees, random forests, boosted trees, and general linear models.

Tutorial (see examples below)

https://bcullen.rbind.io/post/2020-06-02-tidymodels-decision-tree-learning-in-r/

Issue

In this case, I would like to explore the data further and visualise the most important predictors (see diagram below) for my data in the random forest model.

My data frame is called FID and the predictors in the random forest model involve:

- Year (numeric)

- Month (Factor)

- Days (numeric)

The dependent variable is Frequency (numeric)

When I try to run the plot to visualise the most important predictor, I keep on getting this error message:-

Error: Problem with `mutate()` input `oob_rmse`.

x non-numeric argument to mathematical function

ℹ Input `oob_rmse` is `map_dbl(fit, ~sqrt(.x$prediction.error))`.

Run `rlang::last_error()` to see where the error occurred.

Called from: signal_abort(cnd)

If anyone has any advice on how to fix the error message, I would be deeply appreciative.

Many thanks in advance

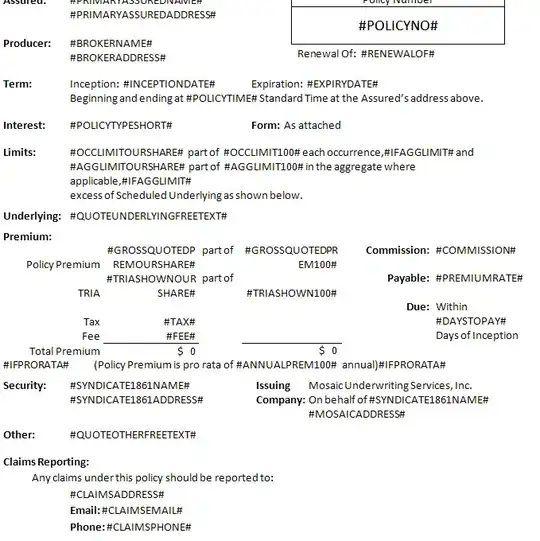

Examples of how to produce the plot from the R-code in the tutorial

Visualise the model

Plot to show the most important predictors from the R-code in the tutorial

My R-code

##Open libraries

library(tidymodels)

library(parsnip)

library(forcats)

library(ranger)

library(baguette)

###########################################################

#split this single dataset into two: a training set and a testing set

data_split <- initial_split(FID)

# Create data frames for the two sets:

train_data <- training(data_split)

test_data <- testing(data_split)

# resample the data with 10-fold cross-validation (10-fold by default)

cv <- vfold_cv(train_data, v=3)

###########################################################

##Produce the recipe

rec <- recipe(Frequency ~ ., data = FID) %>%

step_nzv(all_predictors(), freq_cut = 0, unique_cut = 0) %>% # remove variables with zero variances

step_novel(all_nominal()) %>% # prepares test data to handle previously unseen factor levels

step_medianimpute(all_numeric(), -all_outcomes(), -has_role("id vars")) %>% # replaces missing numeric observations with the median

step_dummy(all_nominal(), -has_role("id vars")) # dummy codes categorical variables

###################################################################################

###################################################

##Random forests

###################################################

mod_rf <-rand_forest(trees = 1e3) %>%

set_engine("ranger",

num.threads = parallel::detectCores(),

importance = "permutation",

verbose = TRUE) %>%

set_mode("regression")

##Create Workflow

wflow_rf <- workflow() %>%

add_model(mod_rf) %>%

add_recipe(rec)

##Fit the model

plan(multisession)

fit_rf<-fit_resamples(

wflow_rf,

cv,

metrics = metric_set(rmse, rsq),

control = control_resamples(save_pred = TRUE,

extract = function(x) extract_model(x)))

# extract roots

rf_tree_roots <- function(x){

map_chr(1:1000,

~ranger::treeInfo(x, tree = .)[1, "splitvarName"])

}

rf_roots <- function(x){

x %>%

dplyr::select(.extracts) %>%

unnest(cols = c(.extracts)) %>%

dplyr::mutate(fit = map(.extracts,

~.x$fit$fit$fit),

oob_rmse = map_dbl(fit,

~sqrt(.x$prediction.error)),

roots = map(fit,

~rf_tree_roots(.))

) %>%

dplyr::select(roots) %>%

unnest(cols = c(roots))

}

##Open a plotting window

dev.new()

# plot

rf_roots(fit_rf) %>%

group_by(roots) %>%

count() %>%

dplyr::arrange(desc(n)) %>%

dplyr::filter(n > 75) %>%

ggplot(aes(fct_reorder(roots, n), n)) +

geom_col() +

coord_flip() +

labs(x = "root", y = "count")

##Error message

Error: Problem with `mutate()` input `oob_rmse`.

x non-numeric argument to mathematical function

ℹ Input `oob_rmse` is `map_dbl(fit, ~sqrt(.x$prediction.error))`.

Run `rlang::last_error()` to see where the error occurred.

Called from: signal_abort(cnd)

Data frame - FID

structure(list(Year = c(2015, 2015, 2015, 2015, 2015, 2015, 2015,

2015, 2015, 2015, 2015, 2015, 2016, 2016, 2016, 2016, 2016, 2016,

2016, 2016, 2016, 2016, 2016, 2016, 2017, 2017, 2017, 2017, 2017,

2017, 2017, 2017, 2017, 2017, 2017, 2017), Month = structure(c(1L,

2L, 3L, 4L, 5L, 6L, 7L, 8L, 9L, 10L, 11L, 12L, 1L, 2L, 3L, 4L,

5L, 6L, 7L, 8L, 9L, 10L, 11L, 12L, 1L, 2L, 3L, 4L, 5L, 6L, 7L,

8L, 9L, 10L, 11L, 12L), .Label = c("January", "February", "March",

"April", "May", "June", "July", "August", "September", "October",

"November", "December"), class = "factor"), Frequency = c(36,

28, 39, 46, 5, 0, 0, 22, 10, 15, 8, 33, 33, 29, 31, 23, 8, 9,

7, 40, 41, 41, 30, 30, 44, 37, 41, 42, 20, 0, 7, 27, 35, 27,

43, 38), Days = c(31, 28, 31, 30, 6, 0, 0, 29, 15,

29, 29, 31, 31, 29, 30, 30, 7, 0, 7, 30, 30, 31, 30, 27, 31,

28, 30, 30, 21, 0, 7, 26, 29, 27, 29, 29)), row.names = c(NA,

-36L), class = "data.frame")