I have a dataset with y.size and x.number. I am trying to compare the AIC value for a linear regression estimation of the model and a custom model. I was able to successfully run the estimates for the linear regression. The custom model estimation produces this error "Error in optim(start, f, method = method, hessian = TRUE, ...) : non-finite finite-difference value [2]" I am a newbie to ML models, so any help would be appreciated.

y.size <- c(2.69,4.1,8.04,3.1,5.27,5.033333333,3.2,7.25,6.29,4.55,6.1,2.65,3.145,3.775,3.46,5.73,5.31,4.425,3.725,4.32,5,3.09,5.25,5.65,3.48,6.1,10,9.666666667,6.06,5.9,2.665,4.32,3.816666667,3.69,5.8,5,3.72,3.045,4.485,3.642857143,5.5,6.333333333,4.75,6,7.466666667,5.03,5.23,4.85,5.59,5.96,5.33,4.92,4.255555556,6.346666667,4.13,6.33,4,7.35,6.35,4.63,5.13,7.4,4.28,4.233333333,4.3125,6.18,4.3,4.47,4.88,4.5,2.96,2.1,3.7,3.62,5.42,3.8,5.5,3.27,3.36,3.266666667,2.265,3.1,2.51,2.51,4.4,2.64,4.38,4.53,2.29,2.87,3.395,3.26,2.77,3.22,4.31,4.73,4.05,3.48,4.8,4.7,3.05,4.21,5.95,4.39,4.55,4.27,4.955,4.65,3.32,3.48,3.828571429,4.69,4.68,3.76,3.91,4,4.41,4.19,4.733333333,4.32,2.83,3.41,4.42,3.47,3.84,4.39)

x.number <- c(69,62,8,80,13,12,2,22,19,49,840,44,31,56,33,58,91,8,15,86,11,69,12,24,32,27,1,4,26,4,28,33,1516,41,20,58,44,29,58,14,3,3,6,3,26,52,26,29,92,30,18,11,27,19,38,78,57,52,17,45,56,7,37,7,14,13,164,76,82,14,273,122,662,434,126,374,1017,522,374,602,164,5,191,243,134,70,23,130,306,516,414,236,172,164,92,53,50,17,22,27,92,48,30,55,28,296,35,12,350,17,22,53,97,62,92,272,242,170,37,220,452,270,392,314,150,232)

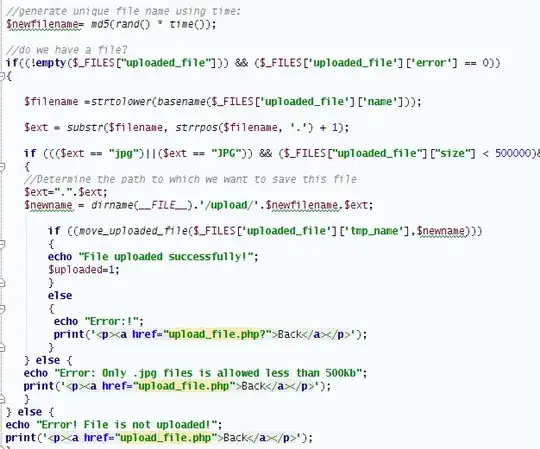

require(bbmle)

linreg <- function(a, b, sigma){

y.pred <- a + b * x.number

-sum(dnorm(y.size, mean = y.pred, sd = sigma, log=TRUE ))

}

mle2.linreg.model <- mle(linreg, start = list(a = 5, b = -0.01 , sigma = 1))

summary(mle2.linreg.model)

-logLik(mle2.linreg.model)

AIC(mle2.linreg.model)

skewfun <- function(aa,bb, Tmin, Tmax, sigma){

y.pred <- aa * x.number * (x.number - Tmin) * ((Tmax - x.number )^(1/bb)) + 2

-sum(dnorm(y.size, mean = y.pred, sd = sigma, log=TRUE ))

}

mle2.skewfun.model <- mle(skewfun, start = list(aa = 4/10^27, bb = 1/10 , Tmin = 2 , Tmax = 300, sigma = 0.1))

EDIT: After trying the initial answer provided by Simon Woodward, I tried the right skew function with new parameter estimates but I got a similar Error: Error in solve.default(oout$hessian) : Lapack routine dgesv: system is exactly singular: U[2,2] = 0. I custom fit the function to get an idea on how the curve should look for this dummy data. I used these parameters to run the ML when I ran into the error. Here is how the raw data + curve looks like. Below is the code used to generate it

y.size <- c(2.69,4.1,8.04,3.1,5.27,5.033333333,3.2,7.25,6.29,4.55,6.1,2.65,3.145,3.775,3.46,5.73,5.31,4.425,3.725,4.32,5,3.09,5.25,5.65,3.48,6.1,10,9.666666667,6.06,5.9,2.665,4.32,3.816666667,3.69,5.8,5,3.72,3.045,4.485,3.642857143,5.5,6.333333333,4.75,6,7.466666667,5.03,5.23,4.85,5.59,5.96,5.33,4.92,4.255555556,6.346666667,4.13,6.33,4,7.35,6.35,4.63,5.13,7.4,4.28,4.233333333,4.3125,6.18,4.3,4.47,4.88,4.5,2.96,2.1,3.7,3.62,5.42,3.8,5.5,3.27,3.36,3.266666667,2.265,3.1,2.51,2.51,4.4,2.64,4.38,4.53,2.29,2.87,3.395,3.26,2.77,3.22,4.31,4.73,4.05,3.48,4.8,4.7,3.05,4.21,5.95,4.39,4.55,4.27,4.955,4.65,3.32,3.48,3.828571429,4.69,4.68,3.76,3.91,4,4.41,4.19,4.733333333,4.32,2.83,3.41,4.42,3.47,3.84,4.39)

x.number <- c(69,62,8,80,13,12,2,22,19,49,840,44,31,56,33,58,91,8,15,86,11,69,12,24,32,27,1,4,26,4,28,33,1516,41,20,58,44,29,58,14,3,3,6,3,26,52,26,29,92,30,18,11,27,19,38,78,57,52,17,45,56,7,37,7,14,13,164,76,82,14,273,122,662,434,126,374,1017,522,374,602,164,5,191,243,134,70,23,130,306,516,414,236,172,164,92,53,50,17,22,27,92,48,30,55,28,296,35,12,350,17,22,53,97,62,92,272,242,170,37,220,452,270,392,314,150,232)

df <- data.frame(x.number, y.size)

df <- df[df$x.number < 750,]

aa <- 1.25/10^88

bb <- 1/30

Tdata <- df$x.number

Tmin <- 1

Tmax <- 750

y.pred <- (aa * df$x.number * (df$x.number - Tmin) * abs(Tmax - df$x.number) ^ (1/bb)) + 3

raw.data <- df$y.size

min = Tdata - Tmin

max = Tmax - Tdata

df1 <- data.frame(df$x.number, min, max, y.pred, raw.data)

library(tidyr)

df.long <- gather(df1, data.type, data.measurement, y.pred:raw.data)

#ggplot(aes(x = Tdata , y = raw.data), data = df1) + geom_point()

ggplot(aes(x = Tdata , y = y.pred), data = df1) + geom_point()

library(ggplot2)

ggplot(aes(x = df.x.number , y = data.measurement, color = data.type), data = df.long) + geom_point()