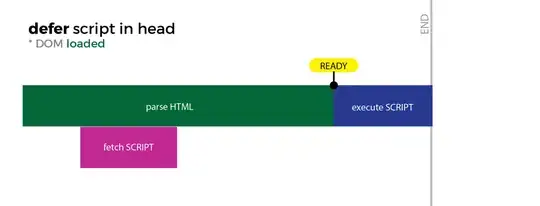

I am building a time series forecasting model that consists of two BiLSTM layers followed by a dense layer. I need to forecast values for 120 products in total, and my dataset is relatively small (monthly data covering 2 years, i.e. at most 24 time steps). When I look at the overall validation accuracy, I get this:

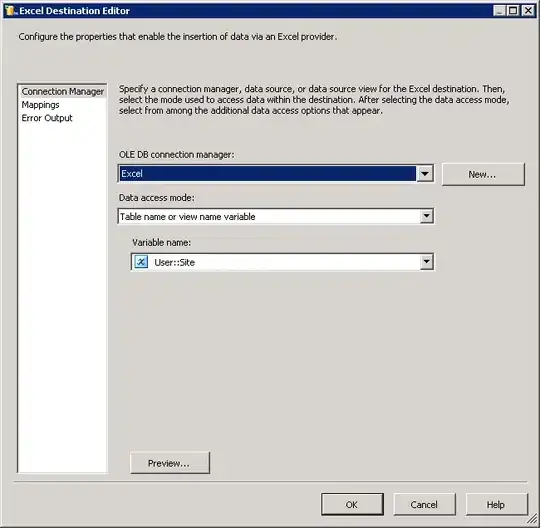
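To make the "relatively small dataset" concrete, here is a minimal sketch of how many training samples one product yields. It assumes a single-step-ahead sliding-window setup with a hypothetical lookback of 12 months; `make_windows` is an illustrative helper, not part of the original model.

```python
def make_windows(series, lookback, horizon=1):
    """Split one product's series into (input, target) pairs with a sliding window."""
    X, y = [], []
    # The last window must still leave `horizon` points for its target.
    for start in range(len(series) - lookback - horizon + 1):
        X.append(series[start:start + lookback])
        y.append(series[start + lookback:start + lookback + horizon])
    return X, y


# 24 monthly observations for one (hypothetical) product.
monthly_sales = list(range(24))
X, y = make_windows(monthly_sales, lookback=12, horizon=1)
print(len(X))  # 12
```

With this setup each product contributes only 12 heavily overlapping windows, so even a model shared across all 120 products sees about 1,440 windows before any months are held out for validation.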

At every epoch, I save the model weights so that I can reload any of these checkpoints later.

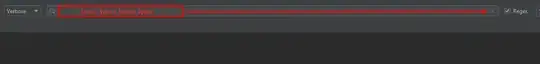

When I look at the validation accuracy of individual products, I get the following (shown here for only a few products):

For this product, can I use the model saved at epoch ~90 to forecast it?

For the following product, can I use the model saved at epoch ~40 instead?
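The per-product choice described above amounts to an argmin over epochs, done separately for each product. A minimal sketch, assuming the per-epoch, per-product validation losses have been recorded in a dict. If the checkpoints come from Keras's `ModelCheckpoint` callback with `save_weights_only=True` and an `{epoch}` placeholder in `filepath`, the chosen epoch maps directly to a file; the filename pattern and loss values below are hypothetical.

```python
# Hypothetical checkpoint naming scheme (one weights file per epoch).
CHECKPOINT_PATTERN = "ckpt_epoch_{epoch:03d}.weights.h5"


def select_epoch_per_product(val_loss):
    """val_loss maps epoch -> {product_id: validation loss}.

    Returns {product_id: epoch with the lowest validation loss for that product}.
    On ties, the epoch encountered first is kept.
    """
    best = {}
    for epoch, losses in val_loss.items():
        for product, loss in losses.items():
            if product not in best or loss < best[product][1]:
                best[product] = (epoch, loss)
    return {product: epoch for product, (epoch, _) in best.items()}


# Hypothetical per-epoch validation losses for two products.
val_loss = {
    40: {"A": 0.30, "B": 0.12},
    90: {"A": 0.18, "B": 0.25},
    120: {"A": 0.22, "B": 0.27},
}
best = select_epoch_per_product(val_loss)
paths = {p: CHECKPOINT_PATTERN.format(epoch=e) for p, e in best.items()}
print(best)  # {'A': 90, 'B': 40}
```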

Am I cheating? Note that the products are quite diverse and their purchasing behavior differs from one product to another. To me, this strategy is equivalent to training 120 models (one per product) while, as a bonus, feeding each model more data, in the hope of achieving better per-product accuracy. Is this a fair assumption?
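One way to probe the "cheating" concern is to check how much of the per-product gain comes from the selection itself. The sketch below simulates an extreme, assumed worst case in which every checkpoint is equally good: validation and test losses are independent noise around the same mean. Picking the best epoch per product on the validation split then makes that split look better than it is, while a split that played no part in the choice stays unbiased. The noise model and the function name are hypothetical and only isolate the selection effect; real losses are not pure noise.

```python
import random
import statistics


def selection_bias_demo(n_products=120, n_epochs=100, seed=0):
    """Pick each product's best epoch on validation loss, then compare the
    validation loss at that epoch with a held-out test loss at the same epoch.

    All losses are drawn from the same distribution (mean 1.0), so any gap
    between the two averages is caused purely by the selection step.
    """
    rng = random.Random(seed)
    chosen_val, chosen_test = [], []
    for _ in range(n_products):
        val = [rng.gauss(1.0, 0.1) for _ in range(n_epochs)]
        test = [rng.gauss(1.0, 0.1) for _ in range(n_epochs)]
        best_epoch = min(range(n_epochs), key=val.__getitem__)
        chosen_val.append(val[best_epoch])
        chosen_test.append(test[best_epoch])
    return statistics.mean(chosen_val), statistics.mean(chosen_test)


val_score, test_score = selection_bias_demo()
print(f"validation loss at chosen epochs: {val_score:.3f}")
print(f"test loss at the same epochs:     {test_score:.3f}")
```

With these settings the first number lands well below 1.0 (around 0.75) while the second stays close to 1.0, so per-product improvements are only trustworthy if they also show up on months that were not used to choose the epoch.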

Any help is much appreciated!