I have a DQN agent that receives a state composed of a numerical value indicating its position and a 2D array denoting the requests from a number of users.

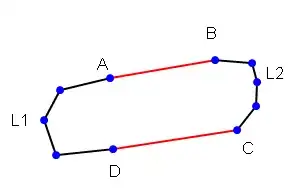

My attempt at architecting the neural network was as described here.

The issue is in the `model.predict()` call, which is written like this:

```python
target_f = self.model.predict(state)
```

inside this method:

```python
def replay(self, batch_size):  # method that trains NN with experiences sampled from memory
    minibatch = sample(self.memory, batch_size)
    for state, action, reward, next_state, done in minibatch:
        target = reward
        if not done:
            target = (reward + self.gamma * np.amax(self.model.predict(next_state)[0]))
        target_f = self.model.predict(state)
        target_f[0][action] = target
        self.model.fit(state, target_f, epochs=1, verbose=0)
    if self.epsilon > self.epsilon_min:
        self.epsilon *= self.epsilon_decay
```
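For reference, the target computed inside `replay()` is the one-step Q-learning target: `reward + gamma * max(Q(next_state))` for non-terminal transitions and just `reward` for terminal ones. A minimal NumPy sketch of the same computation over a whole minibatch at once, assuming the Q-values for the current and next states have already been predicted as `(batch, n_actions)` arrays; `dqn_targets` and its parameter names are hypothetical:

```python
import numpy as np


def dqn_targets(q_current, q_next, actions, rewards, dones, gamma):
    """Return a copy of q_current in which each taken action's value is
    replaced by the one-step Q-learning target (vectorized replay())."""
    targets = np.array(q_current, dtype=np.float64, copy=True)
    rewards = np.asarray(rewards, dtype=np.float64)
    # done is True for terminal transitions: no bootstrapped future value
    not_done = 1.0 - np.asarray(dones, dtype=np.float64)
    bootstrap = gamma * np.max(np.asarray(q_next, dtype=np.float64), axis=1) * not_done
    rows = np.arange(targets.shape[0])
    targets[rows, np.asarray(actions)] = rewards + bootstrap
    return targets


t = dqn_targets([[1.0, 2.0], [3.0, 4.0]],   # Q(state) for 2 samples
                [[0.5, 1.5], [2.0, 0.0]],   # Q(next_state)
                actions=[0, 1], rewards=[1.0, -1.0],
                dones=[False, True], gamma=0.9)
print(t)  # row 0: 1 + 0.9 * 1.5 = 2.35; row 1 is terminal, so just -1
```

Batching like this would let `predict()` run once per minibatch instead of twice per transition. Note also that the existing `fit(state, ...)` call receives the same raw state tuple as `predict()`, so it would need the same input conversion.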

Here, a state can look like `(agentPosition, [[1, 0, 0], [0, 0, 0], [0, 1, 0], ...])`.

With 11 users, for example, the shape of the state is (2, (11, 3)): the position, plus a request array with one row per user.
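A Keras model with two `Input` layers expects `predict()` to receive a list of two NumPy arrays, one per input, each with a leading batch axis, rather than the raw `(position, requests)` tuple. A minimal sketch of a conversion helper, assuming (hypothetically) that the position input takes one value per sample and the request input takes an `(n_users, 3)` array per sample; `to_model_inputs` is an illustrative name, not part of Keras:

```python
import numpy as np


def to_model_inputs(state):
    """Split an (agentPosition, requests) state into batched model inputs.

    Assumes a two-input model whose first input takes a single position
    value per sample and whose second input takes an (n_users, 3) matrix.
    """
    position, requests = state
    # 0-d scalar -> shape (1, 1): one sample with one feature
    position_batch = np.asarray(position, dtype=np.float32).reshape(1, 1)
    # (n_users, 3) -> (1, n_users, 3): add the batch axis in front
    requests_batch = np.asarray(requests, dtype=np.float32)[np.newaxis, ...]
    return [position_batch, requests_batch]


state = (54, ((1, 0, 0), (0, 0, 0), (0, 0, 1)))
pos, req = to_model_inputs(state)
print(pos.shape, req.shape)  # (1, 1) (1, 3, 3)
```

Under those assumptions, the call would become `self.model.predict(to_model_inputs(state))`.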

The error says:

```
ValueError: Failed to find data adapter that can handle input: (<class 'list'> containing values of types {"<class 'numpy.int32'>", '(<class \'list\'> containing values of types {"<class \'numpy.ndarray\'>"})'}), <class 'NoneType'>
```

If instead I write it like this:

```python
target_f = self.model.predict(np.array([state[0], state[1]]))
```

The error is then different:

```
ValueError: Error when checking model input: the list of Numpy arrays that you are passing to your model is not the size the model expected. Expected to see 2 array(s), for inputs ['dense_63_input', 'input_20'] but instead got the following list of 1 arrays: [array([[54],
       [((1, 0, 0), (0, 0, 0), (0, 0, 1), (0, 0, 1), (0, 0, 0), (0, 1, 0), (0, 0, 1), (0, 0, 1), (1, 0, 0), (1, 0, 0), (0, 0, 1))]],
      dtype=object)]...
```
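The message names two model inputs (`dense_63_input` and `input_20`), so `predict()` needs a Python list of two arrays, whereas `np.array([state[0], state[1]])` packed both parts into a single object array, as the printed `dtype=object` shows. A sketch of building the two arrays for a whole minibatch of states, under the hypothetical assumption that every position is a scalar and every request matrix has the same shape; `stack_states` is an illustrative name:

```python
import numpy as np


def stack_states(states):
    """Turn a list of (position, requests) tuples into the list of two
    arrays a two-input model expects: [(batch, 1), (batch, n_users, 3)]."""
    positions = np.array([s[0] for s in states], dtype=np.float32).reshape(-1, 1)
    # np.stack adds a new leading batch axis over equally shaped matrices
    requests = np.stack([np.asarray(s[1], dtype=np.float32) for s in states])
    return [positions, requests]


batch = [(54, ((1, 0, 0), (0, 1, 0))), (3, ((0, 0, 1), (0, 0, 0)))]
x = stack_states(batch)
print(x[0].shape, x[1].shape)  # (2, 1) (2, 2, 3)
```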

Edit: I did as the accepted answer suggests, and now I get this error:

```
IndexError Traceback (most recent call last)
<ipython-input-25-e99a46675915> in <module>
28
29 if len(agent.memory) > batch_size:
---> 30 agent.replay(batch_size) # train the agent by replaying the experiences of the episode
31
32 if e % 100 == 0:

<ipython-input-23-91f0ef2e2650> in replay(self, batch_size)
129 np.array(next_state[1]) ])[0])) # (maximum target Q based on future action a')
130 target_f = self.model.predict( [ np.array(state[0]), \
--> 131 np.array(state[1]) ] ) # approximately map current state to future discounted reward
132 target_f[0][action] = target
133 self.model.fit(state, target_f, epochs=1, verbose=0)

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training.py in predict(self, x, batch_size, verbose, steps, callbacks, max_queue_size, workers, use_multiprocessing)
1011 max_queue_size=max_queue_size,
1012 workers=workers,
-> 1013 use_multiprocessing=use_multiprocessing)
1014
1015 def reset_metrics(self):

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training_v2.py in predict(self, model, x, batch_size, verbose, steps, callbacks, max_queue_size, workers, use_multiprocessing, **kwargs)
496 model, ModeKeys.PREDICT, x=x, batch_size=batch_size, verbose=verbose,
497 steps=steps, callbacks=callbacks, max_queue_size=max_queue_size,
--> 498 workers=workers, use_multiprocessing=use_multiprocessing, **kwargs)
499
500

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training_v2.py in _model_iteration(self, model, mode, x, y, batch_size, verbose, sample_weight, steps, callbacks, max_queue_size, workers, use_multiprocessing, **kwargs)
408 strategy = _get_distribution_strategy(model)
409 batch_size, steps = dist_utils.process_batch_and_step_size(
--> 410 strategy, x, batch_size, steps, mode)
411 dist_utils.validate_callbacks(input_callbacks=callbacks,
412 optimizer=model.optimizer)

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\distribute\distributed_training_utils.py in process_batch_and_step_size(strategy, inputs, batch_size, steps_per_epoch, mode, validation_split)
460 first_x_value = nest.flatten(inputs)[0]
461 if isinstance(first_x_value, np.ndarray):
--> 462 num_samples = first_x_value.shape[0]
463 if validation_split and 0. < validation_split < 1.:
464 num_samples = int(num_samples * (1 - validation_split))

IndexError: tuple index out of range
```

`state[1]` is a tuple like `((1, 0, 0), (0, 1, 0), ...)`.

The shape of `np.array(state[0])` is `()`.

The shape of `np.array(state[1])` is `(11, 3)`.
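The shape `()` accounts for the `IndexError`: Keras reads the number of samples from `shape[0]` of the first input array (the `first_x_value.shape[0]` line in the traceback), and a 0-d array has no axis 0. A small NumPy illustration, where 54 stands in for an arbitrary position:

```python
import numpy as np

position = np.array(54)           # 0-d array, like np.array(state[0])
print(position.shape)             # ()
print(position.ndim)              # 0

try:
    position.shape[0]             # what Keras does to count samples
except IndexError as exc:
    print("IndexError:", exc)     # IndexError: tuple index out of range

batched = position.reshape(1, 1)  # one sample with one feature
print(batched.shape[0])           # 1
```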

If I write:

```python
self.model.predict( [ np.array(state[0]).reshape(-1,1), np.array(state[1]) ] )
```

I get this error instead:

```
ValueError Traceback (most recent call last)
<ipython-input-28-e99a46675915> in <module>
28
29 if len(agent.memory) > batch_size:
---> 30 agent.replay(batch_size) # train the agent by replaying the experiences of the episode
31
32 if e % 100 == 0:

<ipython-input-26-5df3ba3feb8f> in replay(self, batch_size)
129 np.array(next_state[1]) ])[0])) # (maximum target Q based on future action a')
130 target_f = self.model.predict( [ np.array(state[0]).reshape(-1, 1), \
--> 131 np.array(state[1]) ] ) # approximately map current state to future discounted reward
132 target_f[0][action] = target
133 self.model.fit(state, target_f, epochs=1, verbose=0)

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training.py in predict(self, x, batch_size, verbose, steps, callbacks, max_queue_size, workers, use_multiprocessing)
1011 max_queue_size=max_queue_size,
1012 workers=workers,
-> 1013 use_multiprocessing=use_multiprocessing)
1014
1015 def reset_metrics(self):

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training_v2.py in predict(self, model, x, batch_size, verbose, steps, callbacks, max_queue_size, workers, use_multiprocessing, **kwargs)
496 model, ModeKeys.PREDICT, x=x, batch_size=batch_size, verbose=verbose,
497 steps=steps, callbacks=callbacks, max_queue_size=max_queue_size,
--> 498 workers=workers, use_multiprocessing=use_multiprocessing, **kwargs)
499
500

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training_v2.py in _model_iteration(self, model, mode, x, y, batch_size, verbose, sample_weight, steps, callbacks, max_queue_size, workers, use_multiprocessing, **kwargs)
424 max_queue_size=max_queue_size,
425 workers=workers,
--> 426 use_multiprocessing=use_multiprocessing)
427 total_samples = _get_total_number_of_samples(adapter)
428 use_sample = total_samples is not None

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training_v2.py in _process_inputs(model, mode, x, y, batch_size, epochs, sample_weights, class_weights, shuffle, steps, distribution_strategy, max_queue_size, workers, use_multiprocessing)
644 standardize_function = None
645 x, y, sample_weights = standardize(
--> 646 x, y, sample_weight=sample_weights)
647 elif adapter_cls is data_adapter.ListsOfScalarsDataAdapter:
648 standardize_function = standardize

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training.py in _standardize_user_data(self, x, y, sample_weight, class_weight, batch_size, check_steps, steps_name, steps, validation_split, shuffle, extract_tensors_from_dataset)
2381 is_dataset=is_dataset,
2382 class_weight=class_weight,
-> 2383 batch_size=batch_size)
2384
2385 def _standardize_tensors(self, x, y, sample_weight, run_eagerly, dict_inputs,

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training.py in _standardize_tensors(self, x, y, sample_weight, run_eagerly, dict_inputs, is_dataset, class_weight, batch_size)
2408 feed_input_shapes,
2409 check_batch_axis=False, # Don't enforce the batch size.
-> 2410 exception_prefix='input')
2411
2412 # Get typespecs for the input data and sanitize it if necessary.

C:\Anaconda\envs\tensorflow\lib\site-packages\tensorflow_core\python\keras\engine\training_utils.py in standardize_input_data(data, names, shapes, check_batch_axis, exception_prefix)
571 ': expected ' + names[i] + ' to have ' +
572 str(len(shape)) + ' dimensions, but got array '
--> 573 'with shape ' + str(data_shape))
574 if not check_batch_axis:
575 data_shape = data_shape[1:]

ValueError: Error when checking input: expected dense_42_input to have 3 dimensions, but got array with shape (1, 1)
```
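Keras counts the batch axis in that dimension check, so "expected `dense_42_input` to have 3 dimensions" suggests the Dense branch was declared with a two-dimensional per-sample shape, for example `input_shape=(1, 1)`. The model definition is not shown here, so that is an assumption. Either the position would have to be fed as `(batch, 1, 1)`, or the branch would be declared with a one-dimensional per-sample shape such as `(1,)` so that a `(1, 1)` array fits. A sketch of the first option, with `as_model_input` as a hypothetical helper:

```python
import numpy as np


def as_model_input(value, per_sample_shape):
    """Reshape one sample to (1, *per_sample_shape), i.e. prepend the batch
    axis Keras expects in front of the shape a layer was declared with."""
    arr = np.asarray(value, dtype=np.float32)
    return arr.reshape((1,) + tuple(per_sample_shape))


# Hypothetical: the Dense branch was declared with input_shape=(1, 1)
position = as_model_input(54, (1, 1))
requests = as_model_input(((1, 0, 0),) * 11, (11, 3))
print(position.shape, requests.shape)  # (1, 1, 1) (1, 11, 3)
```

Changing the declared input shape instead is usually simpler, because a scalar position has no natural second axis.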