In my understanding columnar format is better for MapReduce tasks. Even for something like selection of some columns, columnar works well as we don't have to load other columns into memory.

But in Spark 3.0 I'm seeing this ColumnarToRow operation being applied in the query plans which from what I could understand from the docs converts the data into row format.

How is it more efficient than the columnar representation, what are the insights that govern application of this rule?

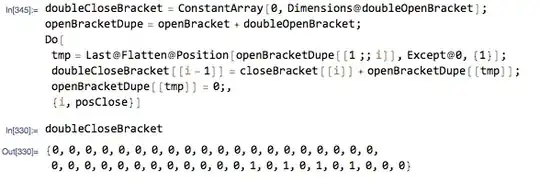

For the following code I've attached the query plan.

import pandas as pd

df = pd.DataFrame({

'a': [i for i in range(2000)],

'b': [i for i in reversed(range(2000))],

})

df = spark.createDataFrame(df)

df.cache()

df.select('a').filter('a > 500').show()