I will give you two suggestions here for how to find the centroids of these disks, you can pick one depending on the level of precision you need.

First of all, using contours is not the best method. Contours depend a lot on which pixels happen to fall within the object on thresholding, noise affects these a lot.

A better method is to find the center of mass (or rather, the first order moments) of the disks. Read Wikipedia to learn more about moments in image analysis. One nice thing about moments is that we can use pixel values as weights, increasing precision.

You can compute the moments of a binary shape from its contours, but you cannot use image intensities in this case. OpenCV has a function cv::moments that computes the moments for the whole image, but I don't know of a function that can do this for each object separately. So instead I'll be using DIPlib for these computations (I'm an author).

Regarding the filtering:

Any well-behaved linear smoothing should not affect the center of mass of the objects, as long as the objects are far enough from the image edge. Being close to the edge will cause the blur to do something different on the side of the object closest to the edge compared to the other sides, introducing a bias.

Any non-linear smoothing filter has the ability to change the center of mass. Please avoid the median filter.

So, I recommend that you use a Gaussian filter, which is the most well-behaved linear smoothing filter.

Method 1: use binary shape's moments:

First I'm going to threshold without any form of blurring.

import diplib as dip

a = dip.ImageRead('/Users/cris/Downloads/Ef8ey.png')

a = a(1) # Use green channel only, simple way to convert to gray scale

_, t = dip.Threshold(a)

b = a<t

m = dip.Label(b)

msr = dip.MeasurementTool.Measure(m, None, ['Center'])

print(msr)

This outputs

| Center |

- | ----------------------- |

| dim0 | dim1 |

| (px) | (px) |

- | ---------- | ---------- |

1 | 18.68 | 9.234 |

2 | 68.00 | 14.26 |

3 | 19.49 | 48.22 |

4 | 59.68 | 52.42 |

We can now apply a smoothing to the input image a and compute again:

a = dip.Gauss(a,2)

_, t = dip.Threshold(a)

b = a<t

m = dip.Label(b)

msr = dip.MeasurementTool.Measure(m, None, ['Center'])

print(msr)

| Center |

- | ----------------------- |

| dim0 | dim1 |

| (px) | (px) |

- | ---------- | ---------- |

1 | 18.82 | 9.177 |

2 | 67.74 | 14.27 |

3 | 19.51 | 47.95 |

4 | 59.89 | 52.39 |

You can see there's some small change in the centroids.

Method 2: use gray scale moments:

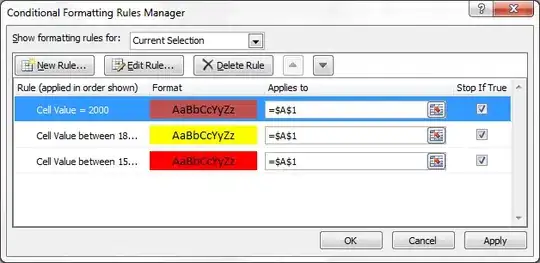

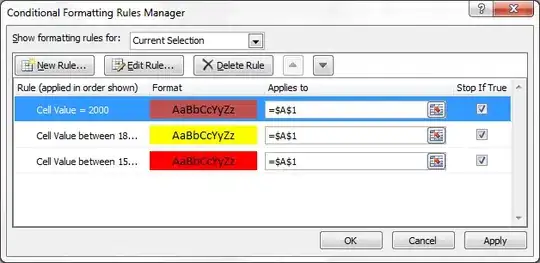

Here we use the error function to apply a pseudo-threshold to the image. What this does is set object pixels to 1 and background pixels to 0, but pixels around the edges retain some intermediate value. Some people refer to this as a "fuzzy thresholding". These two images show the normal ("hard") threshold, and the error function clip ("fuzzy threshold"):

By using this fuzzy threshold, we retain more information about the exact (sub-pixel) location of the edges, which we can use when computing the first order moments.

import diplib as dip

a = dip.ImageRead('/Users/cris/Downloads/Ef8ey.png')

a = a(1) # Use green channel only, simple way to convert to gray scale

_, t = dip.Threshold(a)

c = dip.ContrastStretch(-dip.ErfClip(a, t, 30))

m = dip.Label(a<t)

m = dip.GrowRegions(m, None, -2, 2)

msr = dip.MeasurementTool.Measure(m, c, ['Gravity'])

print(msr)

This outputs

| Gravity |

- | ----------------------- |

| dim0 | dim1 |

| (px) | (px) |

- | ---------- | ---------- |

1 | 18.75 | 9.138 |

2 | 67.89 | 14.22 |

3 | 19.50 | 48.02 |

4 | 59.79 | 52.38 |

We can now apply a smoothing to the input image a and compute again:

a = dip.Gauss(a,2)

_, t = dip.Threshold(a)

c = dip.ContrastStretch(-dip.ErfClip(a, t, 30))

m = dip.Label(a<t)

m = dip.GrowRegions(m, None, -2, 2)

msr = dip.MeasurementTool.Measure(m, c, ['Gravity'])

print(msr)

| Gravity |

- | ----------------------- |

| dim0 | dim1 |

| (px) | (px) |

- | ---------- | ---------- |

1 | 18.76 | 9.094 |

2 | 67.87 | 14.19 |

3 | 19.50 | 48.00 |

4 | 59.81 | 52.39 |

You can see the differences are smaller this time, because the measurement is more precise.

In the binary case, the differences in centroids with and without smoothing are:

array([[ 0.14768417, -0.05677508],

[-0.256 , 0.01668085],

[ 0.02071882, -0.27547569],

[ 0.2137167 , -0.03472741]])

In the gray-scale case, the differences are:

array([[ 0.01277204, -0.04444567],

[-0.02842993, -0.0276569 ],

[-0.00023144, -0.01711335],

[ 0.01776011, 0.01123299]])

If the centroid measurement is given in µm rather than px, it is because your image file contains pixel size information. The measurement function will use this to give you real-world measurements (the centroid coordinate is w.r.t. the top-left pixel). If you do not desire this, you can reset the image's pixel size:

a.SetPixelSize(1)

The two methods in C++

This is a translation to C++ of the code above, including a display step to double-check that the thresholding produced the right result:

#include "diplib.h"

#include "dipviewer.h"

#include "diplib/simple_file_io.h"

#include "diplib/linear.h" // for dip::Gauss()

#include "diplib/segmentation.h" // for dip::Threshold()

#include "diplib/regions.h" // for dip::Label()

#include "diplib/measurement.h"

#include "diplib/mapping.h" // for dip::ContrastStretch() and dip::ErfClip()

int main() {

auto a = dip::ImageRead("/Users/cris/Downloads/Ef8ey.png");

a = a[1]; // Use green channel only, simple way to convert to gray scale

dip::Gauss(a, a, {2});

dip::Image b;

double t = dip::Threshold(a, b);

b = a < t; // Or: dip::Invert(b,b);

dip::viewer::Show(a);

dip::viewer::Show(b); // Verify that the segmentation is correct

dip::viewer::Spin();

auto m = dip::Label(b);

dip::MeasurementTool measurementTool;

auto msr = measurementTool.Measure(m, {}, { "Center"});

std::cout << msr << '\n';

auto c = dip::ContrastStretch(-dip::ErfClip(a, t, 30));

dip::GrowRegions(m, {}, m, -2, 2);

msr = measurementTool.Measure(m, c, {"Gravity"});

std::cout << msr << '\n';

// Iterate through the measurement structure:

auto it = msr["Gravity"].FirstObject();

do {

std::cout << "Centroid coordinates = " << it[0] << ", " << it[1] << '\n';

} while(++it);

}