I am having trouble with the steps_per_epoch parameter of model.fit. To my knowledge, steps_per_epoch is used to log metrics, such as the loss, after a given number of iterations, i.e., after feeding a given number of batches to the model. While training, we should therefore be able to see the loss after a few iterations instead of having to wait until the end of the epoch. Because my dataset is small, I expect the model to learn the pattern within just a few epochs, so I am interested in seeing how the model behaves after every n iterations.

First, please note that I am using TensorFlow 2.3.0.

Here is the data preprocessing logic (this is customized for time series data).

import tensorflow as tf

window_size = 42
batch_size = 32
forecast_period = 3

def _sub_to_batch(sub):
    # Each window is itself a dataset; collapse it into one tensor of window_size rows.
    return sub.batch(window_size, drop_remainder=True)

def _return_input_output(tensor):
    # Input drops the last forecast_period steps; output starts forecast_period steps later.
    _input = tensor[:, :-forecast_period, :]
    _output = tensor[:, forecast_period:, :]
    return _input, _output

def _reshape_tensor(tensor):
    tensor = tf.expand_dims(tensor, axis=-1)
    tensor = tf.transpose(tensor, [1, 0, 2])
    return tensor

# total elements after unbatch(): 3813
train_ts_dataset = tf.data.Dataset.from_tensor_slices(train_ts)\
    .window(window_size, shift=1)\
    .flat_map(_sub_to_batch)\
    .map(_reshape_tensor)\
    .unbatch().shuffle(buffer_size=500).batch(batch_size)\
    .map(_return_input_output)

valid_ts_dataset = tf.data.Dataset.from_tensor_slices(valid_ts)\
    .window(window_size, shift=1)\
    .flat_map(_sub_to_batch)\
    .map(_reshape_tensor)\
    .unbatch().shuffle(buffer_size=500).batch(batch_size)\
    .map(_return_input_output)

for i, (x, y) in enumerate(train_ts_dataset):
    print(i, x.numpy().shape, y.numpy().shape)

And the output:

0 (32, 39, 1) (32, 39, 1)
1 (32, 39, 1) (32, 39, 1)
2 (32, 39, 1) (32, 39, 1)
3 (32, 39, 1) (32, 39, 1)
4 (32, 39, 1) (32, 39, 1)
5 (32, 39, 1) (32, 39, 1)
6 (32, 39, 1) (32, 39, 1)
7 (32, 39, 1) (32, 39, 1)
8 (32, 39, 1) (32, 39, 1)
9 (32, 39, 1) (32, 39, 1)
10 (32, 39, 1) (32, 39, 1)
11 (32, 39, 1) (32, 39, 1)
12 (32, 39, 1) (32, 39, 1)
13 (32, 39, 1) (32, 39, 1)
14 (32, 39, 1) (32, 39, 1)
15 (32, 39, 1) (32, 39, 1)
16 (32, 39, 1) (32, 39, 1)
17 (32, 39, 1) (32, 39, 1)
18 (32, 39, 1) (32, 39, 1)
19 (32, 39, 1) (32, 39, 1)
20 (32, 39, 1) (32, 39, 1)
21 (32, 39, 1) (32, 39, 1)
22 (32, 39, 1) (32, 39, 1)
23 (32, 39, 1) (32, 39, 1)
24 (32, 39, 1) (32, 39, 1)
25 (32, 39, 1) (32, 39, 1)
26 (29, 39, 1) (29, 39, 1)

Therefore, I can tell that my dataset contains a total of 27 batches: 26 batches of 32 elements and a final batch of 29. Each element is an (input, output) pair in which both tensors have shape (39, 1).
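To double-check these numbers, here is a small plain-Python sketch of the same arithmetic. It is only an illustration: the total of 861 elements is inferred from the printed shapes (26 batches of 32 plus one of 29), and the helper names `split_window` and `batch_sizes` are hypothetical, not part of tf.data.

```python
window_size = 42
batch_size = 32
forecast_period = 3

def split_window(window):
    """Mimic _return_input_output on a single window (a plain list, not a tensor)."""
    return window[:-forecast_period], window[forecast_period:]

def batch_sizes(n_elements, size):
    """Batch sizes produced when batching n_elements without drop_remainder."""
    full, rest = divmod(n_elements, size)
    return [size] * full + ([rest] if rest else [])

# One window of 42 time steps is split into two overlapping sequences of 39 steps.
window = list(range(window_size))
x, y = split_window(window)
print(len(x), len(y))  # 39 39

# Element count inferred from the printed output: 26 full batches plus one of 29.
n_elements = 26 * 32 + 29
sizes = batch_sizes(n_elements, batch_size)
print(len(sizes), sizes[-1])  # 27 29
```

So steps_per_epoch=12 covers fewer than half of the 27 batches available in one pass over the data.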

As for the model:

import datetime
import os

from tensorflow import keras as k  # assumed alias; the original import is not shown

tf.keras.backend.clear_session()

time_steps = x_train.shape[1]

def _custom_mae(y_true, y_pred):
    # Keras calls metrics as fn(y_true, y_pred).
    # Slice the time axis (axis 1) so that only the last forecast_period steps are scored.
    _y_pred = y_pred[:, -forecast_period:, :]
    _y_true = y_true[:, -forecast_period:, :]
    mae = tf.losses.MAE(_y_true, _y_pred)
    return mae

model = k.models.Sequential([
    # k.layers.Bidirectional(k.layers.LSTM(units=100, return_sequences=True), input_shape=(None, 1)),
    k.layers.Conv1D(256, 16, strides=1, padding='same', input_shape=(None, 1)),
    k.layers.Conv1D(128, 16, strides=1, padding='same'),
    k.layers.TimeDistributed(k.layers.Dense(1))
])

_datetime = datetime.datetime.now().strftime("%Y%m%d-%H-%M-%S")
_log_dir = os.path.join(".", "logs", "fit4", _datetime)
tensorboard_cb = k.callbacks.TensorBoard(log_dir=_log_dir)

model.compile(loss="mae", optimizer=tf.optimizers.Adam(learning_rate=0.01), metrics=[_custom_mae])

# , validation_data=valid_ts_dataset

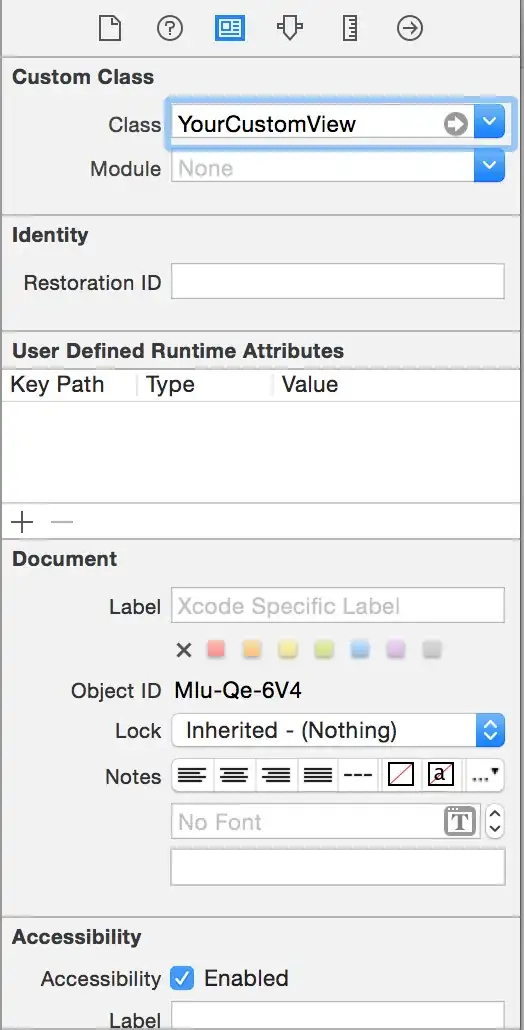

history = model.fit(train_ts_dataset, steps_per_epoch=12, epochs=1, callbacks=[tensorboard_cb])

I got the following output:

So, I can tell that I had 12 virtual epochs within a real epoch.

However, after looking into history.history I got the following:

I was expecting to see a list of 12 values for both the loss and _custom_mae.
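For reference, one possible way to collect a value after every step is sketched below. It is an illustration under assumptions, not an established answer: the class name `BatchLossHistory` is made up, random arrays stand in for train_ts, and the model is a toy single-layer network. It relies on the Keras callback hook on_train_batch_end, and on history.history holding one entry per epoch. As far as the Keras documentation describes it, the loss passed to that hook is the running average since the start of the epoch, not the loss of that single batch.

```python
import numpy as np
import tensorflow as tf

class BatchLossHistory(tf.keras.callbacks.Callback):
    """Hypothetical helper: stores the loss reported after every training batch."""

    def on_train_begin(self, logs=None):
        self.batch_losses = []

    def on_train_batch_end(self, batch, logs=None):
        logs = logs or {}
        # Typically the running mean of the loss over the current epoch so far.
        self.batch_losses.append(float(logs["loss"]))

# Random stand-in data: 96 sequences of 39 steps, batched by 8, i.e. 12 batches.
x = np.random.rand(96, 39, 1).astype("float32")
y = np.random.rand(96, 39, 1).astype("float32")
dataset = tf.data.Dataset.from_tensor_slices((x, y)).batch(8)

# Toy model, only here to make the sketch runnable.
model = tf.keras.Sequential([
    tf.keras.layers.Conv1D(1, 3, padding="same", input_shape=(None, 1)),
])
model.compile(loss="mae", optimizer="adam")

batch_history = BatchLossHistory()
history = model.fit(dataset, steps_per_epoch=12, epochs=1,
                    callbacks=[batch_history], verbose=0)

print(len(batch_history.batch_losses))  # one value per step
print(len(history.history["loss"]))     # one value per epoch
```

In this sketch the callback ends up with 12 values while history.history["loss"] has a single entry, which matches the behavior described above.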

Finally, I would love to see the results in TensorBoard.

Please let me know what I am missing and how to tackle my problem.

Thanks in advance for the support!