Example

sameer/student/land/ (compressed files) -> sameer/student/pro/ (uncompressed files)

sameer/employee/land/ (compressed files) -> sameer/employee/pro/ (uncompressed files)

In the above example, I need to read the files from every LAND folder across the different sub directories, process them, and place the results in the PRO folder within the same sub directory.

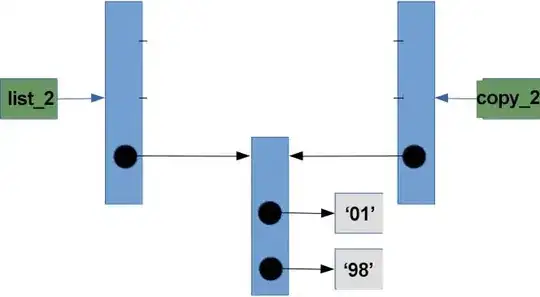

For this I have used two GCS nodes, one as the source and another as the sink.

In the GCS source I have provided the path gs://sameer/. It reads the files from all sub folders, merges them into one file, and places that file in the sink path.

Expected output: each file should be placed back in the sub directory it was fetched from.
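
To make the expected mapping concrete, here is a minimal Python sketch of the path logic I have in mind. It is not part of my pipeline and does no decompression; the function name `pro_path_for` and the file name `marks.csv.gz` are made up for illustration, and I am assuming the folders are literally named `land` and `pro`.

```python
from pathlib import PurePosixPath


def pro_path_for(land_object: str, land_dir: str = "land", pro_dir: str = "pro") -> str:
    """Return the output path for a file read from a land folder.

    The file keeps its parent sub directory (student, employee, ...);
    only the land folder segment is swapped for the pro folder.
    The file name itself is left unchanged (an assumption for this sketch).
    """
    parts = list(PurePosixPath(land_object).parts)
    dirs = parts[:-1]  # every segment except the file name
    if land_dir not in dirs:
        raise ValueError(f"{land_object!r} is not inside a {land_dir!r} folder")
    # Swap the land folder closest to the file, in case the name repeats higher up.
    idx = len(dirs) - 1 - dirs[::-1].index(land_dir)
    parts[idx] = pro_dir
    return "/".join(parts)


# Hypothetical file names, only to show the mapping.
print(pro_path_for("sameer/student/land/marks.csv.gz"))
print(pro_path_for("sameer/employee/land/marks.csv.gz"))
```

So a file read from the student LAND folder should end up in the student PRO folder, and likewise for employee, rather than everything being merged into a single output location.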

I can achieve the expected output by running the pipeline separately for each folder.

What I am hoping for is a way to do this in a single pipeline run. Is that possible?