I am new to machine learning and to GPU computing, which is why I was excited about RAPIDS and Dask.

I am running on an AWS EC2 p3.8xlarge instance, using Docker with the RAPIDS container (version 0.16). The instance has a 60GB EBS volume.

I have a data set with about 80 million records. As CSV it is about 27GB, and as Parquet (with slightly fewer features) it is 3.4GB. Both are stored on AWS S3.

When I try to use dask_cudf with a LocalCUDACluster, I always run into crashing workers. Core dumps are created and execution continues, spawning new workers and eventually filling all the storage on my machine.

Below is an example execution showing memory usage climbing, not respecting rmm_pool_size, and eventually crashing. I tried many values for rmm_pool_size, both above and below the total GPU memory (from what I understand, it should be able to spill to host memory).

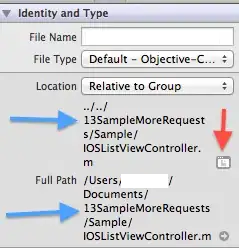

I am using the following initial code:

    from dask_cuda import LocalCUDACluster
    from distributed import Client, LocalCluster
    import dask_cudf

    cluster = LocalCUDACluster(
        rmm_pool_size="60GB"  # I've tried 64, 100, 150 etc. No luck
    )
    # I also tried setting rmm_managed_memory...
    # I know there are other parameters (ucx, etc) but don't know whether relevant and how to use
    client = Client(cluster)

    df = dask_cudf.read_parquet("s3://my-bucket/my-parquet-dir/")

I print memory usage:

    mem = df.memory_usage().compute()
    print(f"total dataset memory: {mem.sum() / 1024**3}GB")

Resulting in

    total dataset memory: 50.736539436504245GB
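For scale, here is a rough back-of-the-envelope comparison of that figure with the GPU memory on this instance. It is only a sketch: it assumes the published p3.8xlarge spec of 4 V100 GPUs with 16GB each and a perfectly even split of the data across GPUs. Neither is something I measured, and the variable names are just for illustration.

```python
# Dataset size as reported by df.memory_usage() in the post.
dataset_gb = 50.736539436504245

# ASSUMPTION: p3.8xlarge spec (4 x V100, 16GB each), not measured here.
n_gpus = 4
gpu_mem_gb = 16

# Total device memory across the instance.
total_gpu_gb = n_gpus * gpu_mem_gb

# ASSUMPTION: partitions are spread evenly across the GPUs.
per_gpu_share_gb = dataset_gb / n_gpus

# What would be left per GPU for intermediates and algorithm working memory.
headroom_gb = gpu_mem_gb - per_gpu_share_gb

print(f"total GPU memory: {total_gpu_gb}GB")
print(f"dataset share per GPU: {per_gpu_share_gb:.2f}GB")
print(f"headroom per GPU: {headroom_gb:.2f}GB")
```

Under those assumptions, the in-memory dataset alone would take up most of each GPU before any computation allocates anything.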

Then, whenever I execute my code (whether it is some EDA, running KNN, or pretty much anything else), I get this behavior / error.

I have read the docs and numerous blog posts (mainly from RAPIDS), and I have run through the notebooks, but I am still not able to get it to work. Am I doing something wrong? Will this not work with the setup I have?

Any help would be appreciated...