The images are loaded dynamically, so you have to use Selenium to scrape them. Here is the full code to do it:

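    import time

    import requests
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Chrome()
    driver.get('https://www.pokemon.com/us/pokedex/')
    time.sleep(4)  # give the dynamically loaded images time to appear

    # Every card has the class 'animating'; the last three matches are skipped
    li_tags = driver.find_elements(By.CLASS_NAME, 'animating')[:-3]

    for li_num, li in enumerate(li_tags, start=1):
        img_link = li.find_element(By.XPATH, './/img').get_attribute('src')
        # Absolute XPath to the name; it depends on the current page layout
        name = driver.find_element(
            By.XPATH, f'/html/body/div[4]/section[5]/ul/li[{li_num}]/div/h5').text

        # Download the image and save it under the Pokemon's name
        r = requests.get(img_link)
        with open(f"D:\\{name}.png", "wb") as f:
            f.write(r.content)

    driver.close()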

Output:

12 pokemon images. Here are the first 2 images:

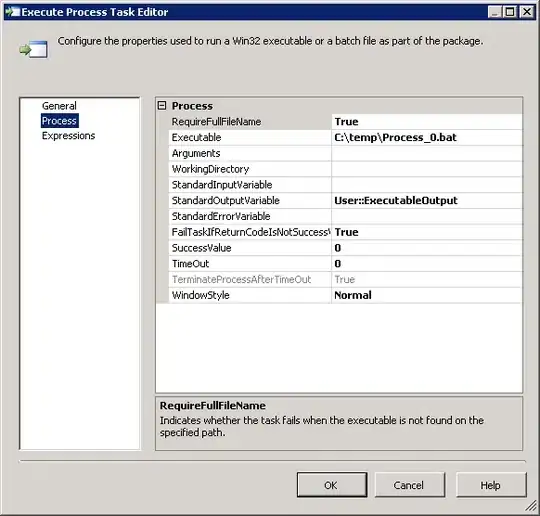

Image 1:

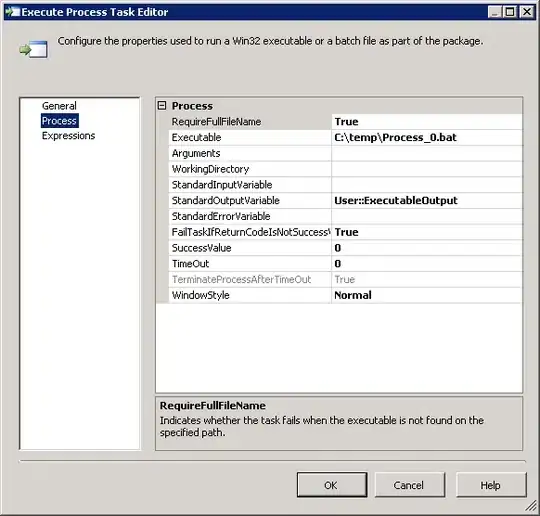

Image 2:
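One thing to watch when saving files as `{name}.png`: some names contain characters that Windows does not allow in file names (for example the colon in "Type: Null"). Here is a minimal sketch of a helper that builds a safe path. The function name `image_path` and the default folder `pokemon_images` are my own assumptions, not something the site or Selenium provides:

```python
import re
from pathlib import Path

# Characters that Windows does not allow in file names.
_INVALID_CHARS = re.compile(r'[<>:"/\\|?*]')


def image_path(name: str, out_dir: str = "pokemon_images") -> Path:
    """Return a safe .png path for a scraped name (hypothetical helper)."""
    # Replace forbidden characters, then trim spaces and dots at the ends.
    safe = _INVALID_CHARS.sub("_", name).strip(" .")
    # Fall back to a placeholder if nothing usable is left.
    return Path(out_dir) / f"{safe or 'unnamed'}.png"
```

You would then create the folder once with `Path("pokemon_images").mkdir(exist_ok=True)` and write with `open(image_path(name), "wb")` instead of the hard-coded `D:\\` path.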

I also noticed a Load more button at the bottom of the page. Clicking it loads more images, and after that further images load as you scroll down, so you have to keep scrolling until nothing new appears. If I am not wrong, there is a total of 893 images on the website. To scrape all 893 images, you can use this code:

    import time

    import requests
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Chrome()
    driver.get('https://www.pokemon.com/us/pokedex/')
    time.sleep(3)

    # Click the "Load more" button via JavaScript
    load_more = driver.find_element(By.XPATH, '//*[@id="loadMore"]')
    driver.execute_script("arguments[0].click();", load_more)

    # Scroll to the bottom and return the current page height
    scroll_js = ("window.scrollTo(0, document.body.scrollHeight);"
                 "return document.body.scrollHeight;")

    # Keep scrolling until the page height stops growing
    len_of_page = driver.execute_script(scroll_js)
    while True:
        last_count = len_of_page
        time.sleep(1.5)  # wait for the next batch of images to load
        len_of_page = driver.execute_script(scroll_js)
        if last_count == len_of_page:
            break

    # Every card has the class 'animating'; the last three matches are skipped
    li_tags = driver.find_elements(By.CLASS_NAME, 'animating')[:-3]

    for li_num, li in enumerate(li_tags, start=1):
        img_link = li.find_element(By.XPATH, './/img').get_attribute('src')
        # Absolute XPath to the name; it depends on the current page layout
        name = driver.find_element(
            By.XPATH, f'/html/body/div[4]/section[5]/ul/li[{li_num}]/div/h5').text

        # Download the image and save it under the Pokemon's name
        r = requests.get(img_link)
        with open(f"D:\\{name}.png", "wb") as f:
            f.write(r.content)

    driver.close()
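The scroll loop above runs forever if the page height never settles. As a rough sketch, the same "scroll until the height stops changing" idea can be pulled into a small function with an upper bound on the number of rounds. The name `scroll_until_stable` and the `max_rounds` limit are my own additions, and the function takes any callable that scrolls and returns the page height, so it does not depend on Selenium itself:

```python
import time
from typing import Callable


def scroll_until_stable(scroll_and_measure: Callable[[], int],
                        pause: float = 1.5,
                        max_rounds: int = 500) -> int:
    """Call scroll_and_measure until its result stops changing.

    Returns the last measured height. Gives up after max_rounds
    so a page that keeps growing cannot hang the script.
    """
    last_height = scroll_and_measure()
    for _ in range(max_rounds):
        time.sleep(pause)  # give the page time to load the next batch
        height = scroll_and_measure()
        if height == last_height:
            break  # nothing new was loaded
        last_height = height
    return last_height
```

With the driver from the script above, it could be called as `scroll_until_stable(lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight); return document.body.scrollHeight;"))`.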