I have written the following code to find the maximum temperature, but when I try to retrieve the output, the output files are created but are empty. I don't understand why this is happening. Can someone please help?

My runner code:

    import java.io.IOException;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.FileInputFormat;
    import org.apache.hadoop.mapred.FileOutputFormat;
    import org.apache.hadoop.mapred.JobClient;
    import org.apache.hadoop.mapred.JobConf;
    import org.apache.hadoop.mapred.TextInputFormat;
    import org.apache.hadoop.mapred.TextOutputFormat;

    public class MaxTemp {

        public static void main(String[] args) throws IOException {
            JobConf conf = new JobConf(MaxTemp.class);
            conf.setJobName("MaxTemp1");

            conf.setInputFormat(TextInputFormat.class);
            conf.setOutputFormat(TextOutputFormat.class);

            conf.setOutputKeyClass(Text.class);
            conf.setOutputValueClass(IntWritable.class);

            conf.setMapperClass(MaxTempMapper.class);
            conf.setCombinerClass(MaxTempReducer.class);
            conf.setReducerClass(MaxTempReducer.class);

            FileInputFormat.setInputPaths(conf, new Path(args[0]));
            FileOutputFormat.setOutputPath(conf, new Path(args[1]));

            JobClient.runJob(conf);
        }
    }

Mapper code:

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.MapReduceBase;
    import org.apache.hadoop.mapred.Mapper;
    import org.apache.hadoop.mapred.OutputCollector;
    import org.apache.hadoop.mapred.Reporter;

    public class MaxTempMapper extends MapReduceBase implements Mapper<LongWritable, Text, Text, IntWritable> {

        public void map(LongWritable key, Text value, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {
            String record = value.toString();
            String[] parts = record.split(",");
            output.collect(new Text(parts[0]), new IntWritable(Integer.parseInt(parts[1])));
        }
    }

My reducer code:

    import java.io.IOException;
    import java.util.Iterator;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.MapReduceBase;
    import org.apache.hadoop.mapred.OutputCollector;
    import org.apache.hadoop.mapred.Reducer;
    import org.apache.hadoop.mapred.Reporter;

    public class MaxTempReducer extends MapReduceBase implements Reducer<Text, IntWritable, Text, IntWritable> {

        public void reduce1(Text key, Iterator<IntWritable> values, OutputCollector<Text, IntWritable> output, Reporter reporter) throws IOException {
            int maxValue = 0;
            while (values.hasNext()) {
                maxValue = Math.max(maxValue, values.next().get());
            }
            output.collect(key, new IntWritable(maxValue));
        }

        @Override
        public void reduce(Text arg0, Iterator<IntWritable> arg1, OutputCollector<Text, IntWritable> arg2, Reporter arg3) throws IOException {
            // TODO Auto-generated method stub
        }
    }

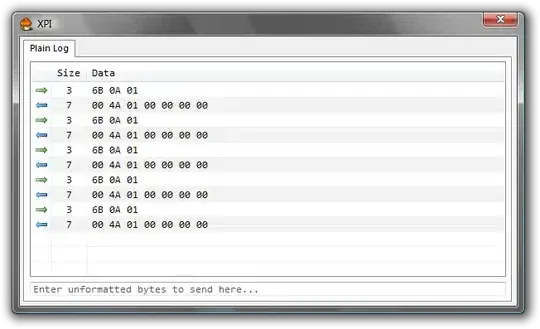

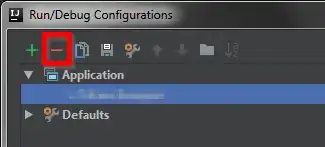

The output screenshots are attached above.
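
One thing that may explain the empty files, offered as a sketch rather than a confirmed diagnosis: with the old `org.apache.hadoop.mapred` API the framework calls `reduce(...)`, and in the reducer above that method is an empty stub, while the maximum logic lives in `reduce1(...)`, which nothing calls. Because the same class is also set as the combiner, the combiner would emit nothing either. The snippet below is a Hadoop-free stand-in for the loop that would need to sit inside `reduce(...)`; the class name `MaxTempSketch` and the helper `maxOf` are hypothetical names used only for illustration. It also starts from `Integer.MIN_VALUE` instead of `0`, on the assumption that the input may contain negative temperatures.

```java
import java.util.Arrays;
import java.util.Iterator;

public class MaxTempSketch {

    // Hypothetical helper: the same loop as in reduce1(), written against
    // plain Integers so it can run without Hadoop on the classpath.
    // Starting from Integer.MIN_VALUE (not 0) keeps all-negative inputs correct.
    static int maxOf(Iterator<Integer> values) {
        int maxValue = Integer.MIN_VALUE;
        while (values.hasNext()) {
            maxValue = Math.max(maxValue, values.next());
        }
        return maxValue;
    }

    public static void main(String[] args) {
        // Sample values are made up for illustration only.
        System.out.println(maxOf(Arrays.asList(31, 45, 28).iterator()));   // prints 45
        System.out.println(maxOf(Arrays.asList(-12, -3, -20).iterator())); // prints -3
    }
}
```

In the actual reducer, the equivalent change would be to put this loop, followed by the `output.collect(key, new IntWritable(maxValue))` call, in the method annotated with `@Override` and remove `reduce1`.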