This question has already been addressed, but I am not satisfied with the answers given.

Reading From Local Folder (3 GB)

Reading From HDFS Folder (3 GB)

As you can see, the longest-running job (1) takes roughly the same processing time in both cases. This is quite counter-intuitive: you would expect the distributed HDFS setup to run significantly faster than reading from a local folder.

I am using an AWS EMR cluster (the latest version as of the posting date).

I would like concrete, specific things to check for this particular benchmark, not just general pointers to Spark theory, architecture, and settings.

EDIT

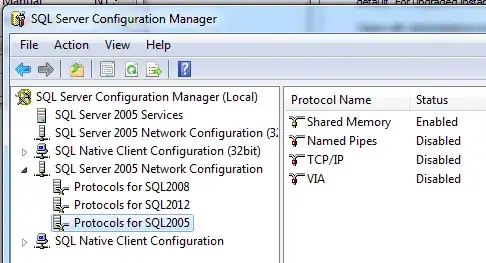

Here is the file information on HDFS. I have 12 of these files (one per month), each split into two blocks. From my understanding, since I have 2 datanodes, the files are split across these two datanodes (which also correspond to the Spark worker cores).
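To make the block arithmetic concrete, here is a rough sketch of the expected layout. It only uses the figures from this post (about 3 GB total, 12 monthly files, 2 datanodes) plus one assumption: the Hadoop default block size (`dfs.blocksize`) of 128 MB, which I have not confirmed for this cluster. It also ignores replication and assumes the blocks are spread evenly, which the NameNode does not guarantee. The helper names are hypothetical, for illustration only.

```python
import math

# Figures from the post: ~3 GB in total, 12 monthly files, 2 datanodes.
TOTAL_MB = 3 * 1024
NUM_FILES = 12
NUM_DATANODES = 2
# Assumption: Hadoop's default HDFS block size (dfs.blocksize) of 128 MB.
BLOCK_SIZE_MB = 128


def blocks_per_file(file_mb: float, block_mb: int = BLOCK_SIZE_MB) -> int:
    """HDFS blocks needed for one file; the last block may be partially filled."""
    return math.ceil(file_mb / block_mb)


def block_layout(total_mb: float, num_files: int, num_datanodes: int,
                 block_mb: int = BLOCK_SIZE_MB) -> dict:
    """Estimate block counts, assuming equal-sized files (hypothetical helper)."""
    file_mb = total_mb / num_files
    per_file = blocks_per_file(file_mb, block_mb)
    total_blocks = per_file * num_files
    return {
        "file_mb": file_mb,
        "blocks_per_file": per_file,
        "total_blocks": total_blocks,
        # Idealized: ignores replication and assumes perfectly even placement.
        "blocks_per_datanode": total_blocks / num_datanodes,
    }


if __name__ == "__main__":
    layout = block_layout(TOTAL_MB, NUM_FILES, NUM_DATANODES)
    for key, value in layout.items():
        print(f"{key}: {value}")
```

Under these assumptions each file is about 256 MB, which gives 2 blocks per file (matching what HDFS reports), 24 blocks in total, and roughly 12 per datanode.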

Thanks in advance.