Somehow as a follow up on the question Creating confidence intervals for regression curve in GLMM using Bootstrapping, I am interested in getting the correct values of a regression curve and the associated confidence interval curves.

Consider a case where in a GLMM, there is one response variable, two continuous fixed effects and one random effect. Here is some fake data:

library (dplyr)

set.seed (1129)

x1 <- runif(100,0,1)

x2 <- rnorm(100,0.5,0.4)

f1 <- gl(n = 5,k = 20)

rnd1<-rnorm(5,0.5,0.1)

my_data <- data.frame(x1=x1, x2=x2, f1=f1)

modmat <- model.matrix(~x1+x2, my_data)

fixed <- c(-0.12,0.35,0.09)

y <- (modmat%*%fixed+rnd1)

my_data$y <- ((y - min (y))/max(y- min (y))) %>% round (digits = 1)

rm (y)

The GLMM that I fit looks like this:

m1<-glmer (y ~x1+x2+(1|f1), my_data, family="binomial")

summary (m1)

Generalized linear mixed model fit by maximum likelihood (Laplace Approximation) ['glmerMod'] Family: binomial ( logit ) Formula: y ~ x1 + x2 + (1 | f1) Data: my_data AIC BIC logLik deviance df.resid 65.7 76.1 -28.8 57.7 96 Scaled residuals: Min 1Q Median 3Q Max -8.4750 -0.7042 -0.0102 1.5904 14.5919 Random effects: Groups Name Variance Std.Dev. f1 (Intercept) 1.996e-10 1.413e-05 Number of obs: 100, groups: f1, 5 Fixed effects: Estimate Std. Error z value Pr(>|z|) (Intercept) -9.668 2.051 -4.713 2.44e-06 *** x1 12.855 2.659 4.835 1.33e-06 *** x2 4.875 1.278 3.816 0.000136 *** --- Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1 Correlation of Fixed Effects: (Intr) x1 x1 -0.970 x2 -0.836 0.734 convergence code: 0 boundary (singular) fit: see ?isSingular

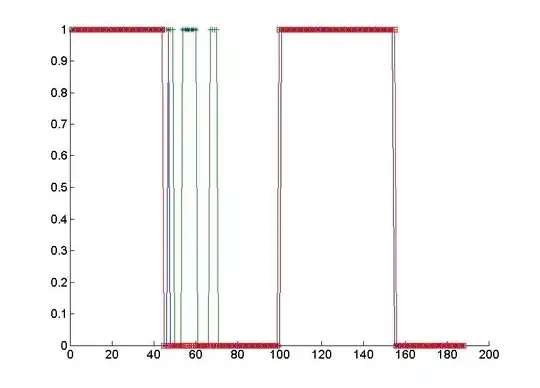

Plotting y vs x1:

plot (y~x1, my_data)

It should be possible to get a regression curve from the summary of m1. I have learned that I need to reverse the link-function (in this case, "logit"):

y = 1/(1+exp(-(Intercept+b*x1+c*x2)))

In order to plot a regression curve of x1 in a two-dimensional space, I set x2 = mean(x2) in the formula (which also seems important - the red line in the following plots ignores x2, apparently leading to considerable bias). The regression line:

xx <- seq (from = 0, to = 1, length.out = 100)

yy <- 1/(1+exp(-(-9.668+12.855*xx+4.875*mean(x2))))

yyy <- 1/(1+exp(-(-9.668+12.855*xx)))

lines (yy ~ xx, col = "blue")

lines (yyy~ xx, col = "red")

I think, the blue line looks not so good (and the red line worse, of course). So as a side-question: is y = 1/(1+exp(-(Intercept+b*x1+c*x2))) always the right choice as a back-transformation of the logit-link? I am asking because I found this https://sebastiansauer.github.io/convert_logit2prob/, which made me suspicious. Or is there another reason for the model not to fit so well? Maybe my data creation process is somewhat 'bad'.

What I need now is to add the 95%-confidence interval to the curve. I think that Bootstrapping using the bootMer function should be a good approach. However, all examples that I found were on models with one single fixed effect. @Jamie Murphy asked a similar question, but he was interested in models containing a continuous and a categorical variable as fixed effects here: Creating confidence intervals for regression curve in GLMM using Bootstrapping

But when it comes to models with more than one continuous variables as fixed effects, I get lost. Perhaps someone can help solve this issue - possibly with a modification of the second part of this tutorial: https://www.r-bloggers.com/2015/06/confidence-intervals-for-prediction-in-glmms/