I have a job in AWS Glue that fails with:

An error occurred while calling o567.pyWriteDynamicFrame. Job aborted due to stage failure: Task 168 in stage 31.0 failed 4 times, most recent failure: Lost task 168.3 in stage 31.0 (TID 39474, ip-10-0-32-245.ec2.internal, executor 102): ExecutorLostFailure (executor 102 exited caused by one of the running tasks) Reason: Container killed by YARN for exceeding memory limits. 22.2 GB of 22 GB physical memory used. Consider boosting spark.yarn.executor.memoryOverhead or disabling yarn.nodemanager.vmem-check-enabled because of YARN-4714.

The main message is Container killed by YARN for exceeding memory limits. 22.2 GB of 22 GB physical memory used.

I have used broadcasts for the small dfs and salt technique for bigger tables.

The input consists of 75GB of JSON files to process.

I have used a a grouping of 32MB for the input files:

additional_options={

'groupFiles': 'inPartition',

'groupSize': 1024*1024*32,

},

The output file is written with 256 partitions:

output_df = output_df.coalesce(256)

In AWS Glue I launch the job with 60 G.2X executors = 60 x (8 vCPU, 32 GB of memory, 128 GB disk).

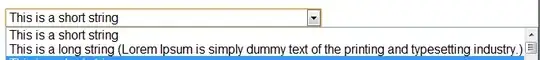

Below is the plot representing the metrics for this job. From that, the data don't look skewed... Am I wrong?

Any advice to successfully run this is welcome!