Sorry for the super long question. I had to add enough images to explain my point and demonstrate the different edge cases.

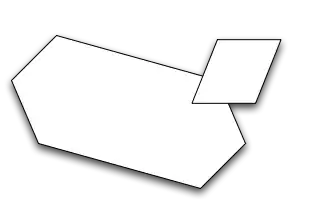

I am trying to detect speed bump signs using OpenCV in Python. The signs look like these:

(These are just examples I downloaded from the Internet; the segmented contours shown below are not from these images.)

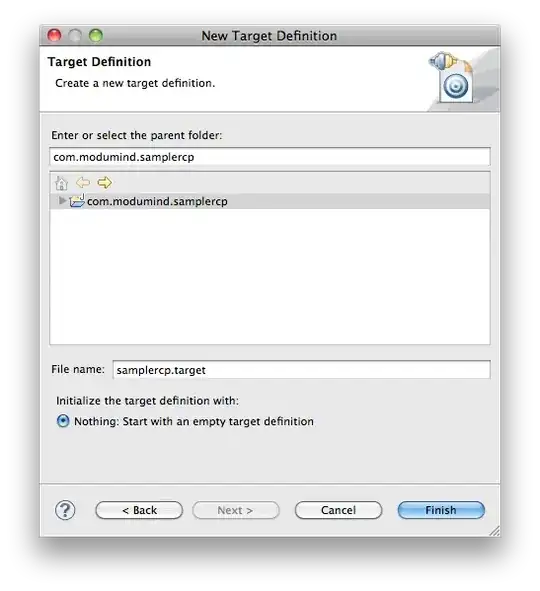

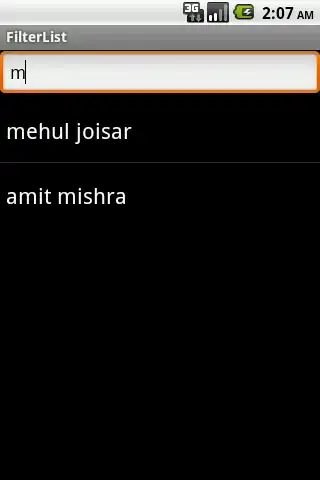

I have managed to extract the internal contours in these signs and they look like these:

Some unwanted triangular signs also pass through my triangle detector:

and these have extracted contours that look like:

So my aim is to compare the extracted contours with some standard images (that I have already saved). I am using the matchShapes function in OpenCV for this purpose.

The standard images that I am using, against which all candidate images are compared, are (multiple bumps on the left, single bump on the right):

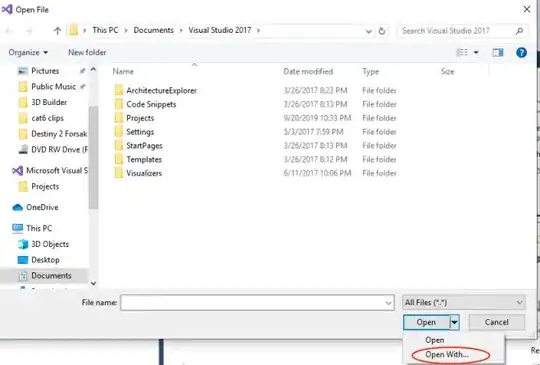

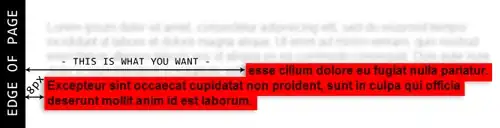

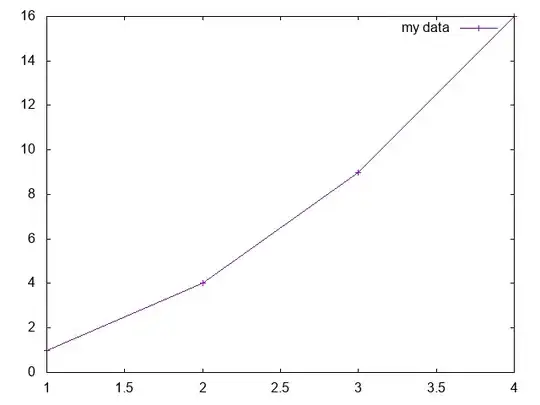

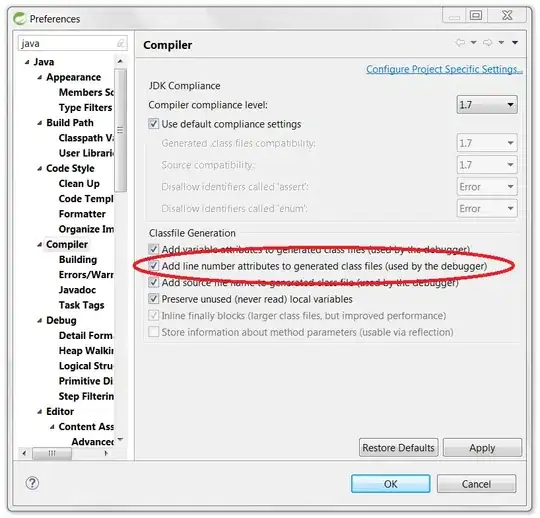

The problem I am facing is that the output of matchShapes varies a lot and I can't make sense of it. The following horizontally stacked images show the target contour (left) compared with one of the standards shown above (right); the value in the lower-left corner is the output of the following code:

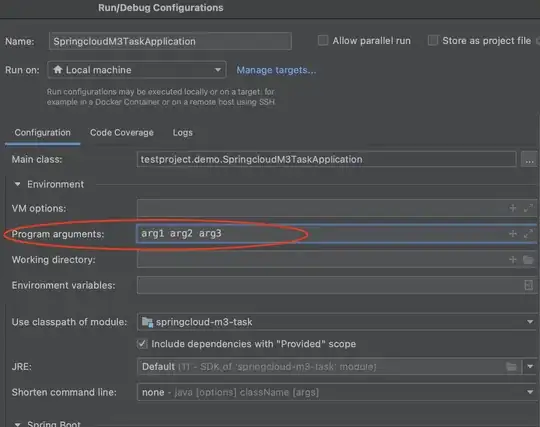

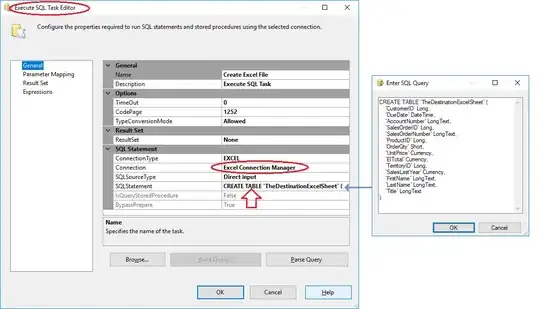

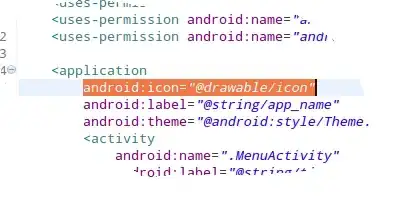

    import cv2

    def compare_rois(standard, image):
        # Both inputs are single-channel images; non-zero pixels count as foreground.
        cnt1, _ = cv2.findContours(standard, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
        cnt2, _ = cv2.findContours(image, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
        # Compare only the largest external contour of each image.
        biggest1 = max(cnt1, key=cv2.contourArea)
        biggest2 = max(cnt2, key=cv2.contourArea)
        return cv2.matchShapes(biggest1, biggest2, cv2.CONTOURS_MATCH_I1, 0.0)

    compare_rois(single_bump_template_inverted, image)
    compare_rois(multiple_bumps_template_inverted, image)
    # not displaying the stacking and adding text

Here are some examples of the output of the above code:

From the above images, I can say that the values (minimum of the 2 comparisons) should range somewhere between 0 and 1.6 for classification as "speed bump detected"...
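The decision rule implied here can be written down as a small sketch. The function name `classify` and the example scores are hypothetical; only the 1.6 threshold and the "minimum of the two comparisons" idea come from the observation above.

```python
def classify(score_single, score_multiple, threshold=1.6):
    """Return (is_bump, best_score) using the smaller of the two matchShapes scores."""
    # A lower matchShapes score means a closer match, so keep the better template.
    best = min(score_single, score_multiple)
    return best <= threshold, best

# Hypothetical scores, not taken from the dataset.
print(classify(0.42, 2.10))
print(classify(3.70, 2.95))
```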

But the following images contradict this observation. Sometimes misdetected blobs have values that fall in this range, other times actual true detections fall outside it, and worst of all, shapes that are supposed to be very different from the bumps also make it into the range (take the 'arrow' or the 'e', for example):

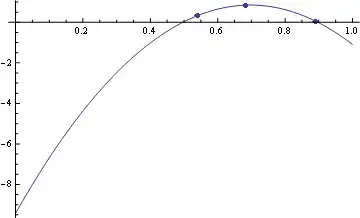

Why is this happening? Please note that the ROI images all have different sizes: some are very small and some are large. matchShapes is supposed to be invariant to the size of the objects and should be able to detect the shape regardless, at least that is what this site says:

> As mentioned earlier, all 7 Hu Moments are invariant under translations (move in x or y direction), scale and rotation.

So my question is: how can I make sure that the return values I am getting from matchShapes are in the correct range? Are there any transformations that I can apply to the input images that would "help" matchShapes "see" the difference better?

This SO question is similar to mine and two of the comments under it seem to indicate that some transformations might be required...

> matchShapes() uses Hu invariants; descriptions of shapes that are rotation/translation/scale-invariant. Even though your result seems weird, the reality is that coin shape is very ellipsoidal, more so than the shirt, which means that if you blew them up all to be the same size, the ellipse from the coin has more "mass" (area) farther away from the centre than the shirt does. Ergo, the shirt is more similar for matchShapes()

> Solved it, just made a function that stretches the contour to have a square bounding box, so that coins viewed at an angle appear as they would face-on. This could work for more complicated shapes...

I am not sure what the OP did in this case and whether doing the same would help in my case, so I asked this question.
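One possible reading of "stretches the contour to have a square bounding box" is sketched below. This is a guess at what that comment describes, not the commenter's actual code; the function name `stretch_to_square` and the `side` parameter are hypothetical.

```python
import numpy as np

def stretch_to_square(contour, side=100.0):
    """Rescale x and y independently so the contour's bounding box becomes side x side."""
    pts = contour.reshape(-1, 2).astype(np.float32)
    mins = pts.min(axis=0)
    extent = pts.max(axis=0) - mins
    extent[extent == 0] = 1.0  # avoid dividing by zero for degenerate contours
    scaled = (pts - mins) / extent * side
    # Return the (N, 1, 2) layout that OpenCV contour functions expect.
    return scaled.reshape(-1, 1, 2)

# Hypothetical 40 x 10 rectangle contour in OpenCV's (N, 1, 2) layout.
rect = np.array([[[0, 0]], [[40, 0]], [[40, 10]], [[0, 10]]], dtype=np.int32)
square = stretch_to_square(rect)
print(square.reshape(-1, 2))
```

Both the template contour and the ROI contour would need to go through the same function before being passed to matchShapes. Note that this discards the aspect ratio, so two shapes that differ mainly in width-to-height ratio would become harder to tell apart.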

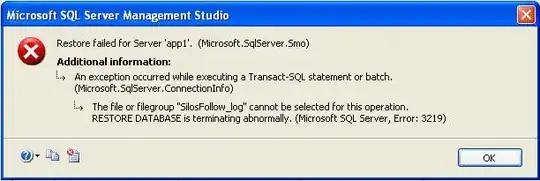

Should I try the other two implemented methods, CONTOURS_MATCH_I2 and CONTOURS_MATCH_I3? How exactly are they different? The official documentation has only this much to say:

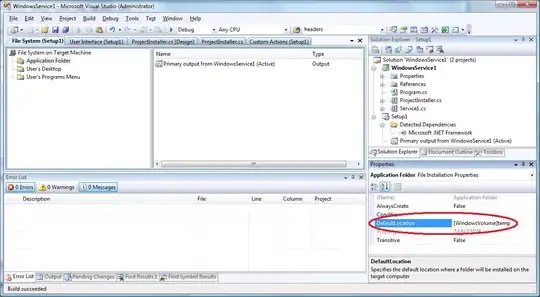
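For reference, the three formulas from the OpenCV documentation can be sketched in plain Python. All three start from the signed log of each Hu moment, m = sign(h) · log|h|: I1 sums |1/mA − 1/mB|, I2 sums |mA − mB|, and I3 takes the maximum of |mA − mB| / |mA|. The base-10 logarithm and the `eps` cutoff that skips near-zero moments are assumptions modelled on my understanding of the implementation, and the function names are hypothetical.

```python
import math

def signed_log(h):
    """m = sign(h) * log10(|h|), the transform shared by all three methods."""
    return (1.0 if h > 0 else -1.0) * math.log10(abs(h))

def match_scores(hu_a, hu_b, eps=1e-5):
    """Return (I1, I2, I3) for two sequences of seven Hu moments."""
    i1 = i2 = i3 = 0.0
    for ha, hb in zip(hu_a, hu_b):
        # Assumption: moments too close to zero are skipped entirely.
        if abs(ha) <= eps or abs(hb) <= eps:
            continue
        ma, mb = signed_log(ha), signed_log(hb)
        if ma == 0.0 or mb == 0.0:
            continue  # |h| == 1 would make 1/m undefined
        i1 += abs(1.0 / ma - 1.0 / mb)
        i2 += abs(ma - mb)
        i3 = max(i3, abs(ma - mb) / abs(ma))
    return i1, i2, i3

# Hypothetical Hu moments where only the first one is above the cutoff.
print(match_scores([0.1, 0, 0, 0, 0, 0, 0], [0.01, 0, 0, 0, 0, 0, 0]))
```

With OpenCV, the inputs could be obtained as `cv2.HuMoments(cv2.moments(contour)).flatten()`. Under this sketch, any Hu moment below the cutoff contributes nothing, so for small or simple shapes the score may depend on only the first one or two moments.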

You can download the entire dataset from here and run the following code in the same working directory as the unzipped "dataset" folder:

    import os

    import cv2
    import numpy as np

    dataset = "dataset"
    folders = os.listdir(dataset)
    folders.remove("standard")

    # Load the two templates as grayscale images.
    single = cv2.imread(os.path.join(dataset, "standard", "single.jpg"), 0)
    multiple = cv2.imread(os.path.join(dataset, "standard", "multiple.jpg"), 0)

    def compare_rois(roi, standard):
        cnt1, _ = cv2.findContours(standard, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
        cnt2, _ = cv2.findContours(roi, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
        # Compare only the largest external contour of each image.
        biggest1 = max(cnt1, key=cv2.contourArea)
        biggest2 = max(cnt2, key=cv2.contourArea)
        return cv2.matchShapes(biggest1, biggest2, cv2.CONTOURS_MATCH_I1, 0.0)

    for folder in folders:
        path_to_folder = os.path.join(dataset, folder)
        roi = cv2.imread(os.path.join(path_to_folder, "image.jpg"), 0)

        ret1 = compare_rois(roi, single)
        ret2 = compare_rois(roi, multiple)

        # Resize the templates to the ROI size so they can be stacked side by side.
        single_resized = cv2.resize(single, roi.shape[::-1], interpolation=cv2.INTER_NEAREST)
        multiple_resized = cv2.resize(multiple, roi.shape[::-1], interpolation=cv2.INTER_NEAREST)

        stacked1 = np.hstack((roi, single_resized))
        stacked2 = np.hstack((roi, multiple_resized))

        # Write each score near the lower-left corner of its stacked image.
        h, w = stacked1.shape[:2]
        cv2.putText(stacked1, "{:.3f}".format(ret1), (10, h - h//w - 10), 0, 0.5, (255, 255, 255), 1)
        h, w = stacked2.shape[:2]
        cv2.putText(stacked2, "{:.3f}".format(ret2), (10, h - h//w - 10), 0, 0.5, (255, 255, 255), 1)

        cv2.imshow("roi", roi)
        cv2.imshow("compared with single", stacked1)
        cv2.imshow("compared with multiple", stacked2)

        # Press any key for the next image, or 'q' to quit.
        if cv2.waitKey(0) == ord('q'):
            break

    cv2.destroyAllWindows()