I am transferring and rechunking data from netCDF to Zarr. The process is slow and uses only a small fraction of the available CPUs. I have tried several different configurations; some seem to do slightly better, but none has worked well. Does anyone have tips for making this run more efficiently?

On the last attempt (single machine, distributed scheduler, using threads), and possibly on some or all of the earlier attempts, the logs showed this message:

```
distributed.core - INFO - Event loop was unresponsive in Worker for 10.05s. This is often caused by long-running GIL-holding functions or moving large chunks of data.
```

I previously ran into errors from running out of memory, so I write the Zarr store in pieces using the `stepwise_to_zarr` function below:

```python
import numpy as np
import xarray as xr
import zarr


def stepwise_to_zarr(dataset, step_dim, step_size, chunks, out_loc, group):
    """Rechunk `dataset` and write it to Zarr in slabs along `step_dim`."""
    start = dataset[step_dim].min().values
    end = dataset[step_dim].max().values

    # Slab edges: start, start + step_size, ..., plus `end` if not already included
    iis = np.arange(start, end, step_size)
    if end > iis[-1]:
        iis = np.append(iis, end)

    lon = dataset.get_index(step_dim)
    first = True

    for i in range(1, len(iis)):
        lower, upper = iis[i - 1], iis[i]
        # The last slab is closed on the right so that `end` itself is written
        if upper >= end:
            lon_list = [l for l in lon if lower <= l <= upper]
        else:
            lon_list = [l for l in lon if lower <= l < upper]

        # Select along step_dim (the original hard-coded `longitude=` here)
        sub = dataset.sel({step_dim: lon_list})
        rechunked_sub = sub.chunk(chunks)

        write_sync = zarr.ThreadSynchronizer()
        if first:
            # First slab creates (or overwrites) the store
            rechunked_sub.to_zarr(out_loc, group=group, consolidated=True,
                                  synchronizer=write_sync, mode="w")
            first = False
        else:
            # Later slabs are appended along step_dim
            rechunked_sub.to_zarr(out_loc, group=group, consolidated=True,
                                  synchronizer=write_sync, append_dim=step_dim)


chunks = {'time': 8760, 'latitude': 21, 'longitude': 20}

ds = xr.open_mfdataset("path to data", parallel=True, combine="by_coords")

stepwise_to_zarr(ds, step_size=10, step_dim="longitude",
                 chunks=chunks, out_loc="path to output", group="group name")
```
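The slab boundaries that `stepwise_to_zarr` produces can be checked in isolation, without xarray or Zarr. The sketch below reproduces the same binning (half-open slabs, with the last one closed on the right) on a plain NumPy array. The helper name `longitude_slabs` is hypothetical, and the 0.25° grid from 0 to 359.75 is an assumption: the 1440 longitude points suggest such a grid, but the question does not state it.

```python
import numpy as np


def longitude_slabs(lon, step_size):
    """Hypothetical helper: split coordinate values into slabs of width
    `step_size`, mirroring the boundary logic in stepwise_to_zarr."""
    lon = np.asarray(lon)
    start, end = lon.min(), lon.max()
    edges = np.arange(start, end, step_size)
    if end > edges[-1]:
        edges = np.append(edges, end)

    slabs = []
    for lower, upper in zip(edges[:-1], edges[1:]):
        if upper >= end:
            # Last slab: include the right edge so `end` is not dropped
            mask = (lon >= lower) & (lon <= upper)
        else:
            # Other slabs: half-open interval [lower, upper)
            mask = (lon >= lower) & (lon < upper)
        slabs.append(lon[mask])
    return slabs


# Assumed 0.25-degree grid: 1440 points from 0.0 to 359.75
lon = np.arange(1440) * 0.25
slabs = longitude_slabs(lon, step_size=10)
print(len(slabs), [len(s) for s in slabs[:3]], len(slabs[-1]))  # 36 [40, 40, 40] 40
```

Under this assumed grid every slab holds 40 longitudes, i.e. exactly two of the 20-wide target chunks, so each append starts on a chunk boundary.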

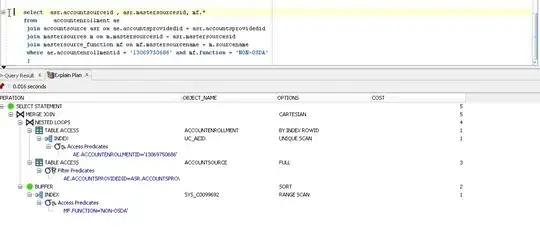

In the plot above, the drop from ~6% to ~0.5% utilization seems to coincide with the first "batch" of 10 degrees of longitude being finished.

Background info:

- I am using a single GCE instance of 32 vCPUs and 256 GB memory.

- The data is about 600 GB, spread over about 150 netCDF files.

- The data is in GCS and I am using Cloud Storage FUSE to read and write data.

- I am rechunking the data from chunk sizes `{'time': 1, 'latitude': 521, 'longitude': 1440}` to `{'time': 8760, 'latitude': 21, 'longitude': 20}`.
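To put this rechunking in numbers, the sketch below counts how many source chunks overlap each target chunk, and vice versa, and estimates the in-memory size of each chunk. The dtype is not given in the question; 4-byte float32 values are assumed, so the megabyte figures are illustrative only.

```python
import math

src = {'time': 1, 'latitude': 521, 'longitude': 1440}
dst = {'time': 8760, 'latitude': 21, 'longitude': 20}
itemsize = 4  # assumption: float32 values


def n_elements(chunks):
    """Number of array elements in one chunk of the given shape."""
    return math.prod(chunks.values())


# Each source chunk is one full lat/lon field for a single time step, so a
# target chunk spanning 8760 time steps overlaps 8760 source chunks.
src_per_dst = math.ceil(dst['time'] / src['time'])

# One source chunk (a full 521 x 1440 field) is split across this many target chunks.
dst_per_src = (math.ceil(src['latitude'] / dst['latitude'])
               * math.ceil(src['longitude'] / dst['longitude']))

print(src_per_dst, dst_per_src)  # 8760 1800
print(n_elements(src) * itemsize / 1e6, "MB per source chunk")
print(n_elements(dst) * itemsize / 1e6, "MB per target chunk")
```

Each target chunk therefore draws on 8,760 source chunks, and each source chunk is scattered across 1,800 target chunks (25 along latitude times 72 along longitude).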

I have tried:

- Using the default multiprocessing scheduler

- Using the distributed scheduler on a single machine (https://docs.dask.org/en/latest/setup/single-distributed.html), both with `processes=True` and `processes=False`.

- Both the distributed scheduler and the default multiprocessing scheduler, while also setting environment variables to avoid oversubscribing threads, as described in the best practices (https://docs.dask.org/en/latest/array-best-practices.html?highlight=export#avoid-oversubscribing-threads):

  ```shell
  export OMP_NUM_THREADS=1
  export MKL_NUM_THREADS=1
  export OPENBLAS_NUM_THREADS=1
  ```
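The same thread limits can also be set from inside the Python script rather than the shell. A minimal sketch, assuming it runs before NumPy, xarray or Dask are first imported, since the OpenMP, MKL and OpenBLAS runtimes typically read these variables when they are initialized. Assigning to `os.environ` also updates the process environment, so worker processes started afterwards (for example by a local cluster with `processes=True`) inherit the values.

```python
import os
import subprocess
import sys

# Limit BLAS/OpenMP thread pools to one thread each.
# Run this before numpy, xarray or dask is imported for the first time.
THREAD_VARS = ("OMP_NUM_THREADS", "MKL_NUM_THREADS", "OPENBLAS_NUM_THREADS")
for var in THREAD_VARS:
    os.environ[var] = "1"

# Check that a freshly started child process inherits the settings.
child = subprocess.run(
    [sys.executable, "-c",
     "import os; print(','.join(os.environ[v] for v in "
     "('OMP_NUM_THREADS', 'MKL_NUM_THREADS', 'OPENBLAS_NUM_THREADS')))"],
    capture_output=True, text=True, check=True,
)
print(child.stdout.strip())  # 1,1,1
```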