I quantize a Keras h5 model (TF 1.13 ; keras_vggface model) with Tensorflow 1.15.3, to use it with an NPU. The code I used for conversion is:

converter = tf.lite.TFLiteConverter.from_keras_model_file(saved_model_dir + modelname)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

converter.inference_input_type = tf.int8 # or tf.uint8

converter.inference_output_type = tf.int8 # or tf.uint8

tflite_quant_model = converter.convert()

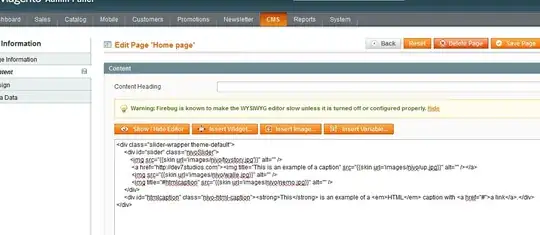

The quantized model I get looks good on first sight. Input type of layers are int8, filter are int8, bias is int32, and output is int8.

However, the model has a quantize layer after the input layer and the input layer is float32 [See image below]. But it seems that the NPU needs also the input to be int8.

Is there a way to fully quantize without a conversion layer but with also int8 as input?

As you see above, I used the :

converter.inference_input_type = tf.int8

converter.inference_output_type = tf.int8

EDIT

SOLUTION from user dtlam

Even though the model still does not run with the google NNAPI, the solution to quantize the model with in and output in int8 using either TF 1.15.3 or TF2.2.0 is, thanks to delan:

...

converter = tf.lite.TFLiteConverter.from_keras_model_file(saved_model_dir + modelname)

def representative_dataset_gen():

for _ in range(10):

pfad='pathtoimage/000001.jpg'

img=cv2.imread(pfad)

img = np.expand_dims(img,0).astype(np.float32)

# Get sample input data as a numpy array in a method of your choosing.

yield [img]

converter.representative_dataset = representative_dataset_gen

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.representative_dataset = representative_dataset_gen

converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

converter.experimental_new_converter = True

converter.target_spec.supported_types = [tf.int8]

converter.inference_input_type = tf.int8

converter.inference_output_type = tf.int8

quantized_tflite_model = converter.convert()

if tf.__version__.startswith('1.'):

open("test153.tflite", "wb").write(quantized_tflite_model)

if tf.__version__.startswith('2.'):

with open("test220.tflite", 'wb') as f:

f.write(quantized_tflite_model)