I am trying to warp the following image to get a fronto-parallel view of the bigger wall, the one on the left side of the image. However, I can't decide which points to pass to `cv2.getPerspectiveTransform()` to get a matrix that, when applied, yields the desired result.
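For reference, `cv2.getPerspectiveTransform()` takes four source/destination point pairs (no three of them collinear) and solves a linear system for the 3×3 homography, with the bottom-right entry fixed to 1. The NumPy-only sketch below rebuilds that 8×8 system so it is visible that `pts1[i]` must correspond to `pts2[i]`. The helper names `homography_from_4pts` and `apply_homography` are hypothetical, and the result should match OpenCV's matrix up to floating-point error. The example assumes the clicks go top-left, top-right, bottom-right, bottom-left, which matches the points listed in the question.

```python
import numpy as np


def homography_from_4pts(src, dst):
    """Solve for H (3x3, H[2, 2] = 1) such that dst ~ H @ [x, y, 1] for each pair."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    A = np.zeros((8, 8))
    b = np.zeros(8)
    for i, ((x, y), (u, v)) in enumerate(zip(src, dst)):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear in h
        A[2 * i] = [x, y, 1, 0, 0, 0, -u * x, -u * y]
        b[2 * i] = u
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1)
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -v * x, -v * y]
        b[2 * i + 1] = v
    h = np.linalg.solve(A, b)
    return np.append(h, 1.0).reshape(3, 3)


def apply_homography(H, pts):
    """Map an (N, 2) array of points through H, including the perspective divide."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


# Example: the clicked points from the question, sent to a 56 x 165 rectangle
# anchored at point 0 (56 and 165 are the rounded top- and left-edge lengths)
src = [[349, 445], [396, 415], [388, 596], [338, 610]]
dst = [[349, 445], [405, 445], [405, 610], [349, 610]]
H = homography_from_4pts(src, dst)
print(np.round(apply_homography(H, src), 3))
```

Because the system is exactly determined by four pairs, any four non-degenerate correspondences give some homography; whether the output looks fronto-parallel depends on the destination rectangle having the wall's true aspect ratio, which the side lengths in the image only approximate.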

The code I am using is:

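```python
import cv2
import numpy as np

# Four clicked source points; np.int was removed in NumPy 1.24, so use np.int32
circles = np.zeros((4, 2), np.int32)
counter = 0


def mousePoints(event, x, y, flags, params):
    global counter
    # Record left-clicks until four points have been collected
    if event == cv2.EVENT_LBUTTONDOWN and counter < 4:
        circles[counter] = x, y
        counter = counter + 1
        print(circles)


img = cv2.imread("DSC_0273.JPG")
img = cv2.resize(img, (1500, 1000))
q = 0

cv2.namedWindow("Original Image ")
cv2.setMouseCallback("Original Image ", mousePoints)

while True:
    if counter == 4:
        q = q + 1
        height1, width1 = 1080, 1920  # size of the output canvas
        pts1 = np.float32([circles[0], circles[1], circles[2], circles[3]])

        # Target rectangle size: top edge (point 0 -> 1) and left edge (point 0 -> 3)
        width = np.sqrt((circles[1][0] - circles[0][0])**2 + (circles[1][1] - circles[0][1])**2)
        height = np.sqrt((circles[3][1] - circles[0][1])**2 + (circles[3][0] - circles[0][0])**2)
        width = int(np.round(width))
        height = int(np.round(height))

        # Keep point 0 fixed; send the others to the corners of an axis-aligned rectangle
        x1, y1 = circles[0]
        pts2 = np.float32([[x1, y1], [x1 + width, y1], [x1 + width, y1 + height], [x1, y1 + height]])

        matrix = cv2.getPerspectiveTransform(pts1, pts2)
        if q == 1:
            print(matrix.shape)
            print(matrix)

        imgOutput = cv2.warpPerspective(img, matrix, (width1, height1))
        cv2.imshow("Output Image ", imgOutput)

    # Mark the clicked points; the center is passed as a tuple of plain Python ints
    for x in range(0, 4):
        cv2.circle(img, (int(circles[x][0]), int(circles[x][1])), 3, (0, 255, 0), cv2.FILLED)

    cv2.imshow("Original Image ", img)
    if cv2.waitKey(1) & 0xFF == 27:  # press Esc to quit
        break

cv2.destroyAllWindows()
```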

So basically, I click 4 points and my code finds the warping matrix that maps the area enclosed by those points to a rectangle: the first point I click is mapped to the same pixel location, and the other 3 points are moved so that the enclosed region becomes a rectangle. To extend this to the rest of the wall, I apply the same matrix to the whole image.
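When the same matrix is applied to the whole image with a fixed 1920×1080 canvas and point 0 pinned in place, parts of the warped image can land at negative coordinates or past the canvas edge and get cropped. One common remedy, sketched below in NumPy only, is to push the four image corners through the homography, take their bounding box, and prepend a translation so everything lands on the canvas. The helper name `fit_canvas` is hypothetical, and the sign check assumes that if the homogeneous `w` changes sign across the image, the wall's vanishing line crosses the frame and no finite canvas can hold the result.

```python
import numpy as np


def fit_canvas(H, img_w, img_h):
    """Return (H_shifted, (out_w, out_h)) so every pixel of an img_w x img_h image
    lands inside the output canvas after warping with H_shifted."""
    corners = np.array([[0, 0, 1], [img_w - 1, 0, 1],
                        [img_w - 1, img_h - 1, 1], [0, img_h - 1, 1]], dtype=np.float64)
    mapped = corners @ H.T
    w = mapped[:, 2]
    # w is linear over the (convex) image, so checking the corners covers every pixel
    if not (np.all(w > 0) or np.all(w < 0)):
        raise ValueError("image crosses the vanishing line; crop the input first")
    xy = mapped[:, :2] / w[:, None]
    min_x, min_y = np.floor(xy.min(axis=0))
    max_x, max_y = np.ceil(xy.max(axis=0))
    # Translation that moves the top-left of the bounding box to (0, 0)
    T = np.array([[1, 0, -min_x], [0, 1, -min_y], [0, 0, 1]], dtype=np.float64)
    out_size = (int(max_x - min_x) + 1, int(max_y - min_y) + 1)
    return T @ H, out_size
```

The returned pair can then be passed as `cv2.warpPerspective(img, H_shifted, out_size)`. For a strongly oblique wall the bounding box can become very large, so capping `out_size` or cropping the input to the wall first may be needed.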

One set of points (the 4 points given through mouse clicks) that I tried is:

[[349, 445], [396, 415],[388, 596], [338, 610]]

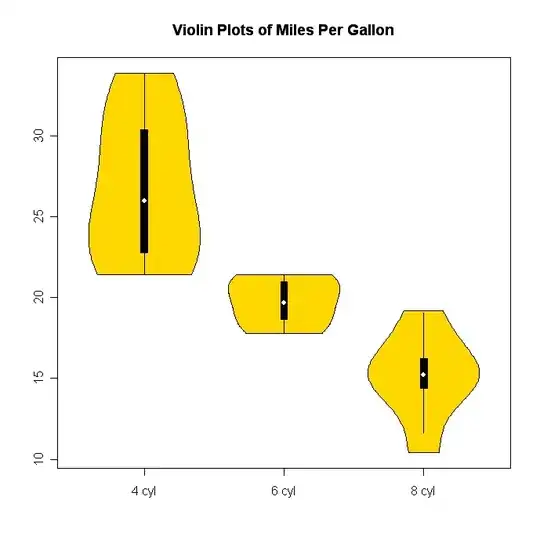

The result I got is: