I'm in the process of completing a TensorFlow tutorial via DataCamp and am transcribing/replicating the code examples I am working through in my own Jupyter notebook.

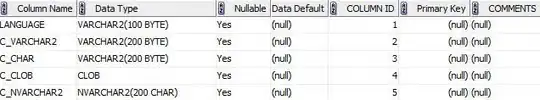

Here are the original instructions from the coding problem:

I'm running the following snippet of code but can't reproduce the result that the tutorial generates. I have confirmed that the tutorial's values are correct using a connected scatterplot of x vs. loss_function(x), shown a bit further below.

```python
# imports
import math

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from tensorflow import Variable, keras


def loss_function(x):
    return 4.0*math.cos(x-1)+np.divide(math.cos(2.0*math.pi*x),x)


# Initialize x_1 and x_2
# (dtype is passed by keyword: the second positional argument of Variable is `trainable`)
x_1 = Variable(6.0, dtype=np.float32)
x_2 = Variable(0.3, dtype=np.float32)

# Define the optimization operation
opt = keras.optimizers.SGD(learning_rate=0.01)

for j in range(100):
    # Perform minimization using the loss function and x_1
    opt.minimize(lambda: loss_function(x_1), var_list=[x_1])
    # Perform minimization using the loss function and x_2
    opt.minimize(lambda: loss_function(x_2), var_list=[x_2])

# Print x_1 and x_2 as numpy arrays
print(x_1.numpy(), x_2.numpy())
```

I drew a quick connected scatterplot to confirm (successfully) that the loss function I'm using reproduces the graph provided by the example (seen in the screenshot above):

```python
# Generate loss_function(x) values for a given range of x-values
losses = []
for p in np.linspace(0.1, 6.0, 60):
    losses.append(loss_function(p))

# Define x,y coordinates
x_coordinates = list(np.linspace(0.1, 6.0, 60))
y_coordinates = losses

# Plot
plt.scatter(x_coordinates, y_coordinates)
plt.plot(x_coordinates, y_coordinates)
plt.title('Plot of Input values (x) vs. Losses')
plt.xlabel('x')
plt.ylabel('loss_function(x)')
plt.show()
```

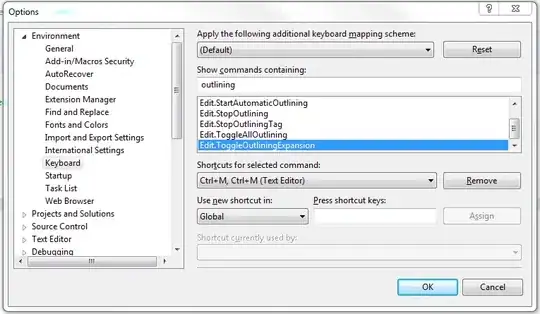

Here are the resulting global and local minima, respectively, as per the DataCamp environment:

4.38 is the correct global minimum, and 0.42 indeed corresponds to the first local minimum to the right of the starting point x_2 = 0.3.

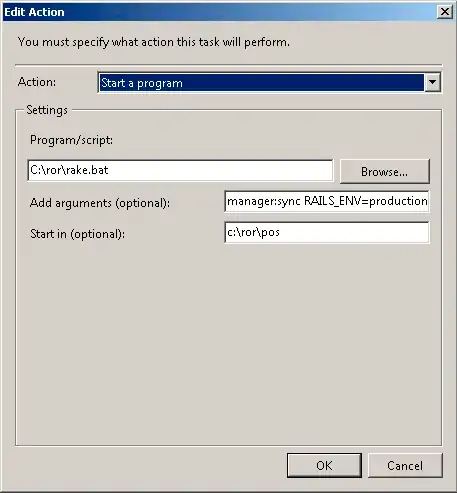
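As an independent cross-check on these two values, here is a minimal NumPy sketch (not part of the tutorial) that runs the same 100 steps of plain gradient descent with learning rate 0.01 from x = 6.0 and x = 0.3. It uses a hand-derived derivative of the loss in place of TensorFlow's automatic differentiation; the helper names `loss_gradient` and `gradient_descent` are hypothetical. If the derivative is right, the two runs should settle near 4.38 and 0.42 respectively.

```python
import numpy as np


def loss_function(x):
    # Same formula as above, written with NumPy ufuncs
    return 4.0 * np.cos(x - 1.0) + np.cos(2.0 * np.pi * x) / x


def loss_gradient(x):
    # Hand-derived d/dx of 4*cos(x - 1) + cos(2*pi*x) / x
    return (-4.0 * np.sin(x - 1.0)
            - 2.0 * np.pi * np.sin(2.0 * np.pi * x) / x
            - np.cos(2.0 * np.pi * x) / x**2)


def gradient_descent(x0, learning_rate=0.01, steps=100):
    # Plain gradient descent: x <- x - learning_rate * f'(x)
    x = x0
    for _ in range(steps):
        x = x - learning_rate * loss_gradient(x)
    return x


x_1 = gradient_descent(6.0)
x_2 = gradient_descent(0.3)
print(round(x_1, 2), round(x_2, 2))
```

Because each step moves *against* the gradient, both runs should move toward lower loss; a run that climbs instead suggests the gradient being applied is wrong or missing.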

And here are the results from my environment, both of which move in the opposite direction from the one they should take when minimizing the loss:

I've spent the better part of the last 90 minutes trying to work out why my results disagree with those of the DataCamp console, i.e., why the optimizer fails to minimize the loss for this simple toy example.

I'd appreciate any suggestions you might have after running the provided code in your own environment. Many thanks in advance!
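One plausible culprit (an assumption, not confirmed against the DataCamp setup): `math.cos` converts its tensor argument to a plain Python float, so TensorFlow cannot record that operation and has no gradient path back to `x_1`/`x_2`. Below is a minimal sketch that writes the same loss with TensorFlow ops (`tf.cos`, `tf.math.divide`). It also swaps the Keras `SGD.minimize` call for an explicit `tf.GradientTape` loop with a plain gradient-descent update, which avoids depending on differences between Keras optimizer versions. The helper name `minimize` is hypothetical.

```python
import math

import tensorflow as tf


def loss_function(x):
    # Same formula, but built from TensorFlow ops so GradientTape can differentiate it
    return 4.0 * tf.cos(x - 1.0) + tf.math.divide(tf.cos(2.0 * math.pi * x), x)


def minimize(x0, learning_rate=0.01, steps=100):
    # Hypothetical helper: plain gradient descent (SGD without momentum)
    x = tf.Variable(x0, dtype=tf.float32)
    for _ in range(steps):
        with tf.GradientTape() as tape:
            loss = loss_function(x)
        grad = tape.gradient(loss, x)
        x.assign_sub(learning_rate * grad)
    return float(x.numpy())


x_1 = minimize(6.0)
x_2 = minimize(0.3)
print(round(x_1, 2), round(x_2, 2))
```

If the two printed values land near the DataCamp ones, that would point at the non-TensorFlow math calls in the loss rather than at the optimizer settings.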