Is there a reason why the sum of the predicted values is the same as the sum of the dependent variable?

```r
ctl <- c(4.17, 5.58, 5.18, 6.11, 4.50, 4.61, 5.17, 4.53, 5.33, 5.14)
trt <- c(4.81, 4.17, 4.41, 3.59, 5.87, 3.83, 6.03, 4.89, 4.32, 4.69)
group <- gl(2, 10, 20, labels = c("Ctl", "Trt"))
weight <- c(ctl * 100, trt * 20)
```

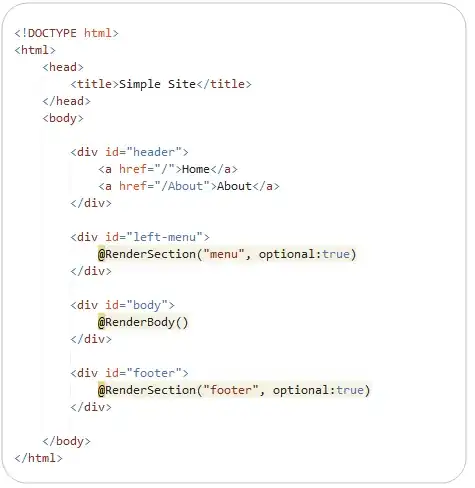

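```r
lm.D9 <- glm(weight ~ group, family = gaussian())
summary(lm.D9)

# newdata must be a data frame whose column names match the model's predictors
y <- predict(lm.D9, newdata = data.frame(group = group), type = "response")

sum(weight)
sum(y)
```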
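A sketch of why the two sums agree, reusing the data above: for a GLM fitted with its canonical link (the identity link is canonical for the Gaussian family), the maximum-likelihood estimates satisfy the score equations $X^\top(y - \hat\mu) = 0$. When the model has an intercept, the row of that system belonging to the column of ones reads $\sum_i y_i - \sum_i \hat\mu_i = 0$, so the equality is a property of the fit rather than a coincidence; it need not hold in general without an intercept-like column or with a non-canonical link. The names `fit`, `X`, `mu_hat` and `score` are illustrative only, and `fitted()` is used because it returns the same response-scale values as `predict(..., type = "response")` on the training data.

```r
# Data from the question
ctl <- c(4.17, 5.58, 5.18, 6.11, 4.50, 4.61, 5.17, 4.53, 5.33, 5.14)
trt <- c(4.81, 4.17, 4.41, 3.59, 5.87, 3.83, 6.03, 4.89, 4.32, 4.69)
group <- gl(2, 10, 20, labels = c("Ctl", "Trt"))
weight <- c(ctl * 100, trt * 20)

fit <- glm(weight ~ group, family = gaussian())
X <- model.matrix(fit)   # design matrix: intercept column + dummy for "Trt"
mu_hat <- fitted(fit)    # response-scale fitted values

# Score equations for a canonical-link GLM: X' (y - mu_hat) = 0
score <- drop(crossprod(X, weight - mu_hat))
print(score)             # both entries are zero up to rounding error

# The intercept row of that system is sum(y) - sum(mu_hat)
print(c(sum(weight), sum(mu_hat)))

# With only a factor on the right-hand side, each fitted value is its group mean
print(all.equal(unname(mu_hat), ave(weight, group)))
```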

The dispersion is also very high in my actual data. Any leads on how to tackle this? With my original data I am building a model of actual vs. expected values. I have tried two different models:

- Ratio of Actual to Expected as the dependent variable, fitted with a Gaussian GLM.

- Difference (Actual - Expected) as the dependent variable.
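A minimal sketch of the two set-ups listed above, on simulated data because the original data is not shown; the columns `actual`, `expected` and `x`, and all simulation settings, are hypothetical. It illustrates one point worth keeping in mind when comparing the two fits: for the Gaussian family the dispersion is the residual variance in squared units of the response, so a raw difference will naturally report a far larger dispersion than a ratio near 1, and the two numbers are not directly comparable.

```r
set.seed(1)
n <- 200
x <- runif(n)                                 # hypothetical covariate
expected <- runif(n, 50, 500)                 # hypothetical expected values
actual <- rpois(n, expected * exp(0.3 * x))   # hypothetical actual values
d <- data.frame(actual, expected, x)

# Model 1: Actual/Expected ratio as the response, Gaussian GLM
m_ratio <- glm(I(actual / expected) ~ x, family = gaussian(), data = d)

# Model 2: Actual - Expected difference as the response, Gaussian GLM
m_diff <- glm(I(actual - expected) ~ x, family = gaussian(), data = d)

# Gaussian dispersion = residual variance, in squared units of each response
disp <- c(ratio = summary(m_ratio)$dispersion,
          diff  = summary(m_diff)$dispersion)
print(disp)
```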

However, the dispersion in the second model is very high, and neither model validates.
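As a possible starting point for investigating the high dispersion, here is a sketch on the example data: for a Gaussian GLM, the dispersion reported by `summary()` is the Pearson chi-square divided by the residual degrees of freedom, i.e. one pooled residual variance. Splitting the residual variance by group shows whether that pooled value is driven by one part of the data; in the example, the x100 versus x20 scaling in `weight` makes the Ctl residuals far more spread out than the Trt residuals. The names `phi_summary`, `phi_pearson` and `grp_var` are illustrative.

```r
ctl <- c(4.17, 5.58, 5.18, 6.11, 4.50, 4.61, 5.17, 4.53, 5.33, 5.14)
trt <- c(4.81, 4.17, 4.41, 3.59, 5.87, 3.83, 6.03, 4.89, 4.32, 4.69)
group <- gl(2, 10, 20, labels = c("Ctl", "Trt"))
weight <- c(ctl * 100, trt * 20)
fit <- glm(weight ~ group, family = gaussian())

# Dispersion as reported by summary(): Pearson chi-square / residual df
phi_summary <- summary(fit)$dispersion
phi_pearson <- sum(residuals(fit, type = "pearson")^2) / df.residual(fit)
print(c(phi_summary, phi_pearson))

# A single pooled dispersion can hide very different spreads per group
grp_var <- tapply(residuals(fit, type = "response"), group, var)
print(grp_var)
```

The same per-group (or per-segment) breakdown can be applied to the residuals of either of the actual vs. expected models to see where the spread comes from.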

Help appreciated!