The code below transforms a detected 2D image point to its 3D location on a defined plane (a grid) in the 3D world. This means Z=0, and since the extrinsics and intrinsics are known, we can compute the 3D point corresponding to the detected 2D image point:

import cv2
import numpy as np

# load intrinsics and extrinsics
with np.load('parameters_cam1.npz') as X:
    # only two names are unpacked, so only two keys are read
    mtx, dist = [X[i] for i in ('mtx', 'dist')]

with np.load('extrincic.npz') as X:
    rvecs1, tvecs1 = [X[i] for i in ('rvecs1', 'tvecs1')]

# prepare rotation matrix
R_mtx, jac = cv2.Rodrigues(rvecs1)

# prepare projection matrix
Extrincic = cv2.hconcat([R_mtx, tvecs1])
Projection_mtx = mtx.dot(Extrincic)

# delete the third column since Z=0
Projection_mtx = np.delete(Projection_mtx, 2, 1)

# find the inverse of the matrix
Inv_Projection = np.linalg.inv(Projection_mtx)

# detected image point (extracted from a queue);
# assumes pts1_blue[0] holds a single (x, y) pixel coordinate
img_point = np.asarray(pts1_blue[0], dtype=float).reshape(2, 1)

# append 1 so the point is in homogeneous coordinates (3x1 column vector)
img_point = np.vstack((img_point, [[1.0]]))

# calculate the 3D point, which is located on the 3D plane
# (a Python name cannot start with a digit, hence point_3d)
point_3d = Inv_Projection.dot(img_point)

# show results
print('3D_pt_method1\n', point_3d)

#output

3D_pt_method1
[[0.01881387]
 [0.0259416 ]
 [0.04150276]]

By normalizing the point (dividing by the third value), the result is

X_World=0.45331611680765327 # 45.3 cm from the defined world point, which is correct

Y_world=0.6250572251098481 # 62.5 cm, which is also correct

By evaluating the results, it turns out that they are correct.
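The Z=0 approach above can be checked on synthetic data. The following is a minimal sketch, not my actual setup: the intrinsics `K`, the rotation `R`, the translation `t` and the test point `world_pt` are made-up values chosen only for illustration. It projects a known point on the plane into the image, then inverts the 3x3 matrix obtained by dropping the Z column and normalizes by the third component.

```python
import numpy as np

# Hypothetical intrinsics and pose, chosen only for illustration.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
angle = np.deg2rad(30.0)
R = np.array([[1.0, 0.0, 0.0],
              [0.0, np.cos(angle), -np.sin(angle)],
              [0.0, np.sin(angle), np.cos(angle)]])
t = np.array([[0.1], [-0.2], [2.0]])

# Full 3x4 projection matrix P = K [R | t].
P = K @ np.hstack([R, t])

# A known point on the plane Z = 0, projected into the image.
world_pt = np.array([[0.45], [0.62], [0.0], [1.0]])
pixel_h = P @ world_pt
pixel_h = pixel_h / pixel_h[2]  # homogeneous pixel (u, v, 1)

# Dropping the Z column leaves a 3x3 plane-to-image mapping.
H = np.delete(P, 2, axis=1)
plane_h = np.linalg.inv(H) @ pixel_h

# Normalize by the third component to get X and Y on the plane.
X_world, Y_world = (plane_h[:2] / plane_h[2]).ravel()
print(X_world, Y_world)
```

Under these assumptions the printed values should match the X and Y of `world_pt` up to floating-point error, which is the same normalization step used on my measured point.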

I know that we can't retrieve the Z coordinate of the 3D world point, since depth information is lost going from 3D to 2D. The following equation also performs the inverse projection of the 2D point into the 3D world and can be found throughout the literature; the result is an equation representing a line on which the 3D world point must lie.
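For reference, here is a minimal sketch of what such a line looks like in code. It assumes the common pinhole convention x ~ K (R X + t), where R and t map world coordinates to camera coordinates; whether eq. 3.15 uses exactly this convention is an assumption on my side. The helper names `backproject_ray` and `intersect_plane_z0` and all numeric values are hypothetical.

```python
import numpy as np

def backproject_ray(K, R, t, pixel):
    """Return (origin, direction) of the world-space ray through a pixel.

    Assumes x ~ K (R X + t), i.e. R and t map world to camera coordinates.
    """
    pixel_h = np.array([pixel[0], pixel[1], 1.0])
    origin = -R.T @ np.ravel(t)                   # camera centre in world coordinates
    direction = R.T @ np.linalg.inv(K) @ pixel_h  # ray direction in world coordinates
    return origin, direction

def intersect_plane_z0(origin, direction):
    """Return the point on the ray whose Z coordinate is zero."""
    lam = -origin[2] / direction[2]               # scale factor along the ray
    return origin + lam * direction

# Hypothetical camera: no rotation, world origin 2 units in front of the camera.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 2.0])

origin, direction = backproject_ray(K, R, t, (400.0, 300.0))
point_on_plane = intersect_plane_z0(origin, direction)
print(point_on_plane)
```

Every value of the scale factor gives a different point on the ray, so a single 3D point only results once an extra constraint (here Z=0) fixes that factor.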

I put equation 3.15 into code, but without setting Z=0, that is, without deleting the third column of the projection matrix as I did in the previous method (just as the equation is written), by doing the following:

# invert the rotation matrix
INV_R = np.linalg.inv(R_mtx)

# invert the camera matrix
INV_k = np.linalg.inv(mtx)

# multiply the two matrices
kinv_Rinv = INV_k.dot(INV_R)

# calculate the 3D point X expressed in eq. 3.15
# (a Python name cannot start with a digit, hence point_3d)
point_3d = kinv_Rinv.dot(img_point) + tvecs1

# print the results
print('3D_pt_method2\n', point_3d)

and the result was

3D_pt_method2 #how should one understand these coordinates?
[[-9.12505825]
 [-5.57152147]
 [40.12264881]]

My question is: how should I understand or interpret this result? It doesn't make sense compared to the previous method, where Z=0. The resulting 3x1 vector gives the impression that its values are simply the 3D X, Y and Z of the detected image point. However, this is not true if we compare X and Y with the previous method.

So what exactly is the difference between 3D_pt_method1 and 3D_pt_method2?

I hope I have expressed myself clearly, and I would really appreciate help understanding the difference between the two implementations.

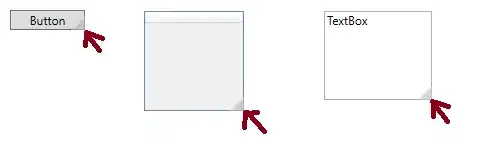

Note: the grid that represents my defined world plane can be seen in the image; the distance between every two yellow points is 40 cm.

Thanks in advance