I write messages to Kafka from CSV files. My producer reports that all of the data was produced to the Kafka topic.

On the consuming side, I use Apache NiFi (the ConsumeKafka_2_0 processor) to read from the Kafka topic.

If I produce data to Kafka in a single stream, everything is OK, but if I run multiple producers on multiple files in parallel, I lose a lot of rows.

The core of my producer is as follows:

```python
import csv
import json
import logging

import avro.schema
from confluent_kafka import KafkaException, Producer

import config  # my own settings module (brokers, credentials, topic name)

log = logging.getLogger(__name__)


def produce(for_produce_file):
    log.info('Producing started')

    with open(config.AVRO_SCHEME_FILE) as schema_file:
        avro_schema = avro.schema.Parse(schema_file.read())
    names_from_schema = [field.name for field in avro_schema.fields]

    producer = Producer({'bootstrap.servers': config.KAFKA_BROKERS,
                         'security.protocol': config.SECURITY_PROTOCOL,
                         'ssl.ca.location': config.SSL_CAFILE,
                         'sasl.mechanism': config.SASL_MECHANISM,
                         'sasl.username': config.SASL_PLAIN_USERNAME,
                         'sasl.password': config.SASL_PLAIN_PASSWORD,
                         'queue.buffering.max.messages': 1000000,
                         'queue.buffering.max.ms': 5000})

    try:
        file = open(for_produce_file, 'r', encoding='utf-8')
    except FileNotFoundError:
        log.error(f'File {for_produce_file} not found')
    else:
        with file:  # make sure the CSV file is closed afterwards
            # Counts messages handed to the local producer queue;
            # broker acknowledgement happens asynchronously later.
            produced_str_count = 0
            csv_reader = csv.DictReader(file, delimiter="|", fieldnames=names_from_schema)
            log.info(f'File {for_produce_file} opened')

            for row in csv_reader:
                record = dict(zip(names_from_schema, row.values()))
                value = json.dumps(record).encode('utf8')
                while True:
                    try:
                        producer.produce(config.TOPIC_NAME, value)
                        producer.poll(0)  # serve delivery events
                        produced_str_count += 1
                        break
                    except BufferError as e:
                        # Local queue is full: wait for deliveries, then retry
                        log.info(e)
                        producer.poll(5)
                    except KafkaException as e:
                        log.error('Kafka error: {}, message was {} bytes'.format(e, len(value)))
                        log.error(row)
                        break

        producer.flush()  # block until all queued messages are sent or fail
        log.info(f'Producing ended. Produced {produced_str_count} rows')
```
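`Producer.produce()` in confluent-kafka-python is asynchronous: it only places the message in the local queue, so a counter incremented right after it (like `produced_str_count`) counts enqueued messages, not messages the brokers acknowledged. Success or failure of each message is reported later through a delivery callback with the signature `(err, msg)`, which is invoked from `poll()` or `flush()`. The sketch below is a hypothetical helper (`DeliveryCounter` is not part of the library) that could be passed as the callback to tally confirmed and failed deliveries; it has no Kafka dependency of its own.

```python
import logging

log = logging.getLogger(__name__)


class DeliveryCounter:
    """Hypothetical helper: tallies delivery reports given to a producer callback."""

    def __init__(self):
        self.delivered = 0
        self.failed = 0

    def __call__(self, err, msg):
        # confluent_kafka calls the delivery callback as callback(err, msg);
        # err is None when the message was successfully delivered.
        if err is None:
            self.delivered += 1
        else:
            self.failed += 1
            log.error('Delivery failed: %s', err)
```

In the loop one could call `producer.produce(config.TOPIC_NAME, value, on_delivery=counter)` and, after `producer.flush()` (which returns the number of messages still in the queue), log `counter.delivered` and `counter.failed` instead of `produced_str_count`. A non-zero `failed` count would mean rows never reached the topic; `failed == 0` would point at the consumer side.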

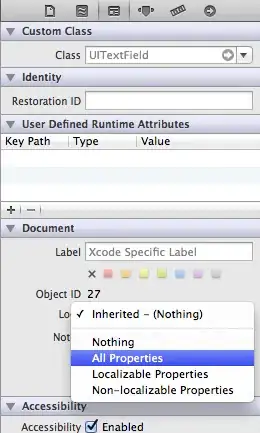

Screenshot of the NiFi processor properties:

The Kafka cluster has 3 nodes, and the topic replication factor is 3.
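With a replication factor of 3, how durable each write is also depends on the producer's `acks` setting (`min.insync.replicas` is a topic/broker setting, not a producer one). A minimal sketch, assuming a librdkafka version that supports idempotence; `with_durability` is a hypothetical helper that copies an existing producer config and adds stricter delivery settings.

```python
def with_durability(base_conf):
    """Hypothetical helper: copy a confluent_kafka Producer config with stricter delivery settings."""
    conf = dict(base_conf)  # leave the caller's dict untouched
    # Leader waits for all in-sync replicas before acknowledging a write.
    conf['acks'] = 'all'
    # Let librdkafka retry without producing duplicates or reordering.
    conf['enable.idempotence'] = True
    return conf
```

It would be used as `Producer(with_durability({...}))` with the same settings dict as in the producer above.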

Could the problem be that the producers write faster than the consumer reads, so that data gets deleted when some block overflows?
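For reference, Kafka does not delete messages because a consumer falls behind; data is removed by the topic's retention policy, by time (`retention.ms`) or by size (`retention.bytes`, which applies to each partition separately). A rough back-of-the-envelope sketch for checking whether size-based retention could plausibly have dropped rows, assuming messages of a fixed average size spread evenly over partitions; `approx_retained_messages` and its inputs are hypothetical.

```python
def approx_retained_messages(retention_bytes, partitions, avg_message_bytes):
    """Rough estimate of how many messages a topic keeps under size-based retention.

    retention_bytes is the per-partition limit (Kafka's retention.bytes);
    -1 means no size limit. Kafka deletes whole log segments, so the real
    figure can differ somewhat from this estimate.
    """
    if retention_bytes < 0:
        return None  # no size-based limit configured
    return (retention_bytes * partitions) // avg_message_bytes
```

If the total number of rows written across all files is far below this figure and the NiFi consumer never lags longer than `retention.ms`, retention is an unlikely explanation.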

Any advice would be appreciated.