I have a classification problem where i want to see if a client is defaulter or non defaulter. What is important to me is the probability of default, and how well calibrated the model is so i am doing a grid search with brier score as my scoring method. I am also interested to see if there is a relationship between brier score and roc-auc, so in the grid search i feed these two as parameters.

i then plot the results of grid search against each other

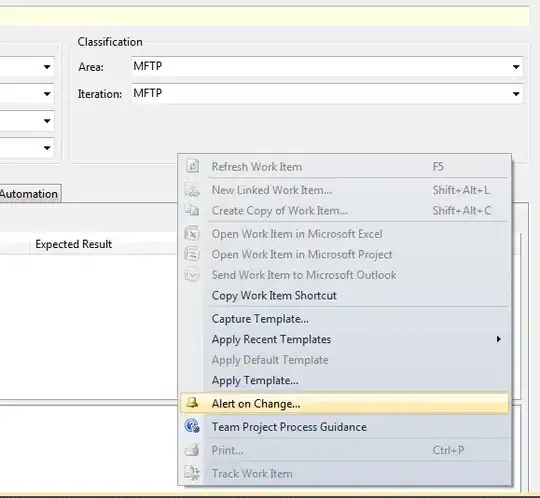

clf = GridSearchCV(pipeline, parameters, cv=kfolds.split(x, y),

scoring=['neg_brier_score','roc_auc'], return_train_score=True, n_jobs=n_jobs,refit='neg_brier_score')

i choose to optimize on brier score from the refit parameter as you can see above. Before when i was using roc-auc if there was a big difference between the validation score and train score from grid search then it would be considered overfitting. Is it the same for brier score too? e.g. my results for both brier score and roc-auc when i plot:

the above is for my brier score using results from grid saeach and below is for my roc-auc:

looking at the brier score plots it doesn't look like it is overfitting. however when you look at the roc-auc plots from gs, it looks like it is. If i am to choose to optimize on brier score like i have done above, how can i tell if over fitting? Is it if there is a big difference in brier score from validation vs train set? how can i also get good accuracy and a well calibrated probabililty.

NOTE: I am using xgboost.