I am training an LSTM to predict a time series. I have tried an encoder-decoder architecture without any dropout. I divided my data into 70% training and 30% validation; the training and validation sets contain around 107 and 47 points, respectively. However, the training loss is always greater than the validation loss. Below is the code.
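The question does not show how `train_X1`/`train_Y1` are built. As a hedged sketch, assuming a univariate series is cut into sliding windows of length `look_back` with a one-step target and then split chronologically (no shuffling before the split), the resulting shapes match the reshape calls in the question's code: inputs are `(samples, look_back)` and targets `(samples, 1)`. The helpers `make_windows` and `chronological_split` and the sine-wave series are hypothetical; 157 points with `look_back=3` happen to give 154 windows, which a 70/30 split turns into 107 training and 47 validation samples.

```python
import numpy as np

def make_windows(series, look_back, horizon=1):
    """Hypothetical helper: turn a 1-D series into (samples, look_back) inputs
    and (samples, horizon) targets using a sliding window."""
    X, Y = [], []
    for start in range(len(series) - look_back - horizon + 1):
        X.append(series[start:start + look_back])
        Y.append(series[start + look_back:start + look_back + horizon])
    return np.array(X), np.array(Y)

def chronological_split(X, Y, train_frac=0.7):
    """Hypothetical helper: split windows in time order, so every validation
    target lies after every training target."""
    n_train = int(len(X) * train_frac)
    return X[:n_train], Y[:n_train], X[n_train:], Y[n_train:]

# Synthetic stand-in for the real data: 157 points, look_back=3 -> 154 windows
series = np.sin(np.linspace(0, 20, 157))
X, Y = make_windows(series, look_back=3)
train_X1, train_Y1, test_X1, test_Y1 = chronological_split(X, Y)
print(train_X1.shape, train_Y1.shape, test_X1.shape, test_Y1.shape)
```

With these shapes, the later `reshape(..., 1)` calls produce `(107, 3, 1)` inputs and `(107, 1, 1)` targets, which matches the single time step produced by `RepeatVector(1)`.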

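```python
import numpy
import tensorflow
import matplotlib.pyplot as plt
from numpy.random import seed
from tensorflow.keras import Input
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Bidirectional, RepeatVector, TimeDistributed, Dense
from tensorflow.keras.optimizers import SGD, Adam
from tensorflow.keras.optimizers.schedules import InverseTimeDecay
from tensorflow.keras.callbacks import EarlyStopping
from tqdm.keras import TqdmCallback

# train_X1, train_Y1, test_X1, test_Y1, look_back, batchsize and modelcaption
# are defined earlier in the script (not shown here).

seed(12346)
tensorflow.random.set_seed(12346)

Lrn_Rate = 0.0005
Momentum = 0.8

# `lr` is deprecated and `decay` is not accepted by newer (TF 2.11+) optimizers;
# InverseTimeDecay reproduces the old rule lr / (1 + decay * iterations).
sgd = SGD(learning_rate=InverseTimeDecay(Lrn_Rate, decay_steps=1, decay_rate=1e-6),
          momentum=Momentum, nesterov=True)
adam = Adam(learning_rate=Lrn_Rate, beta_1=0.9, beta_2=0.999, amsgrad=False)

optimizernme = sgd
optimizernmestr = 'sgd'

# Note: this monitors the training loss, not val_loss
callbacks = EarlyStopping(monitor='loss', patience=50, restore_best_weights=True)

# LSTM layers expect 3-D arrays: (samples, timesteps, features)
train_X1 = numpy.reshape(train_X1, (train_X1.shape[0], train_X1.shape[1], 1))
test_X1 = numpy.reshape(test_X1, (test_X1.shape[0], test_X1.shape[1], 1))
train_Y1 = train_Y1.reshape((train_Y1.shape[0], train_Y1.shape[1], 1))
test_Y1 = test_Y1.reshape((test_Y1.shape[0], test_Y1.shape[1], 1))

Hiddenunits = 240
DenseUnits = 100
n_features = 1
n_timesteps = look_back

model = Sequential()
model.add(Input(shape=(n_timesteps, n_features)))
# Encoder: two stacked bidirectional LSTMs; the second returns only its final output
model.add(Bidirectional(LSTM(Hiddenunits, activation='relu', return_sequences=True)))  # 90,120 worked for us uk
model.add(Bidirectional(LSTM(Hiddenunits, activation='relu', return_sequences=False)))
# Repeat the encoded vector once, i.e. predict a single output time step
model.add(RepeatVector(1))
# Decoder
model.add(Bidirectional(LSTM(Hiddenunits, activation='relu', return_sequences=True)))
model.add(Bidirectional(LSTM(Hiddenunits, activation='relu', return_sequences=True)))
model.add(TimeDistributed(Dense(DenseUnits, activation='relu')))
model.add(TimeDistributed(Dense(1)))

model.compile(loss='mean_squared_error', optimizer=optimizernme)

history = model.fit(train_X1, train_Y1, validation_data=(test_X1, test_Y1),
                    batch_size=batchsize, epochs=250,
                    callbacks=[callbacks, TqdmCallback(verbose=0)],
                    shuffle=True, verbose=0)

plt.plot(history.history['loss'])
plt.plot(history.history['val_loss'])
plt.title('model loss' + modelcaption)
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(['train', 'test'], loc='upper left')
plt.show()
```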
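One detail that matters when reading these curves: in tf.keras, the per-epoch value in `history.history['loss']` is the running average of the batch losses recorded while the weights are still being updated during that epoch, whereas `val_loss` is computed once with the weights at the end of the epoch. The NumPy sketch below illustrates the effect with a plain linear model trained by mini-batch SGD (not the LSTM from the question; all data are synthetic and the learning rate, batch size and weights are arbitrary choices). It records the Keras-style averaged training loss, the training loss re-evaluated with end-of-epoch weights, and a validation loss.

```python
import numpy as np

rng = np.random.default_rng(0)
w_true = np.array([1.5, -2.0, 0.5])  # arbitrary synthetic "true" weights

def make_data(n):
    X = rng.normal(size=(n, 3))
    y = X @ w_true + 0.1 * rng.normal(size=n)
    return X, y

def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))

X_tr, y_tr = make_data(107)  # same split sizes as in the question
X_va, y_va = make_data(47)

w = np.zeros(3)
lr, batch_size, epochs = 0.05, 8, 5
reported_loss, end_of_epoch_train, val_loss = [], [], []

for epoch in range(epochs):
    order = rng.permutation(len(X_tr))  # reshuffle each epoch, like shuffle=True
    batch_losses, batch_sizes = [], []
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        Xb, yb = X_tr[idx], y_tr[idx]
        err = Xb @ w - yb
        batch_losses.append(float(np.mean(err ** 2)))  # loss before this update
        batch_sizes.append(len(idx))
        w -= lr * 2 * Xb.T @ err / len(idx)  # SGD step on the MSE gradient
    # Size-weighted mean of batch losses: analogous to history['loss']
    reported_loss.append(float(np.average(batch_losses, weights=batch_sizes)))
    # Training loss with end-of-epoch weights: analogous to model.evaluate
    end_of_epoch_train.append(mse(X_tr, y_tr, w))
    # Validation loss with end-of-epoch weights: analogous to history['val_loss']
    val_loss.append(mse(X_va, y_va, w))

for e in range(epochs):
    print(f"epoch {e}: loss={reported_loss[e]:.4f} "
          f"train@end={end_of_epoch_train[e]:.4f} val_loss={val_loss[e]:.4f}")
```

In the early epochs the averaged `loss` sits well above both end-of-epoch numbers even though training and validation data come from the same distribution. Dropout, when added, pushes in the same direction, because it is active only while the training loss is computed. Calling `model.evaluate(train_X1, train_Y1)` after training gives a training loss that is directly comparable to `val_loss`.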

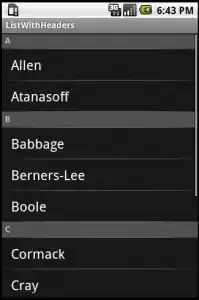

The training loss comes out greater than the validation loss: the training loss is about 0.02 and the validation loss is about 0.004 (see the attached picture). I have tried many things, including adding dropout and more hidden units, but neither solved the problem. Any comments or suggestions are appreciated.
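Another possibility worth ruling out is that the last 30% of the series is simply easier to predict (for example, calmer, or spanning a smaller range after scaling), so any model's MSE is lower there. One hedged way to check is to score a naive persistence forecast ("the next value equals the last value in the window") on both splits; if its MSE also drops sharply from training to validation, a lower validation loss is expected rather than a sign of a bug. The series below is synthetic, with volatility that shrinks over time, and `persistence_mse`/`windows` are hypothetical helpers; with real data you would pass the 2-D `train_X1`/`train_Y1` and `test_X1`/`test_Y1` from before the reshape.

```python
import numpy as np

def persistence_mse(X, Y):
    """MSE of the naive forecast 'next value = last value in the window'."""
    pred = X[:, -1:]  # last observed value of each window, shape (samples, 1)
    return float(np.mean((Y - pred) ** 2))

def windows(series, look_back):
    """Hypothetical helper: sliding windows with a one-step-ahead target."""
    X = np.array([series[i:i + look_back] for i in range(len(series) - look_back)])
    Y = series[look_back:].reshape(-1, 1)
    return X, Y

# Synthetic random walk whose step size shrinks over time (stand-in for real data)
rng = np.random.default_rng(1)
n = 157
steps = rng.normal(size=n) * np.linspace(1.0, 0.2, n)
series = np.cumsum(steps)

X, Y = windows(series, look_back=3)
n_train = int(0.7 * len(X))  # chronological 70/30 split
base_train = persistence_mse(X[:n_train], Y[:n_train])
base_val = persistence_mse(X[n_train:], Y[n_train:])
print(f"persistence MSE: train={base_train:.4f} val={base_val:.4f} "
      f"ratio={base_train / base_val:.1f}")
```

If the baseline ratio is of the same order as the model's (about 0.02 / 0.004 = 5 in the question), the gap mostly reflects the data rather than the network.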