I am benchmarking a simple matrix transposition kernel on a Qualcomm Adreno 630 GPU to see the impact of different work-group sizes, and I am getting some surprising results that I cannot explain. Here is my kernel code:

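```c
__kernel void transpose(__global float *input, __global float *output,
                        const int width, const int height)
{
    int i = get_global_id(0); // column index into input (x dimension)
    int j = get_global_id(1); // row index into input (y dimension)

    // Element (row j, column i) of input becomes (row i, column j) of output
    output[i * height + j] = input[j * width + i];
}
```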

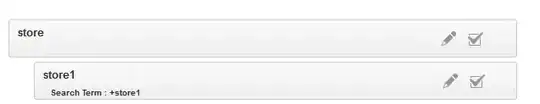

Width and height are both 6400. These are the results (execution time is the difference between the END and START profiling timestamps of the kernel's event):

| Work-group size x | Work-group size y | Execution time (ms) |
|---:|---:|---:|
| 4 | 64 | 24 |
| 64 | 4 | 169 |
| 256 | 1 | 654 |
| 1 | 256 | 34 |
| 8 | 32 | 27 |
| 1 | 1024 | 375 |
| 1024 | 1 | 657 |
| 32 | 32 | 26 |

After this, I ran another experiment in which I changed the width and height from 6400 to 6401 (and the global work size in the `clEnqueueNDRangeKernel` call as well), and the results are even more interesting:

| Work-group size x | Work-group size y | Execution time (ms) |
|---:|---:|---:|
| 4 | 64 | 28 |
| 64 | 4 | 105 |
| 256 | 1 | 359 |
| 1 | 256 | 31 |
| 8 | 32 | 32 |
| 1 | 1024 | 99 |
| 1024 | 1 | 358 |
| 32 | 32 | 32 |

The execution time drops significantly for the slow configurations (64 × 4, 256 × 1, 1 × 1024 and 1024 × 1), while the fast ones stay at roughly 30 ms. I know memory coalescing or caching could play a role here, but I cannot fully explain this.