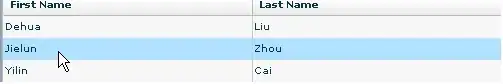

I just read through this link that explains the difference between binary cross-entropy and categorical cross-entropy, and in particular, I had a question about this picture:

The author addresses the multi-label case, where the target (ground-truth) labels are multi-hot encoded (every entry is either 0 or 1). But what loss function would you use if the target labels contained values other than 0 and 1? For example, if only half of the panda were in the image and I labeled it as [1, 0, 0.5], would I still use binary cross-entropy? How does the math work out when the target vector is not binary?
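To make the question concrete, here is a minimal sketch of binary cross-entropy averaged over independent labels. It assumes the standard per-label definition `-(y*log(p) + (1-y)*log(1-p))` and assumes the model outputs one sigmoid probability per label. The function name `bce` and the prediction values are hypothetical, chosen only for illustration. Note that nothing in the formula itself requires `y` to be exactly 0 or 1, so the soft label 0.5 can be plugged in directly.

```python
import math

def bce(targets, probs, eps=1e-12):
    """Mean binary cross-entropy over independent labels (hypothetical helper).

    targets: values in [0, 1]; soft labels such as 0.5 are allowed.
    probs:   predicted probabilities in (0, 1), e.g. sigmoid outputs.
    """
    total = 0.0
    for y, p in zip(targets, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(targets)

# Soft target for the half-visible panda example.
target = [1.0, 0.0, 0.5]

# Hypothetical predictions: only the third (panda) probability varies.
for p_panda in (0.1, 0.5, 0.9):
    print(p_panda, round(bce(target, [0.9, 0.1, p_panda]), 4))
```

Running this, the `p_panda = 0.5` row gives the smallest loss, and 0.1 and 0.9 give equal loss by symmetry. With a soft target, the per-label loss is minimized when `p` equals `y`, but the minimum is no longer 0 (for `y = 0.5` it is `log(2)`).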