I am training very simple inception block followed by a maxpool and fully-connected layer on NVIDIA GeForce RTX 2070 GPU and its taking very long time for an iteration. Just finished 10 iterations in more than 24 hours.

Here is the code for inception model definition

class Net(nn.Module):

def __init__(self):

super().__init__()

input_channels = 3

conv_block = BasicConv2d(input_channels, 64, kernel_size=1)

self.branch3x3stack = nn.Sequential(

BasicConv2d(input_channels, 64, kernel_size=1),

BasicConv2d(64, 96, kernel_size=3, padding=1),

BasicConv2d(96, 96, kernel_size=3, padding=1),

)

self.branch3x3 = nn.Sequential(

BasicConv2d(input_channels, 64, kernel_size=1),

BasicConv2d(64, 96, kernel_size=3, padding=1),

)

self.branch1x1 = BasicConv2d(input_channels, 96, kernel_size=1)

self.branchpool = nn.Sequential(

nn.AvgPool2d(kernel_size=3, stride=1, padding=1),

BasicConv2d(input_channels, 96, kernel_size=1),

)

self.maxpool = nn.MaxPool2d(kernel_size=8, stride=8)

self.fc_seqn = nn.Sequential(nn.Linear(301056, 1))

def forward(self, x):

x = [

self.branch3x3stack(x),

self.branch3x3(x),

self.branch1x1(x),

self.branchpool(x),

]

x = torch.cat(x, 1)

x = self.maxpool(x)

x = x.view(x.size(0), -1)

x = self.fc_seqn(x)

return x # torch.cat(x, 1)

and the training code I used

def training_code(self, model):

model = copy.deepcopy(model)

model = model.to(self.device)

criterion = nn.MSELoss()

optimizer = optim.Adam(model.parameters(), lr=self.learning_rate)

for epoch in range(self.epochs):

print("\n epoch :", epoch)

running_loss = 0.0

start_epoch = time.time()

for i, (inputs, labels) in enumerate(self.train_data_loader):

inputs = inputs.to(self.device)

labels = labels.to(self.device)

optimizer.zero_grad()

outputs = (model(inputs)).squeeze()

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

running_loss += loss.item()

if i % log_step == log_step - 1:

avg_loss = running_loss / float(log_step)

print("[epoch:%d, batch:%5d] loss: %.3f" % (epoch + 1, i + 1, avg_loss))

running_loss = 0.0

if epoch % save_model_step == 0:

self.path_saved_model = path_saved_model + "_" + str(epoch)

self.save_model(model)

print("\nFinished Training\n")

self.save_model(model)

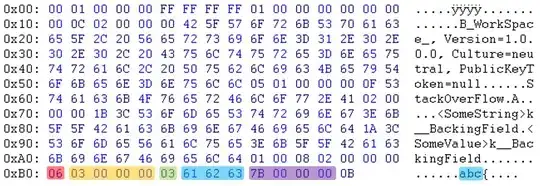

Here is the summary of the convolution neural network. Can anyone please help me how to speed up the training?

Here is the summary of the convolution neural network after reducing the number of inputs to the fully-connected (FC) layer. But still the training time is very high similar as before. Can suggestion on how to speed up the training will be helpful.