I have a Spark job running on a Dataproc cluster. How do I configure the environment to debug it on my local machine with my IDE?

1 Answer

This tutorial assumes the following:

- You know how to create GCP Dataproc clusters, either by API calls, cloud shell commands or Web UI

- You know how to submit a Spark Job

- You have permissions to launch jobs, create clusters and use Compute Engine instances

After some attempts, I've worked out how to debug a Dataproc Spark job running on a cluster from your local machine.

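As you may know, you can submit a Spark job through the Web UI, by sending a request to the Dataproc API, or with the `gcloud dataproc jobs submit spark` command. Whichever way you choose, start by adding the following key-value pair to the properties field of the SparkJob: `spark.driver.extraJavaOptions=-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=REMOTE_PORT`, where REMOTE_PORT is the port on the worker where the driver will be listening. If you pass it through `--properties` on the gcloud command line, prefix the value with a custom delimiter such as `^#^`, because the comma is the default separator between properties and would otherwise split the JDWP options.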
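The submission step above can be sketched with gcloud roughly as follows. The cluster name, region, main class and jar path are hypothetical placeholders, not values from this answer. The `^#^` prefix is the alternate-delimiter syntax described in `gcloud topic escaping`. The script only prints the command so you can review it first.

```shell
# Placeholder values (hypothetical); replace them with your own.
CLUSTER_NAME="my-cluster"
REGION="us-central1"
MAIN_CLASS="com.example.MyJob"
JAR="gs://my-bucket/my-job.jar"
REMOTE_PORT=9094

# JDWP agent options: the driver JVM listens on REMOTE_PORT and, because of
# suspend=y, waits for a debugger to attach before running the job.
JDWP_OPTS="-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=${REMOTE_PORT}"

# ^#^ switches the --properties delimiter from ',' to '#', so the commas
# inside the JDWP options are not treated as property separators.
PROPERTIES="^#^spark.driver.extraJavaOptions=${JDWP_OPTS}"

# Print the command for review; remove the leading "echo" to submit the job.
echo gcloud dataproc jobs submit spark \
  --cluster="${CLUSTER_NAME}" \
  --region="${REGION}" \
  --class="${MAIN_CLASS}" \
  --jars="${JAR}" \
  --properties="${PROPERTIES}"
```

With `suspend=y` the job will appear to hang after submission until a debugger connects, which is the intended behaviour here.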

Chances are your cluster is on a private network and you need to create an SSH tunnel to the REMOTE_PORT. If that's not the case, you're lucky: you just need to connect from your IDE to the worker's public IP on the specified REMOTE_PORT.

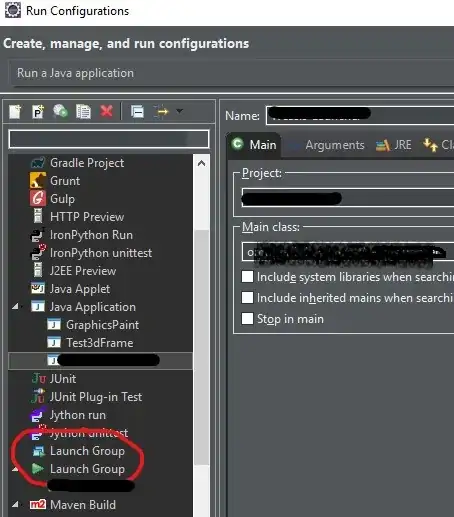

Using IntelliJ it would be like this:

where worker-ip is the IP of the worker that is listening (I've used 9094 as the port this time). In my attempts it was always worker number 0, but you can connect to the worker and check whether a process is listening with `netstat -tulnp | grep REMOTE_PORT`.
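A plain `grep REMOTE_PORT` can also match a PID or a longer port number that happens to contain the same digits. As a minimal sketch, the check can be tightened with a small helper; `has_listener` is a hypothetical name, and the sample line only imitates netstat's output format so the snippet can be tried without a cluster.

```shell
# Hypothetical helper: succeeds if netstat-style output on stdin contains a
# LISTEN socket bound to the given port.
has_listener() {
  # Require ":PORT" followed by whitespace, so 9094 does not match
  # 19094, 90940, or a PID such as 9094/java.
  grep -Eq ":$1[[:space:]].*LISTEN"
}

REMOTE_PORT=9094  # the port used in the example above

# On the worker you would run something like:
#   netstat -tln | has_listener "$REMOTE_PORT" && echo "driver is waiting"

# Offline demonstration with a sample line in netstat's format:
sample='tcp        0      0 0.0.0.0:9094       0.0.0.0:*       LISTEN      1234/java'
if printf '%s\n' "$sample" | has_listener "$REMOTE_PORT"; then
  echo "port ${REMOTE_PORT} is listening"
fi
```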

If for whatever reason your cluster does not have a public IP, you need to set up an SSH tunnel from your local machine to the worker. After specifying your ZONE and PROJECT, create a tunnel to REMOTE_PORT:

gcloud compute ssh CLUSTER_NAME-w-0 --project=$PROJECT --zone=$ZONE -- -4 -N -L LOCAL_PORT:CLUSTER_NAME-w-0:REMOTE_PORT

Then point your IDE's debug configuration to host=localhost (127.0.0.1) and port=LOCAL_PORT.

- I got this error when trying this method (using the Dataproc command line and adding the argument `--properties spark.driver.extraJavaOptions=-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=9094`): `ERROR: JDWP Non-server transport dt_socket must have a connection address specified through the 'address=' option` – whatsnext Jul 28 '20 at 23:10

- Had the same issue. The problem is that the comma is the default delimiter between key-value pairs in `--properties`, so the JDWP options get split. You can change this by specifying a different delimiter (see https://cloud.google.com/dataproc/docs/concepts/configuring-clusters/cluster-properties): `--properties ^#^spark.driver.extraJavaOptions=-agentlib:jdwp=transport=dt_socket,server=y,suspend=y,address=13337` – Maksim Gayduk Mar 28 '22 at 09:25