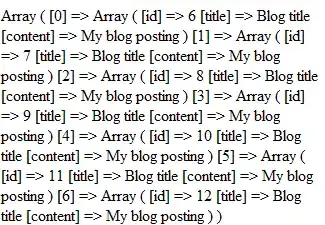

I am scraping a website table form https://csr.gov.in/companyprofile.php?year=FY+2015-16&CIN=L00000CH1990PLC010573 but I am not getting the exact result I am looking for. I want 11 columns from this link, "company name", "Class", "State", "Company Type", "RoC", "Sub Category", "Listing Status". These are 7 columns and after that you can see an expand button " CSR Details of FY 2017-18" when you will click on that button you will get 4 more columns "Average Net Profit", "CSR Prescribed Expenditure", "CSR Spent", "Local Area Spent". I want all these columns in csv file. I wrote a code and it is not working properly. I am attaching an Image of result for refference. and here is my code. please help to get these data.

from selenium import webdriver

import pandas as pd

from bs4 import BeautifulSoup

from urllib.request import urlopen

import requests

import csv

driver = webdriver.Chrome()

url_file = "csrdata.txt"

with open(url_file, "r") as url:

url_pages = url.read()

# we need to split each urls into lists to make it iterable

pages = url_pages.split("\n") # Split by lines using \n

data = []

# now we run a for loop to visit the urls one by one

for single_page in pages:

driver.get(single_page)

r = requests.get(single_page)

soup = BeautifulSoup(r.content, 'html5lib')

driver.find_element_by_link_text("CSR Details of FY 2017-18").click()

table = driver.find_elements_by_xpath("//*[contains(@id,'colfy4')]")

about = table.__getitem__(0).text

x = about.split('\n')

print(x)

data.append(x)

df = pd.DataFrame(data)

print(df)

# write to csv

df.to_csv('csr.csv')