I am trying to write an MPI application to speed up a math algorithm on a computer cluster. Before that, I am doing some benchmarking, but the first results are not as good as expected.

The test application shows linear speedup with 4 cores, but using 5 or 6 cores does not speed it up any further. I am testing on the Odroid N2 platform, which has 6 cores; `nproc` also reports 6 available cores.

Am I missing some configuration? Or is my code not well prepared (it is based on one of the basic MPI examples)?

Is there any response time or synchronization time that should be taken into account?

Here are some measurements from my MPI-based application. I measured the total calculation time of a function:

- 1 core: 0.838052 s
- 2 cores: 0.438483 s
- 3 cores: 0.405501 s
- 4 cores: 0.416391 s
- 5 cores: 0.514472 s
- 6 cores: 0.435128 s
- 12 cores (4 cores on each of 3 N2 boards): 0.06867 s
- 18 cores (6 cores on each of 3 N2 boards): 0.152759 s

I also ran the benchmark on a Raspberry Pi 4 with 4 cores:

- 1 core: 1.51 s
- 2 cores: 0.75 s
- 3 cores: 0.69 s
- 4 cores: 0.67 s

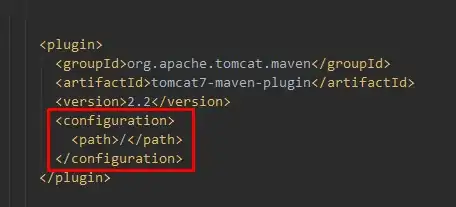

And this is my benchmark application:

```cpp
#include <chrono>
#include <mpi.h>

// Benchmark kernel: sums array[i] / 1000 over the local chunk.
// Note: the parameter j is currently unused.
int MyFun(int *array, int num_elements, int j)
{
    int result_overall = 0;
    for (int i = 0; i < num_elements; i++)
    {
        result_overall += array[i] / 1000;
    }
    return result_overall;
}

// Adds up the partial sums gathered from all ranks.
int compute_sum(int *sub_sums, int num_of_cpu)
{
    int sum = 0;
    for (int i = 0; i < num_of_cpu; i++)
    {
        sum += sub_sums[i];
    }
    return sum;
}

// Measuring performance (excerpt from main()): num_elements_per_proc is equal to 604800
if (world_rank == 0)
{
    startTime = std::chrono::high_resolution_clock::now();
}

// Compute the sum of your subset
int sub_sum = 0;
for (int j = 0; j < 1000; j++)
{
    sub_sum += MyFun(sub_intArray, num_elements_per_proc, world_rank);
}

// Every rank receives the partial sums of all ranks
MPI_Allgather(&sub_sum, 1, MPI_INT, sub_sums, 1, MPI_INT, MPI_COMM_WORLD);

int total_sum = compute_sum(sub_sums, num_of_cpu);

if (world_rank == 0)
{
    elapsedTime = std::chrono::high_resolution_clock::now() - startTime;
    timer = elapsedTime.count();
}
```

I build it with the `-O3` optimization level.

UPDATE: new measurements:

- 60480 samples, MyFun called 100000 times: 1.47 -> 0.74 -> 0.48 -> 0.36

- 6048 samples, MyFun called 1000000 times: 1.43 -> 0.7 -> 0.47 -> 0.35

- 6048 samples, MyFun called 10000000 times: 14.43 -> 7.08 -> 4.72 -> 3.59
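Counting samples × calls for each configuration shows that the first two rows do the same total amount of kernel work, while the third does ten times more, which is consistent with its timings being roughly ten times larger (e.g. 14.43 vs. 1.43 for the first value):

$$
\begin{aligned}
60480 \times 100\,000 &= 6.048 \times 10^{9} \\
6048 \times 1\,000\,000 &= 6.048 \times 10^{9} \\
6048 \times 10\,000\,000 &= 6.048 \times 10^{10}
\end{aligned}
$$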

UPDATE2:

By the way, this is what I get when I list the CPU info in Linux:

Is this normal? The quad-core A73 cluster is not listed, and it reports two sockets with 3 cores each.

And here is the CPU utilization reported by sar:

It seems that all of the cores are utilized.


I created some plots of the speedup:

It seems that computing with float instead of int helps a bit, but cores 5 and 6 still do not help much. I also think memory bandwidth is not the problem. Is this normal behavior when all CPUs are loaded equally on a big.LITTLE architecture?