Thank you for the quick answers; I really appreciate it. Now I am facing another problem: I let the script run overnight via `nohup` and found the following in the logs:

```
[1] "DB PROD Connected"
[1] "DB PROD Connected"
[1] "Getting RAW data"
[1] "Maximum forecasting horizon is 52, fetching weekly data"
[1] "Removing duplicates if we have them"
[1] "Original data has 1860590 rows"
[1] "Data without duplicates has 1837995 rows"
`summarise()` regrouping output by 'A', 'B' (override with `.groups` argument)
[1] "Removing non active customers"
[1] "Data without duplicates and without active customers has 1654483 rows"
0.398 sec elapsed
[1] "Removing customers with last data older than 1.5 years"
[1] "Data without duplicates, customers that are not active and old customers has 1268610 rows"
0.223 sec elapsed
[1] "Augmenting data"
12.103 sec elapsed
[1] "Creating tsibble"
7.185 sec elapsed
[1] "Filling gaps for not breaking groups"
9.568 sec elapsed
[1] "Training theta models for forecasting horizon 52"
[1] "Using 12 sessions from as future::plan()"
Repacking large object
[1] "Training auto_arima models for forecasting horizon 52"
[1] "Using 12 sessions from as future::plan()"
```

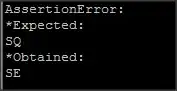

```
Error: target auto_arima failed.
diagnose(auto_arima)error$message:
  object 'ts_models' not found
diagnose(auto_arima)error$calls:
  1. └─global::trainModels(...)
In addition: Warning message:
9 errors (2 unique) encountered for theta
[3] function cannot be evaluated at initial parameters
[6] Not enough data to estimate this ETS model.
Execution halted
```
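The theta warnings in the log (`Not enough data to estimate this ETS model`) suggest that some series are too short for a seasonal model. A minimal sketch of dropping short series before fitting, assuming a long data frame keyed by the hypothetical columns `A` and `B` (the grouping columns named in the `summarise()` message) and an arbitrary threshold of two years of weekly observations; the minimum a seasonal THETA/ETS fit actually needs depends on the model and the period:

```r
library(dplyr)

min_obs <- 2 * 52  # assumed threshold: two years of weekly observations

# Toy long-format data: series (A = 1, B = "x") has 150 weekly values,
# series (A = 2, B = "y") only 30.
set.seed(1)
toy <- data.frame(
  A = c(rep(1, 150), rep(2, 30)),
  B = c(rep("x", 150), rep("y", 30)),
  snsr_val_clean = rnorm(180),
  stringsAsFactors = FALSE
)

# Count non-missing values per series, so rows added as NA by gap filling are ignored.
long_enough <- toy %>%
  group_by(A, B) %>%
  filter(sum(!is.na(snsr_val_clean)) >= min_obs) %>%
  ungroup()

# Keys of the series that were dropped, useful for logging.
dropped <- anti_join(distinct(toy, A, B), distinct(long_enough, A, B), by = c("A", "B"))
print(dropped)
```

Counting non-missing values rather than rows matters if the gap-filling step inserts `NA` rows, because those rows would otherwise make a short series look long enough.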

`ts_models` is the object created in my training script; it is what my function `trainModels()` returns. Could it be that the `input_data` argument is being cleaned up somewhere, and that is why it fails?
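A likely cause is visible in the log itself: the line `Training auto_arima models` prints `model_type`, so the target passed `"auto_arima"`, while `trainModels()` tests `model_type == "auto.arima"`. When no `if`/`else if` branch matches, `ts_models` is never assigned and `return(ts_models)` raises exactly `object 'ts_models' not found`, whatever `input_data` contains. The base-R sketch below reproduces that with a hypothetical `pick_model_old()`, and shows a hypothetical `pick_model()` that uses `switch()` with a final `stop()` so an unexpected `model_type` fails with a clear message (the strings stand in for the real model specifications):

```r
# Mirrors the structure of trainModels(): an if/else-if chain with no final else.
pick_model_old <- function(model_type) {
  if (model_type == "theta") {
    ts_models <- "THETA spec"
  } else if (model_type == "auto.arima") {
    ts_models <- "ARIMA spec"
  }
  ts_models  # never assigned when no branch matched
}

# Same dispatch with switch(): the unnamed last argument is the fallback,
# so an unknown model_type stops with a descriptive message.
pick_model <- function(model_type) {
  switch(model_type,
    theta = "THETA spec",
    auto_arima = ,            # empty value falls through to the next label
    auto.arima = "ARIMA spec",
    stop("Unknown model_type: '", model_type, "'", call. = FALSE)
  )
}

msg <- tryCatch(pick_model_old("auto_arima"), error = conditionMessage)
print(msg)
# [1] "object 'ts_models' not found"
```

The same guard can be added to the real function as a final `else stop(...)` in each chain.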

Another question: for some reason my model does not get saved after training the theta models. Is there a way to tell drake not to move on to the next model until it has calculated the accuracy of the current one and saved the `.qs` file?
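On the ordering question: drake orders targets by the dependencies it detects in each command, so mentioning an upstream target by name inside a command makes drake build that target first. A minimal sketch under these assumptions: `train_stub()`, `accuracy_stub()` and `save_model()` are hypothetical stand-ins for the real training, accuracy and saving steps, and `saveRDS()` replaces `qs::qsave()` so the sketch runs without the qs package:

```r
library(drake)

# Hypothetical stand-ins for trainModels(), the accuracy step and the .qs export.
train_stub <- function(model_type) list(model_type = model_type)
accuracy_stub <- function(fit) {
  data.frame(model = fit$model_type, rmse = NA_real_, stringsAsFactors = FALSE)
}
save_model <- function(fit, path) {
  saveRDS(fit, path)  # the real pipeline would call qs::qsave() here
  path
}

out_dir <- tempfile("models_")
dir.create(out_dir)
theta_path <- file.path(out_dir, "theta.rds")

model_plan <- drake_plan(
  theta = train_stub("theta"),
  theta_accuracy = accuracy_stub(theta),
  # file_out() declares the file this target writes; !! inlines the path into the command.
  theta_saved = save_model(theta, file_out(!!theta_path)),
  auto_arima = {
    # Referencing these targets makes auto_arima depend on them,
    # so theta's accuracy and saved file are built before ARIMA starts.
    theta_accuracy
    theta_saved
    train_stub("auto_arima")
  }
)

cache <- new_cache(file.path(out_dir, "cache"))
make(model_plan, cache = cache, verbose = 0)
```

With this dependency, a failure in `auto_arima` happens only after `theta_accuracy` and the saved file already exist. Separately, `make(plan, keep_going = TRUE)` makes drake keep building targets that do not depend on a failed one instead of stopping at the first error. Recent drake releases also document `target(command, format = "qs")` for storing a target's value with qs; check that your installed version supports it.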

My training function is as follows:

```r
library(dplyr)  # %>%
library(fable)  # ARIMA(), THETA(), TSLM(); also attaches fabletools (model(), combination_model())

trainModels <- function(input_data, max_forecast_horizon, model_type, max_multisession_cores) {
  options(future.globals.maxSize = 1500000000)  # max total size (bytes) of globals exported to each future
  future::plan(future::multisession, workers = max_multisession_cores)  # breaking infrastructure once again ;)
  set.seed(666)  # reproducibility

  if (max_forecast_horizon <= 104) {
    print(paste0("Training ", model_type, " models for forecasting horizon ", max_forecast_horizon))
    print(paste0("Using ", max_multisession_cores, " sessions from as future::plan()"))

    if (model_type == "prophet_multiplicative") {
      ts_models <- input_data %>% model(prophet = fable.prophet::prophet(snsr_val_clean ~ season("week", 2, type = "multiplicative") +
                                                                           season("month", 2, type = "multiplicative")))
    } else if (model_type == "prophet_additive") {
      ts_models <- input_data %>% model(prophet = fable.prophet::prophet(snsr_val_clean ~ season("week", 2, type = "additive") +
                                                                           season("month", 2, type = "additive")))
    } else if (model_type == "auto.arima") {
      ts_models <- input_data %>% model(auto_arima = ARIMA(snsr_val_clean))
    } else if (model_type == "arima_with_yearly_fourier_components") {
      ts_models <- input_data %>% model(auto_arima_yf = ARIMA(snsr_val_clean ~ fourier("year", K = 2)))
    } else if (model_type == "arima_with_monthly_fourier_components") {
      ts_models <- input_data %>% model(auto_arima_mf = ARIMA(snsr_val_clean ~ fourier("month", K = 2)))
    } else if (model_type == "regression_with_arima_errors") {
      ts_models <- input_data %>% model(auto_arima_mf_reg = ARIMA(snsr_val_clean ~ month + year + quarter + qday + yday + week))
    } else if (model_type == "tslm") {
      ts_models <- input_data %>% model(tslm_reg_all = TSLM(snsr_val_clean ~ year + quarter + month + day + qday + yday + week + trend()))
    } else if (model_type == "theta") {
      ts_models <- input_data %>% model(theta = THETA(snsr_val_clean ~ season()))
    } else if (model_type == "ensemble") {
      # Each model definition is a separate argument of combination_model().
      ts_models <- input_data %>% model(ensemble = combination_model(
        ARIMA(snsr_val_clean),
        ARIMA(snsr_val_clean ~ fourier("month", K = 2)),
        fable.prophet::prophet(snsr_val_clean ~ season("week", 2, type = "multiplicative") +
                                 season("month", 2, type = "multiplicative")),
        theta = THETA(snsr_val_clean ~ season()),
        tslm_reg_all = TSLM(snsr_val_clean ~ year + quarter + month + day + qday + yday + week + trend())
      ))
    }
  } else if (max_forecast_horizon > 104) {
    print(paste0("Training ", model_type, " models for forecasting horizon ", max_forecast_horizon))
    print(paste0("Using ", max_multisession_cores, " sessions from as future::plan()"))

    if (model_type == "prophet_multiplicative") {
      ts_models <- input_data %>% model(prophet = fable.prophet::prophet(snsr_val_clean ~ season("month", 2, type = "multiplicative") +
                                                                           season("year", 2, type = "multiplicative")))
    } else if (model_type == "prophet_additive") {
      ts_models <- input_data %>% model(prophet = fable.prophet::prophet(snsr_val_clean ~ season("month", 2, type = "additive") +
                                                                           season("year", 2, type = "additive")))
    } else if (model_type == "auto.arima") {
      ts_models <- input_data %>% model(auto_arima = ARIMA(snsr_val_clean))
    } else if (model_type == "arima_with_yearly_fourier_components") {
      ts_models <- input_data %>% model(auto_arima_yf = ARIMA(snsr_val_clean ~ fourier("year", K = 2)))
    } else if (model_type == "arima_with_monthly_fourier_components") {
      ts_models <- input_data %>% model(auto_arima_mf = ARIMA(snsr_val_clean ~ fourier("month", K = 2)))
    } else if (model_type == "regression_with_arima_errors") {
      ts_models <- input_data %>% model(auto_arima_mf_reg = ARIMA(snsr_val_clean ~ month + year + quarter + qday + yday))
    } else if (model_type == "tslm") {
      ts_models <- input_data %>% model(tslm_reg_all = TSLM(snsr_val_clean ~ year + quarter + month + day + qday + yday + trend()))
    } else if (model_type == "theta") {
      ts_models <- input_data %>% model(theta = THETA(snsr_val_clean ~ season()))
    } else if (model_type == "ensemble") {
      # Each model definition is a separate argument of combination_model().
      ts_models <- input_data %>% model(ensemble = combination_model(
        ARIMA(snsr_val_clean),
        ARIMA(snsr_val_clean ~ fourier("month", K = 2)),
        fable.prophet::prophet(snsr_val_clean ~ season("month", 2, type = "multiplicative") +
                                 season("year", 2, type = "multiplicative")),
        theta = THETA(snsr_val_clean ~ season()),
        tslm_reg_all = TSLM(snsr_val_clean ~ year + quarter + month + day + qday + yday + trend())
      ))
    }
  }

  return(ts_models)
}
```

BR

/E