Background:

We're trying to migrate our API Gateway from REST to gRPC. The Backend Team will consume the API Gateway over REST, while the API Gateway will communicate with the microservices over gRPC. Our API Gateway is built with the Tornado Python framework and Gunicorn, and uses tornado.curl_httpclient.CurlAsyncHTTPClient to enable async/Future handling for each endpoint. Each endpoint calls the microservices using unary RPCs, and the gRPC stub returns a Future.
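In grpcio, `stub.SomeMethod.future(request)` returns a `grpc.Future`, which a Tornado coroutine cannot await directly. Below is a minimal sketch of one way to bridge it. It assumes Python 3.7+ and Tornado 5+ (which runs on the asyncio event loop). It relies only on `add_done_callback`, `cancelled`, `exception`, and `result`, which both `grpc.Future` and `concurrent.futures.Future` provide. The helper name `to_asyncio_future` is hypothetical and is not part of grpcio or Tornado.

```python
import asyncio
import concurrent.futures


def to_asyncio_future(source, loop=None):
    """Wrap a callback-style future (e.g. a grpc.Future) in an asyncio.Future.

    Hypothetical helper: `source` only needs add_done_callback(), cancelled(),
    exception() and result(), which grpc.Future and
    concurrent.futures.Future both provide.
    """
    loop = loop or asyncio.get_running_loop()
    target = loop.create_future()

    def _copy_state(done):
        # Runs on the event loop thread, so touching `target` is safe here.
        if target.cancelled():
            return
        if done.cancelled():
            target.cancel()
            return
        exc = done.exception()
        if exc is not None:
            target.set_exception(exc)
        else:
            target.set_result(done.result())

    def _on_done(done):
        # The callback may fire on a gRPC/worker thread, so hand the
        # outcome back to the event loop thread-safely.
        loop.call_soon_threadsafe(_copy_state, done)

    source.add_done_callback(_on_done)
    return target
```

Inside a Tornado handler, this would be used roughly as `reply = await to_asyncio_future(stub.GetPayload.future(request))`. Here `GetPayload` and `request` are placeholders for your own generated method and message.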

Before fully migrating to gRPC, we're comparing gRPC and REST performance. Here are the details you might need to know:

- We have 3 endpoints to test: /0, /1, and /2, each returning a single string payload. The payload sizes are 100KB, 1MB, and 4MB respectively. The messages are created when the instance starts, so each endpoint only needs to retrieve its message.

- Concurrency = 1, 4, and 10 for each endpoint.

- gRPC server thread pool max workers = 1, and Gunicorn workers = 16.

- We're using APIB for load testing.

- All load tests are run on a GCP VM instance. The machine spec is: Intel Broadwell, n1-standard-1 (1 vCPU, 3.75 GB memory), OS: Debian 9.

- The REST and gRPC implementations share a similar code structure and the same business logic.
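One detail worth checking for the 4MB endpoint is grpcio's default maximum receive message size, which is 4 MiB (4,194,304 bytes). A protobuf message also carries a few bytes of tag and length prefix on top of the string itself. The sketch below works through that arithmetic. It assumes the response is a proto3 message with a single `string` field numbered 1, and that "100KB/1MB/4MB" mean binary units. Neither assumption is stated in the post.

```python
GRPC_DEFAULT_MAX_RECV = 4 * 1024 * 1024  # grpcio default max receive message size


def varint_size(value: int) -> int:
    """Number of bytes protobuf uses to encode a non-negative varint."""
    size = 1
    while value >= 0x80:
        value >>= 7
        size += 1
    return size


def single_string_message_size(payload_len: int, field_number: int = 1) -> int:
    """Encoded size of a message holding one length-delimited (string) field."""
    tag = (field_number << 3) | 2  # wire type 2 = length-delimited
    return varint_size(tag) + varint_size(payload_len) + payload_len


for label, n in [("100KB", 100 * 1024), ("1MB", 1024 * 1024), ("4MB", 4 * 1024 * 1024)]:
    size = single_string_message_size(n)
    status = "exceeds default limit" if size > GRPC_DEFAULT_MAX_RECV else "ok"
    print(label, size, status)
```

Under these assumptions, the 4 MiB string encodes to 4,194,309 bytes, just over the default. The receiving channel's `grpc.max_receive_message_length` option would then have to be raised.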

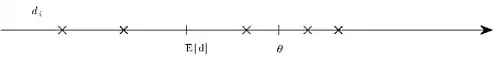

Conclusion: the higher the concurrency and payload size, the slower gRPC becomes, until it is eventually slower than REST.

Question:

- Is gRPC, using unary calls, incapable of handling large payloads and high concurrency compared to REST?

- Is there any way to make gRPC faster than REST?

- Is there anything fundamental that I missed?

Here are a few things I have tried:

- GZIP compression from grpcio. Result: it became slower than before.

- Using the `GRPC_ARG_KEEPALIVE_PERMIT_WITHOUT_CALLS` and `GRPC_ARG_KEEPALIVE_TIMEOUT_MS` options on the stub and server config. Result: no change in performance.

- Changing the gRPC server max workers to 10000. Result: no change in performance.

- Changing Gunicorn workers to 1. Result: no change in performance.
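For reference, grpcio takes the server's thread pool as its first argument, e.g. `grpc.server(concurrent.futures.ThreadPoolExecutor(max_workers=1))`. With `max_workers=1`, the microservice runs at most one handler at a time, and concurrent requests queue behind it. The stdlib-only sketch below involves no gRPC. It submits a batch of calls to a pool and reports how many handler calls overlapped. `handler` is a hypothetical stand-in for an RPC method, and `time.sleep` releases the GIL, so it models waiting rather than CPU-bound work.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def max_overlap(max_workers: int, requests: int, work_s: float = 0.02) -> int:
    """Submit `requests` calls at once and report the peak number running together."""
    lock = threading.Lock()
    active = 0
    peak = 0

    def handler():
        # Hypothetical stand-in for an RPC handler taking `work_s` seconds.
        nonlocal active, peak
        with lock:
            active += 1
            peak = max(peak, active)
        time.sleep(work_s)  # models waiting, not CPU-bound serialization
        with lock:
            active -= 1

    # Exiting the with-block waits for every submitted call to finish.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for _ in range(requests):
            pool.submit(handler)
    return peak


for workers in (1, 4, 10):
    print(workers, max_overlap(workers, requests=10))
```

With one worker, the peak is always 1, so at concurrency 10 the last request waits for the nine ahead of it.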

What I haven't tried yet:

- Using streaming RPCs
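If streaming is tried, one common pattern is server-side streaming. The large string is split into fixed-size chunks, each chunk is sent as its own message, and the client reassembles them. The sketch below covers only the chunking and reassembly logic in plain Python. In a grpcio response-streaming handler, the generator would yield one response message per chunk. The 64 KiB chunk size and the function names are assumptions, not values measured against this setup.

```python
from typing import Iterable, Iterator

CHUNK_SIZE = 64 * 1024  # hypothetical chunk size; tune by measurement


def iter_chunks(payload: str, chunk_size: int = CHUNK_SIZE) -> Iterator[str]:
    """Yield successive slices of `payload`; a streaming handler would wrap each in a message."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, len(payload), chunk_size):
        yield payload[start:start + chunk_size]


def reassemble(chunks: Iterable[str]) -> str:
    """Client side: join streamed chunks back into the original string."""
    return "".join(chunks)


payload = "x" * (1024 * 1024)  # stand-in for the pre-built 1MB payload
chunks = list(iter_chunks(payload))
print(len(chunks), reassemble(chunks) == payload)  # prints "16 True"
```

In a servicer, the streaming method body would be roughly `for piece in iter_chunks(PAYLOAD): yield PayloadChunk(data=piece)`. `PayloadChunk` and `PAYLOAD` are hypothetical names.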

Any help is appreciated. Thank you.