I am training BERT (from Hugging Face) for sentiment analysis, which is an NLP task.

My question refers to the learning rate.

EPOCHS = 5

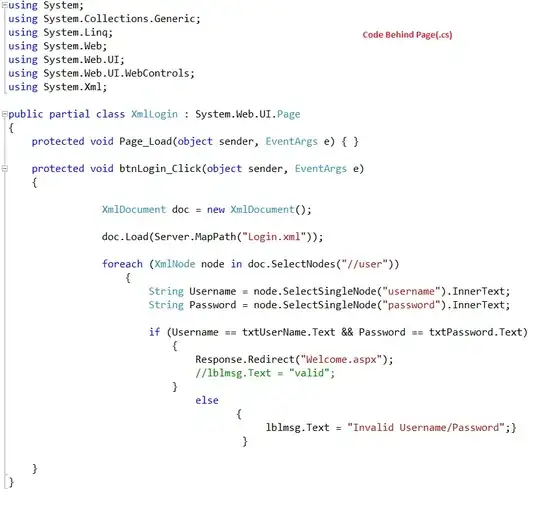

optimizer = AdamW(model.parameters(), lr=1e-3, correct_bias=True)

total_steps = len(train_data_loader) * EPOCHS

scheduler = get_linear_schedule_with_warmup(

optimizer,

num_warmup_steps=0,

num_training_steps=total_steps

)

loss_fn = nn.CrossEntropyLoss().to(device)

Can you please explain how to read 1e-3?

Is this the density of steps, or is it a value that decays?

If the latter, is the decay linear?
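For reference, `1e-3` is scientific notation for 0.001. In the code above it is the peak learning rate passed to the optimizer, and `get_linear_schedule_with_warmup` scales it by a multiplier that, with `num_warmup_steps=0`, falls linearly from 1 to 0 over `num_training_steps`. Below is a minimal pure-Python sketch of that multiplier, assuming it follows the linear warmup-then-decay rule used by Hugging Face's scheduler; the function name `linear_warmup_factor` and the batch count are hypothetical.

```python
def linear_warmup_factor(step: int, num_warmup_steps: int, num_training_steps: int) -> float:
    """Multiplier applied to the base learning rate at a given optimizer step.

    Ramps up linearly from 0 during warmup, then decays linearly to 0
    at num_training_steps (never going below 0).
    """
    if step < num_warmup_steps:
        return step / max(1, num_warmup_steps)
    return max(0.0, (num_training_steps - step) / max(1, num_training_steps - num_warmup_steps))


base_lr = 1e-3           # same value as lr=1e-3 in the optimizer; 1e-3 == 0.001
batches_per_epoch = 100  # hypothetical len(train_data_loader)
epochs = 5
total_steps = batches_per_epoch * epochs

# Effective learning rate at a few points during training (no warmup).
for step in (0, 100, 250, 400, 500):
    lr = base_lr * linear_warmup_factor(step, num_warmup_steps=0, num_training_steps=total_steps)
    print(f"step {step:3d}: lr = {lr:.6f}")
```

With these assumed numbers the rate starts at 0.001, is 0.0005 halfway through training, and reaches 0 at the last step, so the decay is linear in the number of optimizer steps, not per epoch.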

If I train with a value of 3e-5, which Hugging Face recommends for NLP tasks, my model overfits very quickly: the training loss decreases to a minimum while the validation loss increases.
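Training loss still falling while validation loss rises is the usual signature of overfitting. A minimal sketch of how one might locate that point from recorded per-epoch losses, e.g. to decide which checkpoint to keep; the function names and the loss values are hypothetical and are not the results from this post.

```python
from typing import Optional, Sequence


def first_overfitting_epoch(train_losses: Sequence[float], val_losses: Sequence[float]) -> Optional[int]:
    """Return the first epoch (0-based) where training loss improves but
    validation loss gets worse compared with the previous epoch, else None."""
    for epoch in range(1, min(len(train_losses), len(val_losses))):
        train_improved = train_losses[epoch] < train_losses[epoch - 1]
        val_worsened = val_losses[epoch] > val_losses[epoch - 1]
        if train_improved and val_worsened:
            return epoch
    return None


def best_epoch(val_losses: Sequence[float]) -> int:
    """Epoch with the lowest validation loss (a natural checkpoint to keep)."""
    return min(range(len(val_losses)), key=lambda i: val_losses[i])


# Hypothetical per-epoch losses, for illustration only.
train = [0.45, 0.25, 0.12, 0.06, 0.03]
val = [0.38, 0.36, 0.41, 0.48, 0.55]
print(first_overfitting_epoch(train, val))  # 2
print(best_epoch(val))  # 1
```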

Learning rate 3e-5:

If I train with a value of 1e-2, the validation loss improves steadily, but the validation accuracy does not improve after the first epoch (see picture). Why does the validation accuracy not increase even though the loss falls? Isn't that a contradiction? I thought these two metrics were interpretations of each other.
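Validation loss and validation accuracy are related but not interchangeable. Cross-entropy also rewards the model for becoming more confident in predictions it already gets right, while accuracy only checks whether the highest-scoring class is correct, so the loss can fall while accuracy stays flat. A small pure-Python sketch with made-up two-class logits (not data from this post) illustrates this; `mean_cross_entropy` is meant to mirror what `nn.CrossEntropyLoss` computes with its default mean reduction.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_cross_entropy(batch_logits, labels) -> float:
    """Average negative log-probability assigned to the true class."""
    return sum(-math.log(softmax(z)[y]) for z, y in zip(batch_logits, labels)) / len(labels)


def accuracy(batch_logits, labels) -> float:
    """Fraction of examples whose highest logit is the true class."""
    correct = sum(max(range(len(z)), key=lambda k: z[k]) == y for z, y in zip(batch_logits, labels))
    return correct / len(labels)


labels = [0, 1, 1, 0]

# Hypothetical logits at two epochs: the same 3 of 4 examples are classified
# correctly, but the model is more confident on the ones it gets right.
epoch_1 = [[0.6, 0.4], [0.3, 0.7], [0.6, 0.4], [0.55, 0.45]]
epoch_2 = [[2.0, -1.0], [-1.5, 2.5], [0.6, 0.4], [1.8, -1.2]]

for name, logits in (("epoch 1", epoch_1), ("epoch 2", epoch_2)):
    print(name, f"loss={mean_cross_entropy(logits, labels):.3f}", f"acc={accuracy(logits, labels):.2f}")
```

Both epochs have an accuracy of 0.75, yet the mean cross-entropy drops from roughly 0.64 to roughly 0.23, because only the confidence on already-correct examples changed.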

Learning rate 1e-2:

What would you recommend?