This sql query normally only takes a few minutes to run:

update import_parts ip

set part_manufacturer_id = pslc.part_manufacturer_id

from parts.part_supplier_line_codes pslc

where trim(lower(ip.line_code)) = trim(lower(pslc.supplier_line_code))

and (ip.status is null or ip.status != '6')

and ip.distributor_id = pslc.distributor_id

and ip.distributor_id = 196;

But I noticed it gets stuck sometimes and being auto-canceled by a 2 hour statement_timeout. I noticed a couple of times that when it gets stuck, autovacuum is running and autovacuum also takes a long time to finish running. Here's one instance where the update query and autovacuum is both running and they both takes too long to finish running:

^ In this instance, autovacuum finished running in about an hour and the update query finished running in almost 2 hours. In other instances, the update query exceeds the 2 hour statement_timeout so it gets auto-canceled.

Now my question is, is autovacuum (VACUUM) the reason why the update query is getting stuck or takes hours to finish running? If yes, what can I do to prevent the update query to be stuck or become so slow? If no, what do you think is causing the update query to be stuck or become so slow?

We're using PostgreSQL 9.6.15

UPDATE 1

I checked if our RDS instance is running out of server resources. Our instance size is db.t2.medium (2 vCPU, 4GB RAM, 1000 IOPS, storage type is provisioned iops SSD).

Here's the cloudwatch metrics for the last 3 days. Take note that in the last 3 days, the update sql query above got stuck multiple times.

UPDATE 2

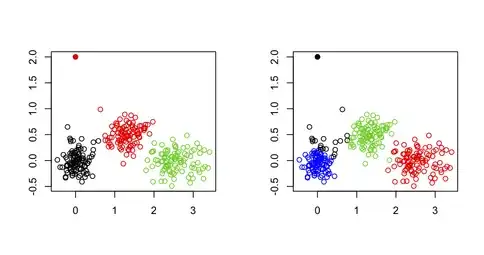

The only active locks while the update query and autovacuum was running:

^ The locks highlighted with red are the locks created by autovacuum. The locks highlighted with green are the locks created by the update query.

Here's the list of all the db connections while the update query and autovacuum was running:

The one highlighted with red is the autovacuum. The one highlighted with green is the update query.

Here's the result of EXPLAIN for the update query:

parts.part_supplier_line_codes table only contains 2758 rows. There are no 2 or more rows on the table that has the same supplier_line_code + distributor_id.

import_parts table contains 12.6 million rows.

UPDATE 3

Here's the result of EXPLAIN (ANALYZE, BUFFERS):

EXPLAIN (ANALYZE, BUFFERS)

update import_parts ip

set part_manufacturer_id = pslc.part_manufacturer_id

from parts.part_supplier_line_codes pslc

where trim(lower(ip.line_code)) = trim(lower(pslc.supplier_line_code))

and (ip.status is null or ip.status != '6')

and ip.distributor_id = pslc.distributor_id

and ip.distributor_id = 196;

Update on import_parts ip (cost=2967312.95..3778109.36 rows=31167172 width=156) (actual time=151475.198..151475.198 rows=0 loops=1)

Buffers: shared hit=62369982 read=453357 dirtied=375348 written=315748, temp read=154212 written=307558

-> Merge Join (cost=2967312.95..3778109.36 rows=31167172 width=156) (actual time=37567.148..84208.239 rows=10326988 loops=1)

Merge Cond: ((btrim(lower((pslc.supplier_line_code)::text))) = (btrim(lower((ip.line_code)::text))))

Buffers: shared hit=94397 read=78007, temp read=154212 written=307558

-> Sort (cost=51.70..52.93 rows=493 width=17) (actual time=9.649..10.039 rows=494 loops=1)

Sort Key: (btrim(lower((pslc.supplier_line_code)::text)))

Sort Method: quicksort Memory: 63kB

Buffers: shared hit=7 read=11

-> Index Scan using index_part_supplier_line_codes_on_distributor_id on part_supplier_line_codes pslc (cost=0.28..29.65 rows=493 width=17) (actual time=2.926..8.677 rows=494 loops=1)

Index Cond: (distributor_id = 196)

Buffers: shared hit=2 read=11

-> Materialize (cost=2967261.25..3030480.67 rows=12643883 width=146) (actual time=37557.491..76400.550 rows=12642995 loops=1)

Buffers: shared hit=94390 read=77996, temp read=154212 written=307558

-> Sort (cost=2967261.25..2998870.96 rows=12643883 width=146) (actual time=37557.486..68337.525 rows=12642995 loops=1)

Sort Key: (btrim(lower((ip.line_code)::text)))

Sort Method: external merge Disk: 1233688kB

Buffers: shared hit=94390 read=77996, temp read=154212 written=154212

-> Seq Scan on import_parts ip (cost=0.00..362044.24 rows=12643883 width=146) (actual time=0.027..11903.240 rows=12643918 loops=1)

Filter: (((status IS NULL) OR ((status)::text <> '6'::text)) AND (distributor_id = 196))

Buffers: shared hit=94390 read=77996

Planning time: 0.169 ms

Execution time: 151561.250 ms

UPDATE 4

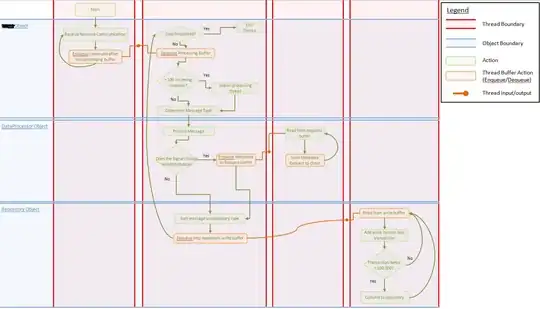

Given that our AWS RDS instance has a capacity of 1000 IOPS, looks like read iops on the screenshot below is too high? Is it possible that it's the reason why sometimes the update query is getting stuck and autovacuum is taking too long to finish running?

Read IOPS:

Read Latency:

Write Latency:

Swap Usage:

Queue Depth:

Result of EXPLAIN (ANALYZE, BUFFERS) after rebooting our AWS RDS instance:

EXPLAIN (ANALYZE, BUFFERS)

update import_parts ip

set part_manufacturer_id = pslc.part_manufacturer_id

from parts.part_supplier_line_codes pslc

where trim(lower(ip.line_code)) = trim(lower(pslc.supplier_line_code))

and (ip.status is null or ip.status != '6')

and ip.distributor_id = pslc.distributor_id

and ip.distributor_id = 196;

Update on import_parts ip (cost=3111484.57..3919788.11 rows=31071345 width=156) (actual time=180680.200..180680.200 rows=0 loops=1)

Buffers: shared hit=62263174 read=712018 dirtied=386277 written=223564, temp read=237087 written=390433

-> Merge Join (cost=3111484.57..3919788.11 rows=31071345 width=156) (actual time=64687.806..112959.396 rows=10326988 loops=1)

Merge Cond: ((btrim(lower((pslc.supplier_line_code)::text))) = (btrim(lower((ip.line_code)::text))))

Buffers: shared hit=5 read=325434, temp read=237087 written=390433

-> Sort (cost=58.61..59.85 rows=493 width=17) (actual time=4.238..5.549 rows=494 loops=1)

Sort Key: (btrim(lower((pslc.supplier_line_code)::text)))

Sort Method: quicksort Memory: 63kB

Buffers: shared hit=5 read=11

-> Bitmap Heap Scan on part_supplier_line_codes pslc (cost=7.40..36.56 rows=493 width=17) (actual time=2.582..3.242 rows=494 loops=1)

Recheck Cond: (distributor_id = 196)

Heap Blocks: exact=7

Buffers: shared read=11

-> Bitmap Index Scan on index_part_supplier_line_codes_on_distributor_id (cost=0.00..7.28 rows=493 width=0) (actual time=1.805..1.805 rows=494 loops=1)

Index Cond: (distributor_id = 196)

Buffers: shared read=4

-> Materialize (cost=3111425.95..3174450.99 rows=12605008 width=146) (actual time=64683.559..105123.024 rows=12642995 loops=1)

Buffers: shared read=325423, temp read=237087 written=390433

-> Sort (cost=3111425.95..3142938.47 rows=12605008 width=146) (actual time=64683.554..96764.494 rows=12642995 loops=1)

Sort Key: (btrim(lower((ip.line_code)::text)))

Sort Method: external merge Disk: 1233528kB

Buffers: shared read=325423, temp read=237087 written=237087

-> Seq Scan on import_parts ip (cost=0.00..514498.12 rows=12605008 width=146) (actual time=0.748..36768.509 rows=12643918 loops=1)

Filter: (((status IS NULL) OR ((status)::text <> '6'::text)) AND (distributor_id = 196))

Buffers: shared read=325423

Planning time: 23.127 ms

Execution time: 180803.124 ms

I rebooted the RDS instance to clear PostgreSQL's cache to see if there's a caching issue or not. I read somewhere that rebooting PostgreSQL will clear the db's cache.