I successfully converted a TensorFlow model to a TensorFlow Lite float16 model by following the "Post-training float16 quantization" guide.

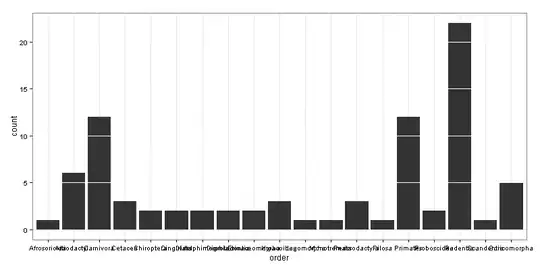

The image above is a diagram of the converted model.

I then ran it successfully on a MatePad Pro (Kirin 990) with my own C++ code.

The only NNAPI-specific code I added is the calls to SetAllowFp16PrecisionForFp32 and UseNNAPI before AllocateTensors:

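```cpp
// Allow FP32 operations to be computed with FP16 precision where possible.
m_interpreter->SetAllowFp16PrecisionForFp32(true);
// Hand supported operations to the Android Neural Networks API.
m_interpreter->UseNNAPI(true);
// Allocate tensors only after the execution options above are set.
m_interpreter->AllocateTensors();
```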

However, the performance is poor.
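To put a number on "poor", one option is to time repeated inference calls under each configuration. The sketch below is a hypothetical helper (not part of TensorFlow Lite) that takes a generic callable so it compiles without TFLite; the warm-up and run counts are arbitrary choices.

```cpp
#include <chrono>
#include <functional>

// Hypothetical helper: calls `fn` `warmup` times untimed (to exclude one-time
// setup costs), then `runs` times timed, and returns the mean wall-clock
// latency per call in milliseconds. Returns 0.0 when `runs` <= 0.
double MeasureMeanLatencyMs(const std::function<void()>& fn, int warmup, int runs) {
  for (int i = 0; i < warmup; ++i) fn();
  if (runs <= 0) return 0.0;
  const auto start = std::chrono::steady_clock::now();
  for (int i = 0; i < runs; ++i) fn();
  const auto end = std::chrono::steady_clock::now();
  const std::chrono::duration<double, std::milli> elapsed = end - start;
  return elapsed.count() / runs;
}
```

On the device, `fn` would be something like `[&] { m_interpreter->Invoke(); }`. Comparing the result with and without UseNNAPI(true) shows whether the nnapi-reference fallback is actually slower than the plain CPU path.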

I checked the logs with adb logcat and found that both armnn and liteadapter, which I believe are Huawei's NNAPI drivers, fail to support major operations such as CONV_2D, so nnapi-reference, the CPU implementation of NNAPI, executes them as a fallback.

The messages look like this:

```
AndroidNN: AnnOpConvParser::isSupport1_1(280)::"Conv para is model Input err"
```

Why do the NNAPI drivers other than nnapi-reference fail to support these operations?

How can I fix this?

I suspect that the Dequantize operations in the converted model should not be there, and that each operation should instead take float16 parameters directly.

I don't know whether my guess is right, and even if it is, I have no idea how to eliminate the Dequantize operations.
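For context on the Dequantize question: as far as I understand TensorFlow Lite's float16 post-training quantization, the weights are stored as FP16 and, when the model runs on the CPU, Dequantize ops expand them to FP32 before the ordinary float kernels consume them. I am not sure how each NNAPI driver treats those ops. The sketch below shows the per-element conversion such a Dequantize has to perform (standard IEEE 754 binary16 decoding); `HalfToFloat` is a hypothetical name, not a TFLite API.

```cpp
#include <cmath>
#include <cstdint>
#include <limits>

// Hypothetical helper: decodes one IEEE 754 binary16 value (raw bits) to float,
// i.e. the per-element work of a float16 -> float32 Dequantize.
float HalfToFloat(std::uint16_t h) {
  const bool negative = (h >> 15) & 0x1;
  const int exponent = (h >> 10) & 0x1F;  // 5-bit biased exponent (bias 15)
  const int mantissa = h & 0x3FF;         // 10 explicit fraction bits
  float value;
  if (exponent == 0) {
    // Zero or subnormal: mantissa * 2^-24.
    value = std::ldexp(static_cast<float>(mantissa), -24);
  } else if (exponent == 31) {
    // All-ones exponent encodes infinity (mantissa 0) or NaN.
    value = mantissa == 0 ? std::numeric_limits<float>::infinity()
                          : std::numeric_limits<float>::quiet_NaN();
  } else {
    // Normal: (1024 + mantissa) * 2^(exponent - 15 - 10).
    value = std::ldexp(static_cast<float>(mantissa | 0x400), exponent - 25);
  }
  return negative ? -value : value;
}
```

For example, `HalfToFloat(0x3C00)` is 1.0 and `HalfToFloat(0x7BFF)` is 65504, the largest finite FP16 value.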

(Of course, I also tried the float32 converted model. Its outputs differed considerably between SetAllowFp16PrecisionForFp32(false) and SetAllowFp16PrecisionForFp32(true).

So I concluded that float16 quantization is needed for NNAPI.)
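One possible contributor to that difference is FP16's reduced precision (11 significant bits, maximum finite value 65504); whether the driver really accumulates in FP16 is an assumption I could not confirm. The sketch below emulates FP16 rounding in software, ignoring FP16 subnormals and overflow, and shows how a long accumulation, like the sums inside CONV_2D, can stall: adding 1.0 ten thousand times gives 10000 in FP32 but stays at 2048 with FP16 rounding after every step. Both function names are hypothetical.

```cpp
#include <cmath>

// Hypothetical helper: rounds a float to the nearest value with FP16's 11
// significant bits (round-half-to-even under the default rounding mode).
// Simplification: ignores FP16 subnormals and overflow above 65504.
float RoundToFp16Precision(float x) {
  int exponent = 0;
  const float m = std::frexp(x, &exponent);                // x = m * 2^exponent, 0.5 <= |m| < 1
  const float scaled = std::nearbyint(std::ldexp(m, 11));  // keep 11 significant bits
  return std::ldexp(scaled, exponent - 11);
}

// Hypothetical helper: sums `count` copies of `term`; when `fp16` is true the
// running sum is rounded after every addition to mimic an FP16 accumulator.
float Accumulate(float term, int count, bool fp16) {
  float sum = 0.0f;
  for (int i = 0; i < count; ++i) {
    sum += term;
    if (fp16) sum = RoundToFp16Precision(sum);
  }
  return sum;
}
```

Once the FP16 sum reaches 2048, the spacing between representable values is 2, so 2048 + 1 rounds back to 2048 and further additions of 1.0 are lost.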

Here is a summary of my observations.

Assuming UseNNAPI(true):

- float32 model with SetAllowFp16PrecisionForFp32(true): liteadapter runs, but the output is wrong.

- float32 model with SetAllowFp16PrecisionForFp32(false): armnn runs as a fallback.

- float16 model with SetAllowFp16PrecisionForFp32(true or false): nnapi-reference runs as a fallback.

Any advice would be appreciated!