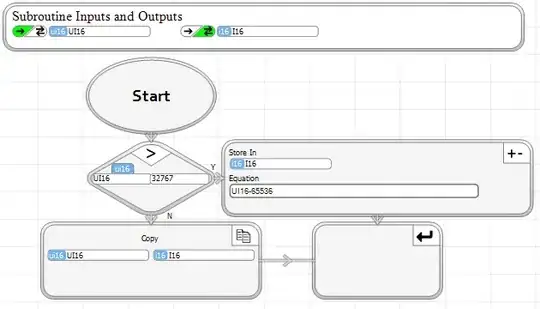

I am attempting to implement equation 16 from this paper:

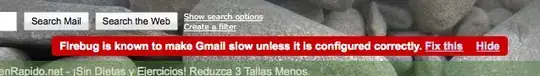

Equation 16 above is the viscous wave equation, and it is supposed to start big and die out over time, eventually approaching 0. However when I run my simulation it seems to blow up. If you look at the images below (iterations 0-4) it appears to be working correctly (i.e. the sinusoidal wave appears to be getting smaller, which is good). However, at ierations 5,6 and beyond it starts to blow up (you can see the y-axis representing pressure increasing by orders of magnitude).

Here is the output:

Here is the code that uses Finite Difference Method:

import numpy as np

import math

import matplotlib

import matplotlib.pyplot as plt

import time

x_mesh_param = 1000

# function that increments time steps (solves for the next state of p)

# returns 'p2'

# assuming no boundary conditions because we only care about what a hydrophone

# receives. Don't care about what happens to wave beyond hydrophone.

def iterate(p0,p1,x_mesh,dt,v,c0 ):

# step 3 on pg. 9

dx=x_mesh[1]-x_mesh[0]

p2 = np.zeros(p1.shape) # this is where we store next state of p

for i in range(1,len(x_mesh)-1):

p_txx = (p1[i+1]-p0[i+1]-2*(p1[i]-p0[i])+p1[i-1]-p0[i-1])/ (dt* dx**2)

p_xx = (p1[i+1]-2*p1[i]+p1[i-1]) / (dx**2)

p2[i] = (dt)**2 * (4.0/3 * v * p_txx + c0**2*p_xx)+2*p1[i]-p0[i]

# what happens at p2[0] (begin) and p2[-1] (end)

# forward difference (NO BOUNDARY conditions)

p_txx = (p1[2]-p0[2]-2*(p1[1]-p0[1])+p1[0]-p0[0])/(dt*dx**2)

p_xx = (p1[2]-2*p1[1]+p1[0]) / (dx**2)

#p2[0] = (dt)**2 * (4.0/3 * v * p_txx + c0**2*p_xx)+2*p1[0]-p0[0]

# taylor series

#p2[0]=1/(1-1/dt+1/(2*(dt)**2))* ((1-1/dt+1/((dt)**2))*p2[1]-p2[2]/(2*(dt**2)))

#p2[0] = p1[1] # NEUMANN

# if you comment out lines 34,37,39 you get Dirichlet Conditions

# backward difference

p_txx = (p1[-1]-p0[-1]-2*(p1[-2]-p0[-2])+p1[-3]-p0[-3])/(dt*dx**2)

p_xx = (p1[-1]-2*p1[-2]+p1[-3]) / (dx**2)

#p2[-1] = (dt)**2 * (4.0/3 * v * p_txx + c0**2*p_xx)+2*p1[-1]-p0[-1]

# Dirichlet if line 46 commented out

return p2

def firstIteration(p1,x_mesh,dt,v,c0 ):

# step 3 on pg. 9

dx=x_mesh[1]-x_mesh[0]

p2 = np.zeros(p1.shape) # this is where we store next state of p

for i in range(1,len(x_mesh)-1):

p_txx = 0

p_xx = (p1[i+1]-2*p1[i]+p1[i-1]) / (dx**2)

p2[i] = (dt)**2 * (4.0/3 * v * p_txx + c0**2*p_xx)+p1[i] # assuming p1[i]-p0[i]=0 (coming from assumption that d/dt (p(x,0)) =0 (initial cond. of motion of fluid)

# what happens at p2[0] (begin) and p2[-1] (end)

# forward difference (NO BOUNDARY conditions)

p_txx = 0

p_xx = (p1[2]-2*p1[1]+p1[0]) / (dx**2)

#p2[0] = (dt)**2 * (4.0/3 * v * p_txx + c0**2*p_xx)+p1[0]

# taylor series

#p2[0]=1/(1-1/dt+1/(2*(dt)**2))* ((1-1/dt+1/((dt)**2))*p2[1]-p2[2]/(2*(dt**2)))

#p2[0] = p1[1] # NEUMANN

# if you comment out lines 34,37,39 you get Dirichlet Conditions

# backward difference

p_txx = 0

p_xx = (p1[-1]-2*p1[-2]+p1[-3]) / (dx**2)

#p2[-1] = (dt)**2 * (4.0/3 * v * p_txx + c0**2*p_xx)+p1[-1]

return p2

def simulate():

states = []

L=100

#dt=.02

dt=0.001

v = .001/998

c0 = 1480

x_mesh = np.linspace(0,L,x_mesh_param)

state_initial = np.array([math.sin(x/20*math.pi) for x in x_mesh])

states.append(state_initial)

states.append(firstIteration(states[0], x_mesh,dt,v,c0 ))

for i in range(50):

new_state = iterate(states[-2], states[-1], x_mesh,dt,v,c0)

states.append(new_state)

return states

if __name__=="__main__":

states = simulate()

L=100

x_mesh = np.linspace(0,L,x_mesh_param)

counter=0

for s in states:

fig, ax = plt.subplots()

ax.plot(x_mesh, s)

ax.set(xlabel='distance (m)', ylabel='pressure (atm)',

title='Pressure vs. distance, iteration = '+str(counter))

ax.grid()

plt.show()

print counter

counter = counter + 1

You will see there is a main program that calls simulate(). Then simulate() calls firstIteration() which tries to handle boundary condition. Then the rest is taken care of by iterate(). I am basically using central, forward, and backward differences to compute derivatives of wave equation.

The main program simply prints out an entire figure at different time steps.