Background

We are relatively new to TensorFlow and are working on a deep-learning problem involving a video dataset. Because of the volume of data, we decided to preprocess the videos and store the frames as JPEGs in TFRecord files. We then plan to use tf.data.TFRecordDataset to feed the data to our model.

The videos have been split into segments of 16 frames each, and each segment is stored as a serialised tensor. Each frame is a 128x128 RGB image encoded as JPEG. Each serialised segment is stored, along with some metadata, as a serialised tf.train.Example in the TFRecords.

TensorFlow version: 2.1

Code

Below is the code we are using to create the tf.data.TFRecordDataset from the TFRecords. You can ignore the num and file fields.

import os
import math

import tensorflow as tf

# Corresponding changes are to be made here
# if the feature description in tf2_preprocessing.py
# is changed
feature_description = {
    'segment': tf.io.FixedLenFeature([], tf.string),
    'file': tf.io.FixedLenFeature([], tf.string),
    'num': tf.io.FixedLenFeature([], tf.int64)
}

def build_dataset(dir_path, batch_size=16, file_buffer=500*1024*1024,
                  shuffle_buffer=1024, label=1):
    '''Return a tf.data.Dataset based on all TFRecords in dir_path

    Args:
        dir_path: path to directory containing the TFRecords
        batch_size: size of batch, i.e. #training examples per element of the dataset
        file_buffer: for TFRecords, size in bytes
        shuffle_buffer: #examples to buffer while shuffling
        label: target label for the example
    '''
    # glob pattern for files
    file_pattern = os.path.join(dir_path, '*.tfrecord')
    # stores shuffled filenames
    file_ds = tf.data.Dataset.list_files(file_pattern)
    # read from multiple files in parallel
    ds = tf.data.TFRecordDataset(file_ds,
                                 num_parallel_reads=tf.data.experimental.AUTOTUNE,
                                 buffer_size=file_buffer)
    # randomly draw examples from the shuffle buffer
    # (uses the shuffle_buffer argument rather than a hard-coded 1024)
    ds = ds.shuffle(buffer_size=shuffle_buffer,
                    reshuffle_each_iteration=True)
    # batch the examples
    # dropping remainder for now, trouble when parsing - adding labels
    ds = ds.batch(batch_size, drop_remainder=True)
    # parse the records into the correct types
    ds = ds.map(lambda x: _my_parser(x, label, batch_size),
                num_parallel_calls=tf.data.experimental.AUTOTUNE)
    ds = ds.prefetch(tf.data.experimental.AUTOTUNE)
    return ds

def _my_parser(examples, label, batch_size):
    '''Parses a batch of serialised tf.train.Example(s)

    Args:
        examples: a batch of serialised tf.train.Example(s)
        label: target label assigned to every example in the batch
        batch_size: number of examples in the batch

    Returns:
        a tuple (segment, label)
        where segment is a tensor of shape (#in_batch, #frames, h, w, #channels)
    '''
    # ex['segment'] will be a tensor of serialised tensors
    ex = tf.io.parse_example(examples, features=feature_description)
    ex['segment'] = tf.map_fn(_parse_segment,
                              ex['segment'], dtype=tf.uint8)
    # ignoring filename and segment num for now
    # returns a tuple (tensor1, tensor2)
    # tensor1 is a batch of segments, tensor2 is the corresponding labels
    return (ex['segment'], tf.fill((batch_size, 1), label))

def _parse_segment(segment):
    '''Parses a segment and returns it as a tensor

    A segment is a serialised tensor of a number of encoded jpegs
    '''
    # now a tensor of encoded jpegs
    parsed = tf.io.parse_tensor(segment, out_type=tf.string)
    # now a tensor of shape (#frames, h, w, #channels)
    parsed = tf.map_fn(lambda y: tf.io.decode_jpeg(y), parsed, dtype=tf.uint8)
    return parsed
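
To make the record layout described above concrete, here is a minimal round-trip sketch. It is not our actual tf2_preprocessing.py (that script is not shown here); the helper names make_serialised_example and parse_single, the dummy file name, and the all-zero frames are assumptions made purely for illustration. It writes one synthetic 16-frame segment in the layout we described and decodes it again the same way _parse_segment does.

```python
import tensorflow as tf

feature_description = {
    'segment': tf.io.FixedLenFeature([], tf.string),
    'file': tf.io.FixedLenFeature([], tf.string),
    'num': tf.io.FixedLenFeature([], tf.int64),
}


def make_serialised_example(frames, file_name, num):
    """Hypothetical writer: frames is a uint8 tensor of shape (16, 128, 128, 3)."""
    # Encode every frame as a JPEG string, then pack the strings into one tensor
    encoded = [tf.io.encode_jpeg(frame) for frame in tf.unstack(frames)]
    segment = tf.io.serialize_tensor(tf.stack(encoded))
    feature = {
        'segment': tf.train.Feature(
            bytes_list=tf.train.BytesList(value=[segment.numpy()])),
        'file': tf.train.Feature(
            bytes_list=tf.train.BytesList(value=[file_name.encode()])),
        'num': tf.train.Feature(
            int64_list=tf.train.Int64List(value=[num])),
    }
    example = tf.train.Example(features=tf.train.Features(feature=feature))
    return example.SerializeToString()


def parse_single(serialised):
    """Parse one serialised example back into a (frames, h, w, channels) tensor."""
    ex = tf.io.parse_single_example(serialised, feature_description)
    jpegs = tf.io.parse_tensor(ex['segment'], out_type=tf.string)
    # dtype= matches the TF2.1 map_fn signature used in the code above
    return tf.map_fn(tf.io.decode_jpeg, jpegs, dtype=tf.uint8)


# Synthetic all-black segment, only to check shapes
frames = tf.zeros((16, 128, 128, 3), dtype=tf.uint8)
serialised = make_serialised_example(frames, 'dummy.mp4', 0)
decoded = parse_single(serialised)
print(decoded.shape)
```

This only checks that the shapes survive the round trip; it says nothing about memory behaviour.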

Problem

While training, our model crashed because it ran out of RAM. We investigated by running some tests and profiling memory usage with memory-profiler, using the --include-children flag.

All these tests were run (CPU only) by simply iterating over the dataset multiple times with the following code:

count = 0
dir_path = 'some/path'
ds = build_dataset(dir_path, file_buffer=some_value)
for itr in range(100):
    print(itr)
    for itx in ds:
        count += 1
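
For anyone who wants to reproduce this without memory-profiler, the loop above could also log memory itself. The sketch below is an assumption, not what we ran: it uses the standard-library resource module (Unix only), the helper names peak_rss_mib and iterate_and_log are made up for illustration, and it reports the peak resident set size of the current process only, so unlike --include-children it does not count child processes and the value can never go down.

```python
import resource
import sys


def peak_rss_mib():
    """Peak resident set size of this process in MiB (Unix only)."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    # ru_maxrss is reported in kilobytes on Linux and in bytes on macOS
    divisor = 1024 * 1024 if sys.platform == 'darwin' else 1024
    return peak / divisor


def iterate_and_log(make_iterable, passes):
    """Iterate over make_iterable() `passes` times, logging peak RSS per pass."""
    history = []
    count = 0
    for itr in range(passes):
        for _ in make_iterable():
            count += 1
        history.append(peak_rss_mib())
        print(itr, count, round(history[-1], 1))
    return count, history


# Stand-in iterable so the sketch runs without any TFRecords
count, history = iterate_and_log(lambda: range(10), passes=3)
```

With the real dataset, the call would be iterate_and_log(lambda: ds, passes=100). A peak that keeps climbing from pass to pass would be consistent with the gradual build-up we describe below.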

The total size of the subset of TFRecords we are currently working with is ~3 GB. We would prefer to use TF2.1, but we can test with TF2.2 as well.

According to the TF2 docs, the buffer_size argument of tf.data.TFRecordDataset (which we set through file_buffer) is in bytes.

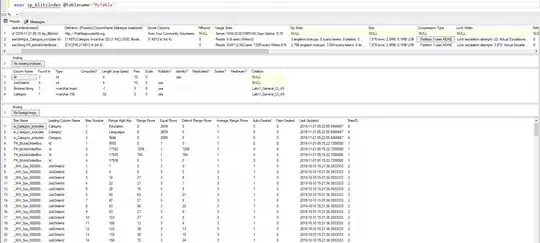

Trial 1: file_buffer = 500*1024*1024, TF2.1

Trial 2: file_buffer = 500*1024*1024, TF2.2

This one seems much better.

Trial 3: file_buffer = 1024*1024, TF2.1. We don't have the plot with us, but RAM usage peaks at ~4.5 GB.

Trial 4: file_buffer = 1024*1024, TF2.1, but prefetch set to 10

I think there is a memory leak here, as we can see the memory usage gradually builds up over time.

All trials below were run for only 50 iterations instead of 100.

Trial 5: file_buffer = 500*1024*1024, TF2.1, prefetch = 2, all other AUTOTUNE values set to 16.

Trial 6: file_buffer = 1024*1024, rest same as Trial 5.

Questions

- How does the file_buffer value affect memory usage? Comparing Trial 1 and Trial 3, file_buffer was reduced by a factor of 500, but memory usage only dropped by half. Is the file buffer value really in bytes?

- Trial 6's parameters seemed promising, but training the model with the same settings failed because it ran out of memory again.

- Is there a bug in TF2.1? Why is there such a huge difference between Trial 1 and Trial 2?

- Should we continue using AUTOTUNE or revert to constant values?
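
For context on the first question, this is the back-of-the-envelope arithmetic behind our expectations. The frame, segment, and batch sizes come from the setup described above. The assumption that each parallel reader holds its own read buffer of up to file_buffer bytes is ours and unverified, and the function names are made up for illustration.

```python
MIB = 1024 * 1024

FRAMES, HEIGHT, WIDTH, CHANNELS = 16, 128, 128, 3
BATCH_SIZE = 16


def decoded_segment_bytes():
    """Size of one decoded uint8 segment: frames * h * w * channels."""
    return FRAMES * HEIGHT * WIDTH * CHANNELS


def decoded_batch_bytes(batch_size=BATCH_SIZE):
    """Size of one decoded batch of segments."""
    return batch_size * decoded_segment_bytes()


def reader_buffers_bytes(num_readers, file_buffer):
    """Upper bound on read buffers, ASSUMING one buffer per parallel reader."""
    return num_readers * file_buffer


# Decoded data is small: 0.75 MiB per segment, 12 MiB per batch of 16
print(decoded_segment_bytes() / MIB)
print(decoded_batch_bytes() / MIB)

# Read buffers with 16 parallel readers (the constant used in Trials 5 and 6)
print(reader_buffers_bytes(16, 500 * MIB) / MIB)  # file_buffer = 500*1024*1024
print(reader_buffers_bytes(16, 1 * MIB) / MIB)    # file_buffer = 1024*1024
```

Under that assumption the decoded batches are tiny compared with the read buffers, which is why we expected a 500-fold smaller file_buffer to reduce memory usage by far more than half.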

I would be happy to run more tests with different parameters. Thanks in advance!