I have a custom-built SCDF server, packaged as a Docker image in OpenShift and referenced as the image in server-deployment.yaml. I use an external Oracle database to store the task metadata. I pass all the database properties in a ConfigMap. The database password is base64-encoded and stored in a Secret that the ConfigMap references. SCDF uses these database details to store the task metadata.

SCDF then passes these datasource properties on to the executing job. As a result, they show up as job parameters: the datasource properties from the ConfigMap, including the database password, are printed in the logs as job parameters and stored in the `BATCH_JOB_EXECUTION_PARAMS` table.

I thought that keeping the password in a Secret referenced from the ConfigMap would prevent this, but it does not. Below are the log output and a snippet of the table showing the job parameters.

How can I avoid passing these database properties to the executing job as job parameters, so that the credentials are not exposed?

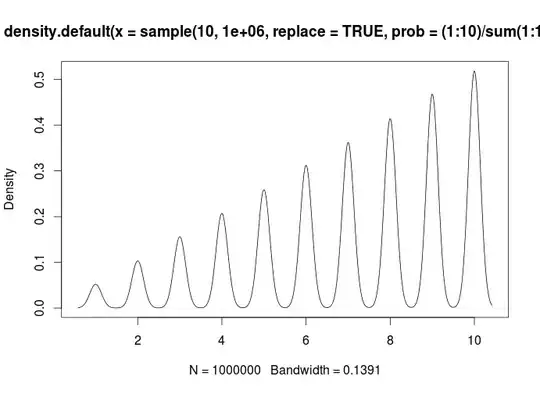

    12-06-2020 18:12:38.540 [main] INFO org.springframework.batch.core.launch.support.SimpleJobLauncher.run - Job:
    [FlowJob: [name=Job]] launched with the following parameters: [{
    -spring.cloud.task.executionid=8010,
    -spring.cloud.data.flow.platformname=default,
    -spring.datasource.username=ACTUAL_USERNAME,
    -spring.cloud.task.name=Alljobs,
    Job.ID=1591985558466,
    -spring.datasource.password=ACTUAL_PASSWORD,
    -spring.datasource.driverClassName=oracle.jdbc.OracleDriver,
    -spring.datasource.url=DATASOURCE_URL,
    -spring.batch.job.names=Job_1}]

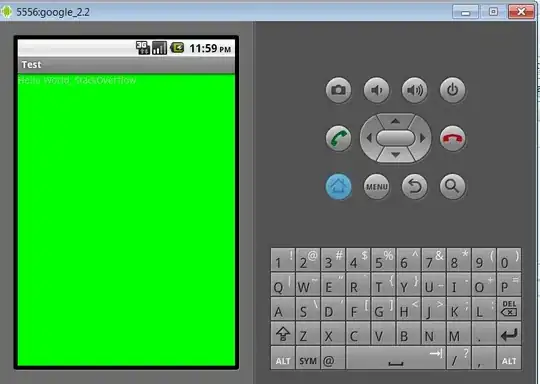

Pod created for the job execution (OpenShift screenshot)

Custom SCDF Dockerfile
===========================

    FROM maven:3.5.2-jdk-8-alpine AS MAVEN_BUILD
    COPY pom.xml /build/
    COPY src /build/src/
    WORKDIR /build/
    RUN mvn package

    FROM openjdk:8-jre-alpine
    WORKDIR /app
    COPY --from=MAVEN_BUILD /build/target/BatchAdmin-0.0.1-SNAPSHOT.jar /app/
    ENTRYPOINT ["java", "-jar", "BatchAdmin-0.0.1-SNAPSHOT.jar"]

Deployment.yaml
===============

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: scdf-server
      labels:
        app: scdf-server
    spec:
      selector:
        matchLabels:
          app: scdf-server
      replicas: 1
      template:
        metadata:
          labels:
            app: scdf-server
        spec:
          containers:
          - name: scdf-server
            image: docker-registry.default.svc:5000/batchadmin/scdf-server #DockerImage
            imagePullPolicy: Always
            volumeMounts:
              - name: config
                mountPath: /config
                readOnly: true
            ports:
            - containerPort: 80
            livenessProbe:
              httpGet:
                path: /management/health
                port: 80
              initialDelaySeconds: 45
            readinessProbe:
              httpGet:
                path: /management/info
                port: 80
              initialDelaySeconds: 45
            resources:
              limits:
                cpu: 1.0
                memory: 2048Mi
              requests:
                cpu: 0.5
                memory: 1024Mi
            env:
            - name: KUBERNETES_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: "metadata.namespace"
            - name: SERVER_PORT
              value: '80'
            - name: SPRING_CLOUD_CONFIG_ENABLED
              value: 'false'
            - name: SPRING_CLOUD_DATAFLOW_FEATURES_ANALYTICS_ENABLED
              value: 'true'
            - name: SPRING_CLOUD_DATAFLOW_FEATURES_SCHEDULES_ENABLED
              value: 'true'
            - name: SPRING_CLOUD_DATAFLOW_TASK_COMPOSED_TASK_RUNNER_URI
              value: 'docker://springcloud/spring-cloud-dataflow-composed-task-runner:2.6.0.BUILD-SNAPSHOT'
            - name: SPRING_CLOUD_KUBERNETES_CONFIG_ENABLE_API
              value: 'true'
            - name: SPRING_CLOUD_KUBERNETES_SECRETS_ENABLE_API
              value: 'true'
            - name: SPRING_CLOUD_KUBERNETES_SECRETS_PATHS
              value: /etc/secrets
            - name: SPRING_CLOUD_DATAFLOW_FEATURES_TASKS_ENABLED
              value: 'true'
            - name: SPRING_CLOUD_KUBERNETES_CONFIG_NAME
              value: scdf-server
            - name: SPRING_CLOUD_DATAFLOW_SERVER_URI
              value: 'http://${SCDF_SERVER_SERVICE_HOST}:${SCDF_SERVER_SERVICE_PORT}'
            # Add Maven repo for metadata artifact resolution for all stream apps
            - name: SPRING_APPLICATION_JSON
              value: "{ \"maven\": { \"local-repository\": null, \"remote-repositories\": { \"repo1\": { \"url\": \"https://repo.spring.io/libs-snapshot\"} } } }"
          serviceAccountName: scdf-sa
          volumes:
          - name: config
            configMap:
              name: scdf-server
              items:
              - key: application.yaml
                path: application.yaml
          #- name: SPRING_CLOUD_DATAFLOW_FEATURES_TASKS_ENABLED
          #value : 'true'

server-config.yaml
==================

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: scdf-server
      labels:
        app: scdf-server
    data:
      application.yaml: |-
        spring:
          cloud:
            dataflow:
              task:
                platform:
                  kubernetes:
                    accounts:
                      default:
                        limits:
                          memory: 1024Mi
                          cpu: 2
                        entry-point-style: exec
                        image-pull-policy: always
          datasource:
            url: jdbc:oracle:thin:@db_url
            username: BATCH_APP
            password: ${oracle-root-password}
            driver-class-name: oracle.jdbc.OracleDriver
            testOnBorrow: true
            validationQuery: "SELECT 1"
          flyway:
            enabled: false
          jpa:
            hibernate:
              use-new-id-generator-mappings: true
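One possible direction, assuming the SCDF release in use supports it (this should be checked against the reference guide for that version), is to stop the server from forwarding the credentials as task arguments and let each task pod read them from the Secret instead. The sketch below would be merged into the `application.yaml` above. It assumes the server property `spring.cloud.dataflow.task.use-kubernetes-secrets-for-db-credentials`, which, as I understand it, keeps SCDF from appending `spring.datasource.username` and `spring.datasource.password` to the launch arguments, and the Kubernetes deployer property `secretKeyRefs` on the `default` platform account, which maps Secret keys to environment variables in the task pod. The `oracle-username` key is hypothetical and would have to be added to the `oracle` Secret first.

```yaml
spring:
  cloud:
    dataflow:
      task:
        # Assumption: supported by your SCDF release. When true, the server should
        # no longer add spring.datasource.username/password to task launch arguments.
        use-kubernetes-secrets-for-db-credentials: true
        platform:
          kubernetes:
            accounts:
              default:
                # Kubernetes deployer property: each entry becomes an environment
                # variable in the task pod, read from the named Secret and data key.
                secretKeyRefs:
                  - envVarName: SPRING_DATASOURCE_PASSWORD
                    secretName: oracle
                    dataKey: oracle-root-password
                  # Hypothetical key: "oracle-username" must be added to the oracle Secret.
                  - envVarName: SPRING_DATASOURCE_USERNAME
                    secretName: oracle
                    dataKey: oracle-username
```

Spring Boot's relaxed binding maps `SPRING_DATASOURCE_PASSWORD` to `spring.datasource.password`, so the task's datasource would still be configured, just not from command-line arguments. If this works as assumed, the username and password should disappear from the job parameters, while the URL and driver class would presumably still be passed.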

oracle-secrets.yaml
===================

    apiVersion: v1
    kind: Secret
    metadata:
      name: oracle
      labels:
        app: oracle
    data:
      oracle-root-password: a2xldT3ederhgyzFCajE4YQ==

Any help would be much appreciated. Thanks.