I wrote the following code to create a random matrix Sigma with specified eigenvalues, and then sample vectors from multivariate normal distribution with zero mean and covariance Sigma.

def generate_data(N, d):

eigenvalues = [0] * (d + 1)

for k in range(1, d + 2):

eigenvalues[k - 1] = k

random_matrix = numpy.random.randn(d + 1, d + 1)

random_orthogonal = numpy.linalg.qr(random_matrix)[0]

sqrt_cov = random_orthogonal @ numpy.diag(numpy.sqrt(eigenvalues))

X = numpy.zeros((N, d + 1))

for i in range(N):

vec = numpy.random.standard_normal(d + 1)

X[i] = sqrt_cov @ vec

After this code, X should be N by d+1 matrix that's been sampled from the desired distribution.

Now I want to know what are the eigenvalues of the sample covariance matrix of X. If I am not mistaken, it should be similar to Sigma

def get_sample_covariance(data):

data = data - data.mean(axis=0)

sample_cov = data.T @ data / (data.shape[0] - 1)

return sample_cov

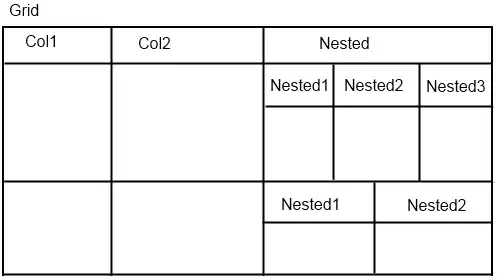

I then plotted the eigenvalues of sample_cov

I expected a roughly linear function, going from d (which was 500) to 1. I got this

What gives? Where's the mistake?