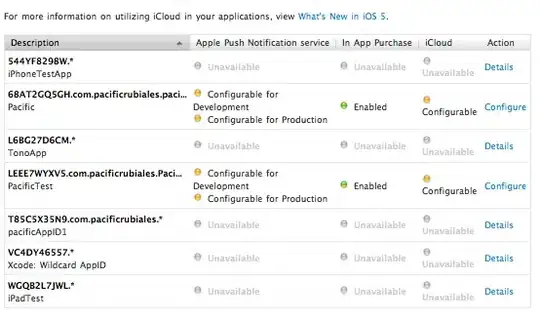

I'm working with GANs on the Single Image Super-Resolution (SISR) problem at 4x scaling. I am using residual learning, so what I get back from the trained network is a tensor containing the estimated residual image between the upscaled input image and the target image. I feed the network normalized NumPy arrays representing the images (`np.asarray(image) / 255`).
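For reference, here is a minimal sketch of that normalization and its inverse in plain NumPy, run on a synthetic uint8 array. The helper names `to_unit_range` and `to_uint8` are hypothetical. Note that `np.asarray` on an RGB PIL image gives a height x width x channel array, whereas `transforms.ToTensor()` returns channel x height x width, also scaled to [0, 1]. The inverse must multiply by 255 and clip first, otherwise out-of-range values wrap around when cast to uint8.

```python
import numpy as np

# Hypothetical helpers, for illustration only.
def to_unit_range(img_u8: np.ndarray) -> np.ndarray:
    """uint8 HxWxC in [0, 255] -> float32 CxHxW in [0, 1] (same layout as ToTensor)."""
    return (img_u8.astype(np.float32) / 255.0).transpose(2, 0, 1)

def to_uint8(x: np.ndarray) -> np.ndarray:
    """float CxHxW in [0, 1] -> uint8 HxWxC; clip first so out-of-range values can't wrap."""
    x = np.clip(x, 0.0, 1.0)
    return np.round(x * 255.0).astype(np.uint8).transpose(1, 2, 0)

# Synthetic 64x64 RGB image standing in for the low-resolution input.
rng = np.random.default_rng(0)
lr_u8 = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)

x = to_unit_range(lr_u8)
print(x.shape, x.min() >= 0.0, x.max() <= 1.0)  # (3, 64, 64) True True
restored = to_uint8(x)
print(np.array_equal(restored, lr_u8))          # True
```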

To get the final estimated image, I therefore add the residual image to the upscaled input image. Here is the code I use (the input image is 64x64 and the output is 256x256):

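```python
import torch
from PIL import Image
from torchvision import transforms

# `net`, `image_folder` and `image_name` are defined elsewhere
net.eval()

img = Image.open(image_folder + 'lr/' + image_name)

tens = transforms.ToTensor()     # PIL image -> float tensor (C, H, W) in [0, 1]
toimg = transforms.ToPILImage()  # float tensor (C, H, W) in [0, 1] -> PIL image

lr = tens(img).unsqueeze(0)      # (1, 3, 64, 64): add the batch dimension
bicub_res = tens(img.resize((img.size[0] * 4, img.size[1] * 4), Image.BICUBIC))  # (3, 256, 256)

with torch.no_grad():
    residual = net(lr)           # (1, 3, 256, 256): estimated residual

# ToTensor() scales to [0, 1], so clamp to [0, 1] rather than [0, 255]
output = torch.add(bicub_res, residual[0]).clamp(0, 1)
output = toimg(output)
```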
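The reconstruction step itself can be sketched in NumPy as below. This is an illustration under assumptions: the residual is a random stand-in for the network output, and `np.repeat` (nearest-neighbour upscaling) replaces the bicubic resize only to keep the example dependency-free. What it shows is that, because both the upscaled input and the residual live in the [0, 1] range, the sum should be clipped to [0, 1] before conversion to 8-bit, rather than to [0, 255].

```python
import numpy as np

SCALE = 4

def upscale_nearest(x: np.ndarray, scale: int) -> np.ndarray:
    """CxHxW -> Cx(H*scale)x(W*scale); a stand-in for the bicubic resize."""
    return x.repeat(scale, axis=1).repeat(scale, axis=2)

def reconstruct(lr: np.ndarray, residual: np.ndarray, scale: int = SCALE) -> np.ndarray:
    """Estimated HR image = upscaled LR + predicted residual, clipped to [0, 1]."""
    up = upscale_nearest(lr, scale)
    if residual.ndim == 4:  # drop the batch dimension of 1, as in the network output
        residual = residual[0]
    return np.clip(up + residual, 0.0, 1.0)

rng = np.random.default_rng(0)
lr = rng.random((3, 64, 64), dtype=np.float32)                          # LR image in [0, 1]
residual = rng.normal(0.0, 0.05, (1, 3, 256, 256)).astype(np.float32)   # fake network output

sr = reconstruct(lr, residual)
print(sr.shape, sr.min() >= 0.0, sr.max() <= 1.0)  # (3, 256, 256) True True
```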

Given these low-resolution, high-resolution, and residual (network output) images:

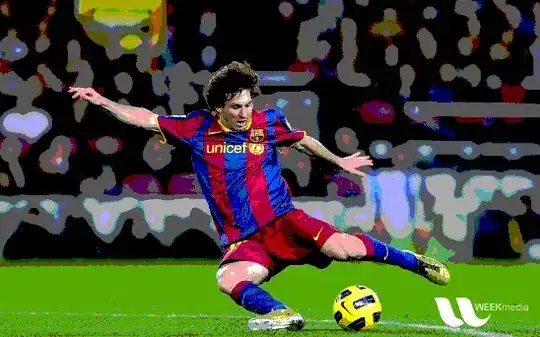

If I add the residual image to the upscaled low-resolution image, as in the code above, I get:

which looks too dark. Since the data are NumPy arrays, I tried stretching the values back to the range [0, 255] and converting the result back to an image. This gives:
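Stretching to [0, 255] usually means a global min-max rescale, sketched below on synthetic data. The function name and the per-channel offsets are made up for illustration. Because the stretch applies one affine map to the whole array, it cannot undo an offset that differs from channel to channel: each channel ends up as `a * true_c + (b - a * offset_c)`, so the channel-specific error survives.

```python
import numpy as np

def stretch_to_uint8_range(x: np.ndarray) -> np.ndarray:
    """Global min-max stretch: maps the smallest value to 0 and the largest to 255."""
    lo, hi = x.min(), x.max()
    return (x - lo) / (hi - lo) * 255.0

rng = np.random.default_rng(0)
true_img = rng.random((3, 8, 8)) * 255.0                  # reference image, CxHxW, in [0, 255]
offsets = np.array([30.0, 60.0, 90.0]).reshape(3, 1, 1)   # hypothetical per-channel constants
shifted = true_img - offsets                              # result if they are never added back

stretched = stretch_to_uint8_range(shifted)
# Every channel goes through the same affine map, so the differing offsets remain.
print(np.allclose(stretched, true_img))                   # False
print(np.allclose(shifted + offsets, true_img))           # True
```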

which is a bit brighter than before, but still very dark. What am I doing wrong? How can I get my image back?

EDIT: To answer my own question: the problem was a constant term for each layer that I had forgotten to add back.
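A common way for such a per-channel constant to enter a pipeline is per-channel normalization, `(x - mean) / std`, the formula used by `transforms.Normalize`. Assuming that is what happened here (the post does not say), the sketch below shows the inverse in NumPy with `(3, 1, 1)`-shaped constants that broadcast over height and width. The ImageNet mean/std values are only a placeholder assumption; the constants must be whatever was used during training, and if only a mean was subtracted, `STD` is simply all ones.

```python
import numpy as np

# Hypothetical per-channel statistics (ImageNet values as a placeholder);
# replace them with the constants actually used during training.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32).reshape(3, 1, 1)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32).reshape(3, 1, 1)

def normalize(x: np.ndarray) -> np.ndarray:
    """CxHxW in [0, 1] -> per-channel standardized (same formula as transforms.Normalize)."""
    return (x - MEAN) / STD

def denormalize(y: np.ndarray) -> np.ndarray:
    """Inverse of normalize(): multiply back by std, then add the per-channel mean back."""
    return y * STD + MEAN

rng = np.random.default_rng(0)
img = rng.random((3, 16, 16), dtype=np.float32)
y = normalize(img)
restored = np.clip(denormalize(y), 0.0, 1.0)
print(np.allclose(restored, img, atol=1e-6))  # True
```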

However, I have a follow-up question: after recovering the correct images, I noticed some kind of noise on each of them:

Looking at other images, such as the baby, I noticed that this noise is a faint "watermark" copy of the image itself, repeated 9 times on a 3x3 grid. The pattern is the same for every picture, no matter what I change or how I train the network. Why do I see these artifacts?
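One layout mistake that produces this exact signature is converting between channel-first (CxHxW, as in PyTorch) and channel-last (HxWxC, as in NumPy/PIL) data with `reshape`/`view` instead of a transpose/`permute`. In the CxHxW to HxWxC direction, the reshaped result is a 3x3 grid of nine subsampled copies of the image. Whether this is what happens in this pipeline is only an assumption, since the training and data-loading code is not shown. The NumPy sketch below merely demonstrates the effect on a synthetic array; `grid_tile` is a hypothetical helper.

```python
import numpy as np

def chw_to_hwc_reshape(x: np.ndarray) -> np.ndarray:
    """Wrong: reinterprets the flat buffer with a new shape; no axis is moved."""
    c, h, w = x.shape
    return x.reshape(h, w, c)

def chw_to_hwc_transpose(x: np.ndarray) -> np.ndarray:
    """Right: moves the channel axis last (torch equivalent: x.permute(1, 2, 0))."""
    return x.transpose(1, 2, 0)

def grid_tile(img: np.ndarray, r: int, t: int) -> np.ndarray:
    """Hypothetical helper: tile (r, t) of a 3x3 grid over channel 0 of an HxWxC image."""
    h, w = img.shape[:2]
    return img[r * h // 3:(r + 1) * h // 3, t * w // 3:(t + 1) * w // 3, 0]

# Synthetic 3x6x6 image in which every entry is unique, so mix-ups are traceable.
c, h, w = 3, 6, 6
x = np.arange(c * h * w).reshape(c, h, w)

good = chw_to_hwc_transpose(x)
bad = chw_to_hwc_reshape(x)

# Each tile of the reshaped image is a 3x-subsampled copy of one input channel:
# grid row r comes from channel r, grid column t from rows t, t+3, ... of that channel.
for r in range(3):
    for t in range(3):
        print(r, t, np.array_equal(grid_tile(bad, r, t), x[r, t::3, ::3]))  # all True
```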