I have the same query running on two different DB servers with almost identical configuration. The query does a full table scan (FTS) on one table:

SELECT COUNT (1) FROM tax_proposal_dtl WHERE tax_proposal_no = :b1 AND taxid != :b2 AND INSTR(:b3 , ',' || STATUS || ',' ) > 0 ;

On the 1st DB I get the result in less than 3 seconds with 0 disk reads, while on the 2nd DB disk reads are high and the elapsed time is approximately 9 seconds.

The only difference between the table configuration on the two DBs is that on the 1st DB the table has Cache = 'Y', while on the 2nd it has Cache = 'N'. My understanding is that for an FTS the buffer cache won't be used and direct path reads will be used instead. So why is the performance of the same query affected by cache/nocache? (That is the only difference between the two environments, and even the execution plan is the same.)
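For anyone who wants to reproduce the comparison, the Cache setting can be read from the data dictionary. This is a minimal sketch, assuming access to DBA_TABLES and that the table name is unique across schemas (otherwise add an owner filter):

```sql
-- Show the CACHE attribute of the table; run on both databases and compare.
-- The CACHE column is stored padded with spaces, hence the TRIM.
SELECT owner,
       table_name,
       TRIM(cache) AS cache
FROM   dba_tables
WHERE  table_name = 'TAX_PROPOSAL_DTL';
```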

As suggested by Jon, and after doing further research on this topic (especially with regard to _SMALL_TABLE_THRESHOLD), I am adding more details.

Current version: Oracle Database 12c Enterprise Edition Release 12.2.0.1.0 - 64bit

Details of 2nd DB:

Total block count of table from DBA_SEGMENTS = 196736

Details of 1st DB:

Total block count of table from DBA_SEGMENTS = 172288
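The block counts above can be obtained with a query along these lines. It is only a sketch: it assumes the table is not partitioned and that the segment name is unique, and it reports allocated blocks. As far as I understand, the size Oracle compares against the threshold may come from the optimizer statistics (DBA_TABLES.BLOCKS) rather than from the allocated segment size, so it may be worth checking both.

```sql
-- Allocated blocks for the table segment, as reported by DBA_SEGMENTS.
SELECT owner,
       segment_name,
       segment_type,
       SUM(blocks) AS total_blocks
FROM   dba_segments
WHERE  segment_name = 'TAX_PROPOSAL_DTL'
GROUP  BY owner, segment_name, segment_type;
```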

The execution plan is the same on both DBs, but there are two major differences:

a) On the 2nd DB the cache option is false on the table (I tried alter table cache, but it still had no impact on performance).

b) On the 2nd DB the _STT parameter is 23920, so as per the 5*_STT rule (5 * 23920 = 119600 blocks, less than the table's 196736 blocks) the table does not qualify as a medium-sized table. On the 1st DB the _STT parameter is 48496, so as per the 5*_STT rule (5 * 48496 = 242480 blocks, more than the table's 172288 blocks) the table qualifies as a medium-sized table.
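The current _STT value is a hidden parameter, so it does not show up in V$PARAMETER by default. A commonly used way to read it is through the X$ fixed tables; the sketch below assumes a SYS connection, since X$ tables are not visible to ordinary users:

```sql
-- Read the session-level value of the hidden parameter and the 5*_STT limit.
-- Must be run as SYS.
SELECT i.ksppinm                 AS parameter_name,
       v.ksppstvl                AS session_value,
       5 * TO_NUMBER(v.ksppstvl) AS five_times_stt
FROM   x$ksppi  i
JOIN   x$ksppcv v ON v.indx = i.indx
WHERE  i.ksppinm = '_small_table_threshold';
```

Comparing five_times_stt with the table's block count on each database shows on which side of the limit the table falls.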

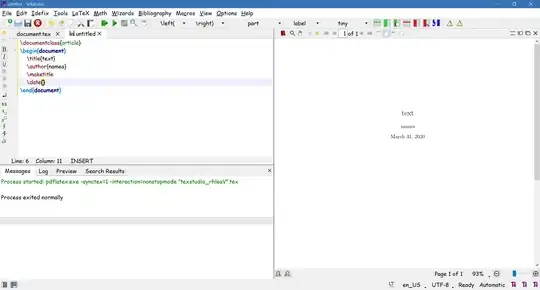

The chart above is based on my research so far on _STT and the Cache parameter, and shows how the system will behave for different table sizes.

Please let me know if my understanding is correct: I assume that the Cache option has no impact on medium or large tables, but helps retain small tables longer in the LRU list. Based on these assumptions and the chart, I conclude that on the 2nd DB the table is classified as large, hence direct path reads (DPR) and a longer elapsed time, while on the 1st DB it is classified as medium, hence cached reads and a shorter elapsed time.

As per this link, I have set the _STT parameter at session level on the 2nd DB:

alter session set "_small_table_threshold"=300000;

After this, performance improved considerably and is now almost the same as on the 1st DB, with 0 disk reads, which implies that the table is now considered small.

I have used the following articles in my research:
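One way to confirm whether the scan actually switched from direct path reads to buffered reads is to look at the session statistics before and after running the query. This is a sketch, assuming SELECT privilege on V$MYSTAT and V$STATNAME:

```sql
-- Run once before and once after the test query, in the same session,
-- and compare the values. An increase in 'physical reads direct' or
-- 'table scans (direct read)' indicates the scan used direct path reads.
SELECT n.name,
       s.value
FROM   v$mystat   s
JOIN   v$statname n ON n.statistic# = s.statistic#
WHERE  n.name IN ('physical reads',
                  'physical reads direct',
                  'table scans (direct read)');
```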

https://jonathanlewis.wordpress.com/2011/03/24/small-tables/

https://dioncho.wordpress.com/tag/full-table-scan/

https://mikesmithers.wordpress.com/2016/06/23/oracle-pinning-table-data-in-the-buffer-cache/

http://afatkulin.blogspot.com/2012/07/serial-direct-path-reads-in-11gr2-and.html

http://afatkulin.blogspot.com/2009/01/11g-adaptive-direct-path-reads-what-is.html