Consider a search panel that includes multiple options, like in the picture below:

I'm working with MongoDB and created a compound index on 3-4 properties in a specific order. But when I run different combinations of searches, I see a different execution plan (explain()) each time. Sometimes it is a collection scan (COLLSCAN, bad), and sometimes the query fits the index (IXSCAN).

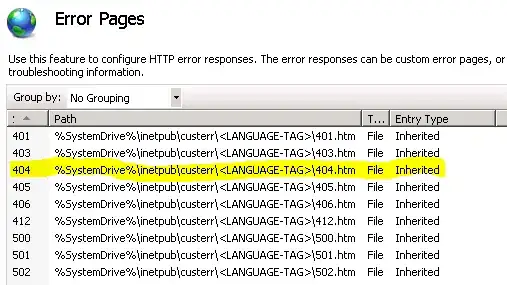
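To compare plans across search combinations, the stage names can be pulled out of the explain() output. This is only a minimal sketch: `collectStages` and `sampleExplain` are hypothetical names, and it assumes the usual `queryPlanner.winningPlan` layout with `stage`, `inputStage` and `inputStages` fields, which may look different on other server versions.

```javascript
// Collect every stage name from an explain()-shaped plan tree.
// Assumption: each plan node carries `stage` plus an optional `inputStage`
// (single child) or `inputStages` (several children).
function collectStages(plan, out = []) {
  if (!plan || typeof plan !== "object") return out;
  if (plan.stage) out.push(plan.stage);
  if (plan.inputStage) collectStages(plan.inputStage, out);
  for (const child of plan.inputStages || []) collectStages(child, out);
  // Assumption: some newer servers nest the tree under `queryPlan`.
  if (plan.queryPlan) collectStages(plan.queryPlan, out);
  return out;
}

// Hypothetical explain output, trimmed to the fields used here.
const sampleExplain = {
  queryPlanner: {
    winningPlan: {
      stage: "SORT",
      inputStage: {
        stage: "FETCH",
        inputStage: { stage: "IXSCAN", indexName: "example_index" },
      },
    },
  },
};

const stages = collectStages(sampleExplain.queryPlanner.winningPlan);
console.log(stages, stages.includes("COLLSCAN") ? "collection scan" : "index used");
```

In the shell, the real input would come from something like `db.getCollection("orders").find(filter).sort({ timestamp: 1 }).explain()`.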

The selective fields that should be handled by MongoDB indexes are: Brand, Types, Status, Warehouse, Carries, and Search (by id only).

My question is:

Do I have to create an index for every combination of fields in every order? That could be 10-20 compound indexes. Or should I create 1-3 big compound indexes? But again, that would not solve the ordering problem.

What is the best strategy for dealing with a large variety of field combinations?

I use queries with the same structure but different combinations of field/value pairs.

    // Example query.
    // The fields (and their order) can differ every time, depending on what the user selects!
    db.getCollection("orders").find({
      '$and': [
        { 'status': { '$in': ['XXX', 'YYY'] } },
        { 'searchId': { '$in': ['3859447'] } },
        { 'origin.brand': { '$in': ['aaaa', 'bbbb', 'cccc', 'ddd', 'eee', 'bundle'] } },
        {
          '$or': [
            { 'origin.carries': 'YYY' },
            { 'origin.carries': 'ZZZ' },
            { 'origin.carries': 'WWWW' }
          ]
        }
      ]
    }).sort({"timestamp":1})
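Since the filter changes with every user selection, it is probably built dynamically. The following is a minimal sketch of that step, not my production code: `buildFilter` and the shape of `selection` (field path mapped to an array of chosen values) are assumptions for illustration. It writes the three `origin.carries` alternatives as a single `$in`, which matches the same documents as the `$or` of equalities on one field.

```javascript
// Build a find() filter from whatever the user selected in the search panel.
// `selection` maps a field path to an array of chosen values (hypothetical shape).
function buildFilter(selection) {
  const clauses = [];
  for (const [field, values] of Object.entries(selection)) {
    // Skip fields the user left empty.
    if (!Array.isArray(values) || values.length === 0) continue;
    // One value becomes an equality match, several values become $in.
    clauses.push({ [field]: values.length === 1 ? values[0] : { $in: values } });
  }
  return clauses.length ? { $and: clauses } : {};
}

const filter = buildFilter({
  status: ["XXX", "YYY"],
  searchId: ["3859447"],
  "origin.brand": [], // nothing selected for brand in this example
  "origin.carries": ["YYY", "ZZZ", "WWWW"],
});
console.log(JSON.stringify(filter, null, 2));
```

The result can be passed directly to `find(filter).sort({ timestamp: 1 })`.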

My compound index is:

    { status: 1, searchId: -1, 'origin.brand': 1, 'origin.carries': 1, timestamp: 1 }

But that is only one combination. There could be plenty, like:

a. {status: 1}

b. {status: 1, searchId: -1}

c. {status: 1, searchId: -1, 'origin.brand': 1}

d. {status: 1, searchId: -1, 'origin.brand': 1, 'origin.carries': 1}

........
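As far as I understand the MongoDB documentation, combinations a to d are all prefixes of the single compound index above, and a compound index can serve queries on any of its prefixes. The sketch below only enumerates those prefixes and checks whether a set of queried fields lines up with one. `indexPrefixes`, `matchesPrefix` and `orderIndex` are hypothetical helpers, and the check is a simplification: the real planner may still use an index partially when only the leading fields are present.

```javascript
// Key pattern of the compound index, as ordered [field, direction] pairs.
const orderIndex = [
  ["status", 1],
  ["searchId", -1],
  ["origin.brand", 1],
  ["origin.carries", 1],
  ["timestamp", 1],
];

// List every prefix of a compound index key pattern.
function indexPrefixes(keyPattern) {
  return keyPattern.map((_, i) => Object.fromEntries(keyPattern.slice(0, i + 1)));
}

// True when the queried fields are exactly the fields of some prefix.
// The order of fields inside the query itself does not matter.
function matchesPrefix(keyPattern, queriedFields) {
  const wanted = new Set(queriedFields);
  const prefix = keyPattern.slice(0, wanted.size).map(([field]) => field);
  return prefix.length === wanted.size && prefix.every((f) => wanted.has(f));
}

const prefixes = indexPrefixes(orderIndex);
console.log(prefixes.map((p) => JSON.stringify(p)).join("\n"));
console.log(matchesPrefix(orderIndex, ["searchId", "status"]));
console.log(matchesPrefix(orderIndex, ["origin.brand"]));
```

A combination such as brand alone skips the leading `status` key, which is the kind of search where I would expect to see the collection scan.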

Additionally, what will happen to write/read performance? I think write performance will degrade in exchange for faster reads ...

The query patterns are:

1. find(...) with '$and'/'$or' + sort

2. Aggregation with $match/$sort
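Both patterns carry the same filter and sort. A rough sketch of how the find() form maps onto the aggregation form, where `toPipeline` is a hypothetical helper and the sample filter is made up for illustration:

```javascript
// Express a find(filter).sort(sort) query as an aggregation pipeline.
// Keeping $match first and $sort directly after it mirrors the find() form.
function toPipeline(filter, sort) {
  return [{ $match: filter }, { $sort: sort }];
}

const pipeline = toPipeline(
  { status: { $in: ["XXX", "YYY"] } }, // sample filter, illustration only
  { timestamp: 1 }
);
console.log(JSON.stringify(pipeline));
```

The pipeline would then be passed to `db.getCollection("orders").aggregate(pipeline)`.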

thanks