I used U-Net to segment cracks on the road and YOLO to detect them. The images were processed by the YOLO and U-Net models separately. I now want to apply the bounding boxes detected by YOLO to the corresponding U-Net output images, so that I can find the ratio of white to black pixels inside each bounding box. How can I do this masking? Thanks in advance!

-

If you didn't change the image sizes, can't you just use the YOLO box positions on your U-Net output image? – Micka Jun 07 '20 at 20:28

-

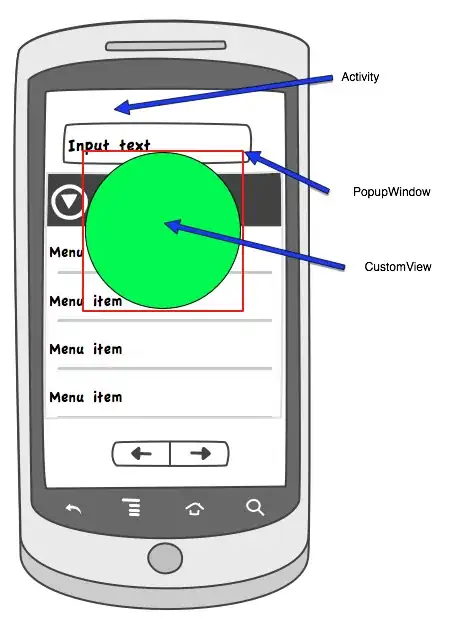

YOLO outputs the corners of the rectangle, so you should be able to use these to crop the image. See the `YOLO Object Detection with OpenCV` section [here](https://www.pyimagesearch.com/2018/11/12/yolo-object-detection-with-opencv/). – Barak Itkin Jun 07 '20 at 20:32
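A minimal sketch of the cropping step, assuming the U-Net output is a single-channel NumPy array the same size as the YOLO input and that each box is available as a top-left corner plus width and height in pixels. The helper name `crop_box` and the synthetic mask are illustrative only, not part of either model's API.

```python
import numpy as np

def crop_box(mask, x, y, w, h):
    """Return the part of `mask` covered by a box with top-left corner (x, y),
    width w and height h. The box is clipped to the image borders."""
    img_h, img_w = mask.shape[:2]
    x0, y0 = max(0, int(x)), max(0, int(y))
    x1, y1 = min(img_w, int(x + w)), min(img_h, int(y + h))
    return mask[y0:y1, x0:x1]  # NumPy indexes rows (y) first, then columns (x)

# Synthetic stand-in for a U-Net output: black image with one white patch.
mask = np.zeros((100, 200), dtype=np.uint8)
mask[20:40, 50:90] = 255

crop = crop_box(mask, 40, 10, 60, 40)
```

If your YOLO code returns box centers or normalized coordinates instead, convert them to pixel corners before slicing.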

-

@Barak Itkin So, as the first step I need to crop rectangles from the U-Net outputs using the same coordinates as the YOLO bounding boxes. What is the next step? How can I count the white pixels in each bounding box? – Max Jun 07 '20 at 20:53

-

@Micka No, I didn't change the image size. – Max Jun 07 '20 at 20:54

-

If the pixels span the full range of 0 to 255, you have to apply a threshold first. Afterwards you can use `cv2.countNonZero` to get the number of pixels that are not zero. – Micka Jun 07 '20 at 21:01
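The threshold-and-count step could look roughly like the sketch below. It uses plain NumPy so it runs without OpenCV; `cv2.threshold` followed by `cv2.countNonZero` on the crop should give the same count. The function name `count_white_black`, the `(x, y, w, h)` box layout, and the threshold of 127 are assumptions for illustration, so pick a threshold that suits your U-Net output.

```python
import numpy as np

def count_white_black(mask, box, thresh=127):
    """Count white and black pixels of a single-channel mask inside one box.

    box is (x, y, w, h) in pixels with (x, y) the top-left corner (assumed layout).
    Pixels strictly above `thresh` count as white, all others as black.
    """
    x, y, w, h = box
    crop = mask[max(0, y):y + h, max(0, x):x + w]
    white = int(np.count_nonzero(crop > thresh))
    black = int(crop.size) - white
    return white, black

# Synthetic stand-in for a U-Net output: black image with one white patch.
mask = np.zeros((100, 200), dtype=np.uint8)
mask[20:40, 50:90] = 255

boxes = [(40, 10, 60, 40)]  # hypothetical YOLO detections
for box in boxes:
    white, black = count_white_black(mask, box)
    # Guard against division by zero when the box contains no black pixels.
    ratio = white / black if black else float("inf")
    print(box, white, black, ratio)
```

Returning both counts lets you report white/black or white/total, whichever is more useful for your crack measure.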