I am trying to create my first pipeline in Dataflow. The same code runs when I execute it with the interactive Beam runner, but on Dataflow I get all sorts of errors that don't make much sense to me.

{"timestamp":1589992571906,"lastPageVisited":"https://kickassdataprojects.com/simple-and-complete-tutorial-on-simple-linear-regression/","pageUrl":"https://kickassdataprojects.com/","pageTitle":"Helping%20companies%20and%20developers%20create%20awesome%20data%20projects%20%7C%20Data%20Engineering/%20Data%20Science%20Blog","eventType":"Pageview","landingPage":0,"referrer":"direct","uiud":"31af5f22-4cc4-48e0-9478-49787dd5a19f","sessionId":322371}
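
For reference, here is a minimal sketch of decoding one such message with the standard library only. It uses a subset of the fields from the sample message above. `RAW_EVENT` and `decode_event` are hypothetical names used for illustration, and the sketch assumes the `timestamp` field is Unix epoch milliseconds. The magnitude of the value suggests this, but nothing in the data confirms it.

```python
import json
from datetime import datetime, timezone

# Subset of the fields from the sample message above (values unchanged).
RAW_EVENT = (
    '{"timestamp":1589992571906,'
    '"pageUrl":"https://kickassdataprojects.com/",'
    '"eventType":"Pageview","landingPage":0,"referrer":"direct",'
    '"uiud":"31af5f22-4cc4-48e0-9478-49787dd5a19f","sessionId":322371}'
)


def decode_event(raw):
    """Parse one JSON message and derive its event time (hypothetical helper)."""
    event = json.loads(raw)
    # Assumption: `timestamp` is epoch milliseconds, so divide to get seconds.
    event_seconds = event["timestamp"] / 1000.0
    event_time = datetime.fromtimestamp(event_seconds, tz=timezone.utc)
    return event, event_seconds, event_time


event, event_seconds, event_time = decode_event(RAW_EVENT)
print(event["sessionId"], event_time.isoformat())
```

If the millisecond assumption holds, the raw value would need dividing by 1000 before being passed to anything that expects epoch seconds, which is how `window.TimestampedValue` reads a plain number.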

Here is my code:

from __future__ import absolute_import
import apache_beam as beam
#from apache_beam.runners.interactive import interactive_runner
#import apache_beam.runners.interactive.interactive_beam as ib
import google.auth
from datetime import timedelta
import json
from datetime import datetime
from apache_beam import window
from apache_beam.transforms.trigger import AfterWatermark, AfterProcessingTime, AccumulationMode, AfterCount
from apache_beam.options.pipeline_options import GoogleCloudOptions
from apache_beam.options.pipeline_options import PipelineOptions
from apache_beam.options.pipeline_options import SetupOptions
from apache_beam.options.pipeline_options import StandardOptions
import argparse
import logging
from time import mktime

def setTimestamp(elem):
    from apache_beam import window
    return window.TimestampedValue(elem, elem['timestamp'])

def createTuples(elem):
    return (elem["sessionId"], elem)

def checkOutput(elem):
    print(elem)
    return elem

class WriteToBigQuery(beam.PTransform):
    """Generate, format, and write BigQuery table row information."""

    def __init__(self, table_name, dataset, schema, project):
        """Initializes the transform.

        Args:
          table_name: Name of the BigQuery table to use.
          dataset: Name of the dataset to use.
          schema: Dictionary in the format {'column_name': 'bigquery_type'}
          project: Name of the Cloud project containing BigQuery table.
        """
        # TODO(BEAM-6158): Revert the workaround once we can pickle super() on py3.
        #super(WriteToBigQuery, self).__init__()
        beam.PTransform.__init__(self)
        self.table_name = table_name
        self.dataset = dataset
        self.schema = schema
        self.project = project

    def get_schema(self):
        """Build the output table schema."""
        return ', '.join('%s:%s' % (col, self.schema[col]) for col in self.schema)

    def expand(self, pcoll):
        return (
            pcoll
            | 'ConvertToRow' >>
            beam.Map(lambda elem: {col: elem[col]
                                   for col in self.schema})
            | beam.io.WriteToBigQuery(
                self.table_name, self.dataset, self.project, self.get_schema()))

class ParseSessionEventFn(beam.DoFn):
    """Parses the raw game event info into a Python dictionary.

    Each event line has the following format:
      username,teamname,score,timestamp_in_ms,readable_time

    e.g.:
      user2_AsparagusPig,AsparagusPig,10,1445230923951,2015-11-02 09:09:28.224

    The human-readable time string is not used here.
    """

    def __init__(self):
        # TODO(BEAM-6158): Revert the workaround once we can pickle super() on py3.
        #super(ParseSessionEventFn, self).__init__()
        beam.DoFn.__init__(self)

    def process(self, elem):
        #timestamp = mktime(datetime.strptime(elem["timestamp"], "%Y-%m-%d %H:%M:%S").utctimetuple())
        elem['sessionId'] = int(elem['sessionId'])
        elem['landingPage'] = int(elem['landingPage'])
        yield elem

class AnalyzeSessions(beam.DoFn):

    def __init__(self):
        # TODO(BEAM-6158): Revert the workaround once we can pickle super() on py3.
        #super(AnalyzeSessions, self).__init__()
        from apache_beam import window
        beam.DoFn.__init__(self)

    def process(self, elem, window=beam.DoFn.WindowParam):
        from apache_beam import window
        sessionId = elem[0]
        uiud = elem[1][0]["uiud"]
        count_of_events = 0
        pageUrl = []
        window_end = window.end.to_utc_datetime()
        window_start = window.start.to_utc_datetime()
        session_duration = window_end - window_start
        for rows in elem[1]:
            if rows["landingPage"] == 1:
                referrer = rows["refererr"]
            pageUrl.append(rows["pageUrl"])

        print({
            "pageUrl": pageUrl,
            "eventType": "pageview",
            "uiud": uiud,
            "sessionId": sessionId,
            "session_duration": session_duration,
            "window_start": window_start
        })

        yield {
            'pageUrl': pageUrl,
            'eventType': "pageview",
            'uiud': uiud,
            'sessionId': sessionId,
            'session_duration': session_duration,
            'window_start': window_start,
        }

def run(argv=None, save_main_session=True):
    parser = argparse.ArgumentParser()
    parser.add_argument('--topic', type=str, help='Pub/Sub topic to read from')
    parser.add_argument(
        '--subscription', type=str, help='Pub/Sub subscription to read from')
    parser.add_argument(
        '--dataset',
        type=str,
        required=True,
        help='BigQuery Dataset to write tables to. '
        'Must already exist.')
    parser.add_argument(
        '--table_name',
        type=str,
        default='game_stats',
        help='The BigQuery table name. Should not already exist.')
    parser.add_argument(
        '--fixed_window_duration',
        type=int,
        default=60,
        help='Numeric value of fixed window duration for user '
        'analysis, in minutes')
    parser.add_argument(
        '--session_gap',
        type=int,
        default=5,
        help='Numeric value of gap between user sessions, '
        'in minutes')
    parser.add_argument(
        '--user_activity_window_duration',
        type=int,
        default=30,
        help='Numeric value of fixed window for finding mean of '
        'user session duration, in minutes')

    args, pipeline_args = parser.parse_known_args(argv)
    session_gap = args.session_gap * 60
    options = PipelineOptions(pipeline_args)

    # Set the pipeline mode to stream the data from Pub/Sub.
    options.view_as(StandardOptions).streaming = True
    options.view_as(StandardOptions).runner = 'DataflowRunner'
    options.view_as(SetupOptions).save_main_session = save_main_session

    p = beam.Pipeline(options=options)

    lines = (p
             | beam.io.ReadFromPubSub(
                 subscription="projects/phrasal-bond-274216/subscriptions/rrrr")
             | 'decode' >> beam.Map(lambda x: x.decode('utf-8'))
             | beam.Map(lambda x: json.loads(x))
             | beam.ParDo(ParseSessionEventFn())
             )

    next = (lines
            | 'AddEventTimestamps' >> beam.Map(setTimestamp)
            | 'Create Tuples' >> beam.Map(createTuples)
            | 'Window' >> beam.WindowInto(window.Sessions(15))
            | 'group by key' >> beam.GroupByKey()
            | 'analyze sessions' >> beam.ParDo(AnalyzeSessions())
            | beam.Map(print)
            | 'WriteTeamScoreSums' >> WriteToBigQuery(
                args.table_name, args.dataset,
                {
                    "uiud": 'STRING',
                    "session_duration": 'INTEGER',
                    "window_start": 'TIMESTAMP'
                },
                options.view_as(GoogleCloudOptions).project)
            )

    result = p.run()
    # result.wait_till_termination()

if __name__ == '__main__':
    logging.getLogger().setLevel(logging.INFO)
    run()

The problem I am facing is with the AnalyzeSessions ParDo: it doesn't produce any output or any error. I have tried this code in the interactive Beam runner and it worked there.

Any ideas why the pipeline is not working?

All the other steps, including the analyze sessions step, receive input.

Here is the output section.

The steps after this do not work. I don't see anything in the logs either; not even the print statements add anything there.

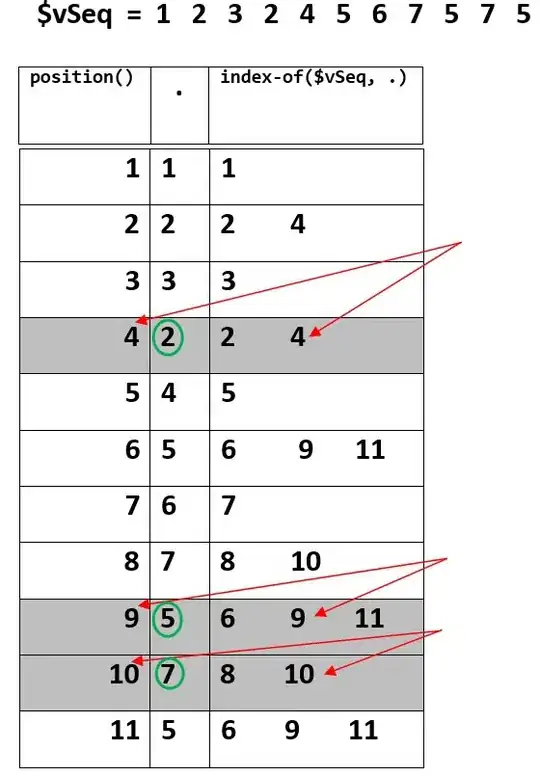

EDIT: If it helps, here is how my data is going into the WindowInto and GroupByKey steps.