Hi everybody, I have developed two neural network models for a chatbot. Let's say the user says: "Tell me how the weather will be tomorrow in Paris". The first model recognizes the user's intent, WEATHER_INFO, while the second extracts meaningful information from the phrase, such as LOC:Paris and DATE:tomorrow. Of course there are many other intent categories, for example MUSIC_PLAY.

Since the two models are not linked in any way, for the same phrase we could get, for example, intent:WEATHER_INFO together with TITLE:Paris, even though the slot TITLE belongs to the MUSIC_PLAY intent. To avoid this kind of error, many researchers have tried to improve performance by building a joint model in which the two tasks share information.

Here is my code for the slot-filling NN:

```python
from keras.models import Model, Input
from keras.layers import LSTM, Embedding, Dense, TimeDistributed, Dropout, Bidirectional
import keras as k
from keras_contrib.layers import CRF

# max_len, token_ids and n_tags come from the preprocessing step (not shown)
input = Input(shape=(max_len,))
word_embedding_size = 150
n_words = len(token_ids)

# Embedding Layer
model = Embedding(input_dim=n_words, output_dim=word_embedding_size, input_length=max_len)(input)

# BI-LSTM Layer
model = Bidirectional(LSTM(units=word_embedding_size,
                           return_sequences=True,
                           dropout=0.5,
                           recurrent_dropout=0.5,
                           kernel_initializer=k.initializers.he_normal()))(model)
model = LSTM(units=word_embedding_size * 2,
             return_sequences=True,
             dropout=0.5,
             recurrent_dropout=0.5,
             kernel_initializer=k.initializers.he_normal())(model)

# TimeDistributed Layer
model = TimeDistributed(Dense(n_tags, activation="relu"))(model)

# CRF Layer
crf = CRF(n_tags)
out = crf(model)  # output
model = Model(input, out)

# model compile and fit
from keras.callbacks import ModelCheckpoint
import matplotlib.pyplot as plt

# Optimiser
adam = k.optimizers.Adam(lr=0.0005, beta_1=0.9, beta_2=0.999)

# Compile model
model.compile(optimizer=adam, loss=crf.loss_function, metrics=[crf.accuracy, 'accuracy'])
model.summary()

# Saving the best model only
filepath = "ner-bi-lstm-td-model-{val_accuracy:.2f}.hdf5"
checkpoint = ModelCheckpoint(filepath, monitor='val_accuracy', verbose=1, save_best_only=True, mode='max')
callbacks_list = [checkpoint]

# Fit the best model (X_train and y_train come from the preprocessing step)
history = model.fit(X_train, y_train, batch_size=256, epochs=10, validation_split=0.1, verbose=1,
                    callbacks=callbacks_list)
```
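A stopgap that works with the two separate models as they are: decode the slots under the constraint that every tag must belong to a slot type the predicted intent allows, so "Paris" falls back to its best allowed tag instead of TITLE. This is a minimal pure-Python sketch, not a joint model. The `INTENT_SLOTS` table, `tag_allowed` and `constrained_tags` are hypothetical names, and it assumes per-token tag probabilities are available (for example from a softmax slot layer or CRF marginals):

```python
# Hypothetical table: which slot types each intent can take.
INTENT_SLOTS = {
    "WEATHER_INFO": {"LOC", "DATE"},
    "MUSIC_PLAY": {"TITLE"},
}


def tag_allowed(tag, intent):
    """'O' is always allowed; 'B-X' / 'I-X' only if slot X belongs to the intent."""
    if tag == "O":
        return True
    slot_type = tag.split("-", 1)[-1]
    return slot_type in INTENT_SLOTS.get(intent, set())


def constrained_tags(intent, tag_names, token_probs):
    """For each token, return the most probable tag allowed for `intent`.

    tag_names:   tag strings aligned with the probability columns (must include "O")
    token_probs: one row of tag probabilities per token
    """
    allowed = [i for i, t in enumerate(tag_names) if tag_allowed(t, intent)]
    return [tag_names[max(allowed, key=lambda i: row[i])] for row in token_probs]


tag_names = ["O", "B-LOC", "B-DATE", "B-TITLE"]
# Made-up probabilities for "tomorrow", "in", "Paris"; the unconstrained
# argmax for "Paris" would be B-TITLE.
probs = [
    [0.10, 0.05, 0.80, 0.05],
    [0.90, 0.04, 0.03, 0.03],
    [0.10, 0.30, 0.00, 0.60],
]
print(constrained_tags("WEATHER_INFO", tag_names, probs))  # ['B-DATE', 'O', 'B-LOC']
```

This only enforces consistency after the fact; the two networks still learn nothing from each other.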

And here is the code for the intent-detection NN:

```python
# CNN architecture
from __future__ import print_function
import keras
from keras.models import Sequential
from keras.layers import Dense, Dropout, Embedding
from keras import layers

batch_size = 128
epochs = 12

# nn_architecture, vocab_size, embedding_matrix, max_length, nbClasses,
# X_train and Y_train_c come from the preprocessing step (not shown)
if nn_architecture == 'CNN':
    model_CNN = Sequential()
    e = Embedding(vocab_size, 300, weights=[embedding_matrix], input_length=max_length, trainable=False)
    model_CNN.add(e)
    model_CNN.add(Dropout(0.2))

    # we add a Convolution1D, which will learn filters
    # word group filters of size filter_length:
    filters = 50
    kernel_size = 3
    hidden_dims = 250
    model_CNN.add(layers.Conv1D(filters,
                                kernel_size,
                                padding='valid',
                                activation='relu',
                                strides=1))
    # we use max pooling:
    model_CNN.add(layers.GlobalMaxPooling1D())

    # We add a vanilla hidden layer:
    model_CNN.add(Dense(hidden_dims))
    model_CNN.add(Dropout(0.2))
    model_CNN.add(layers.Activation('relu'))

    # We project onto an output layer with one unit per intent (nbClasses)
    # and squash each unit with a sigmoid:
    model_CNN.add(Dense(nbClasses))  # no_of_categories
    model_CNN.add(layers.Activation('sigmoid'))

    # With binary_crossentropy, Keras reports 'accuracy' as binary accuracy
    # averaged over all nbClasses outputs, not as the fraction of utterances
    # whose intent is predicted correctly.
    model_CNN.compile(loss='binary_crossentropy',
                      optimizer='adam',
                      metrics=['accuracy'])

    history_CNN = model_CNN.fit(X_train, Y_train_c,
                                batch_size=batch_size,
                                epochs=epochs,
                                # validation_split=0.2
                                )

# Epoch 12/12
# 38771/38771 [==============================] - 11s 276us/step -
# loss: 0.0046 - accuracy: 0.9985
```

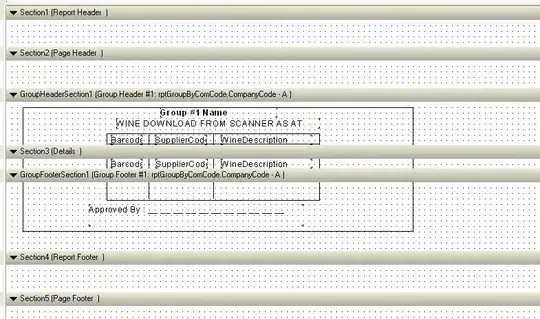

What I would like to do is merge these two architectures in order to obtain this.

Please help me. Thanks in advance!
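For reference, a common way to build such a joint model is to share the embedding and BiLSTM encoder between the two tasks and put two output heads on top: a pooled softmax for the intent and a per-token softmax for the slots, trained on the sum of both losses. Below is a minimal sketch in `tf.keras`, assuming TensorFlow 2.x, integer-encoded inputs, and hypothetical sizes (`VOCAB_SIZE`, `MAX_LEN`, `N_TAGS`, `N_INTENTS`) standing in for `len(token_ids)`, `max_len`, `n_tags` and `nbClasses`. It swaps the `keras_contrib` CRF (written for standalone Keras) for a plain softmax, and replaces the separate CNN intent branch with pooling over the shared BiLSTM output:

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import Model, layers

# Hypothetical sizes: replace with len(token_ids), max_len, n_tags, nbClasses.
VOCAB_SIZE = 1000
MAX_LEN = 20
N_TAGS = 9
N_INTENTS = 5
EMB_DIM = 150


def build_joint_model():
    tokens = layers.Input(shape=(MAX_LEN,), name="tokens")

    # Shared encoder: both tasks are trained through the same layers,
    # so gradients from the intent loss also shape the slot features.
    x = layers.Embedding(VOCAB_SIZE, EMB_DIM)(tokens)
    x = layers.Bidirectional(layers.LSTM(EMB_DIM, return_sequences=True))(x)

    # Intent head: pool the sequence into one vector, one softmax per utterance.
    pooled = layers.GlobalMaxPooling1D()(x)
    intent = layers.Dense(N_INTENTS, activation="softmax", name="intent")(pooled)

    # Slot head: one softmax over the BIO tags per token.
    slots = layers.TimeDistributed(
        layers.Dense(N_TAGS, activation="softmax"), name="slots")(x)

    model = Model(tokens, [intent, slots])
    # The total training loss is the sum of the two per-head losses.
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=5e-4),
                  loss=["sparse_categorical_crossentropy",
                        "sparse_categorical_crossentropy"])
    return model


if __name__ == "__main__":
    # Random integer data, only to check that the shapes line up.
    rng = np.random.default_rng(0)
    x = rng.integers(1, VOCAB_SIZE, size=(8, MAX_LEN))
    y_intent = rng.integers(0, N_INTENTS, size=(8,))
    y_slots = rng.integers(0, N_TAGS, size=(8, MAX_LEN))

    model = build_joint_model()
    model.fit(x, [y_intent, y_slots], batch_size=4, epochs=1, verbose=0)
    intent_probs, slot_probs = model.predict(x, verbose=0)
    print(intent_probs.shape, slot_probs.shape)  # (8, 5) (8, 20, 9)
```

At inference, `intent_probs.argmax(-1)` gives the intent index and `slot_probs.argmax(-1)` the tag index for each token. Sharing the encoder makes pairs such as WEATHER_INFO with TITLE less likely, but it does not strictly rule them out.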