Two questions

- Visualizing the error of a model

- Calculating the log loss

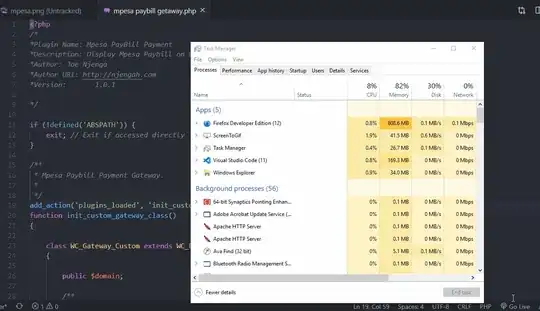

(1) I'm trying to tune a multinomial GBM classifier with caret, but I'm not sure how to interpret the output. I understand that log loss is meant to be minimized, but in the plot below it only appears to increase, for any range of boosting iterations (trees).

    library(caret)

    # 80/20 split, stratified on the class label
    inTraining <- createDataPartition(final_data$label, p = 0.80, list = FALSE)
    training <- final_data[inTraining, ]
    testing <- final_data[-inTraining, ]

    # 10-fold CV repeated 3 times, scored with multinomial log loss
    fitControl <- trainControl(method = "repeatedcv", number = 10, repeats = 3,
                               verboseIter = FALSE, savePredictions = TRUE,
                               classProbs = TRUE, summaryFunction = mnLogLoss)

    # Tuning grid: tree depth 2..10, 25..250 trees, fixed shrinkage and node size
    gbmGrid1 <- expand.grid(.interaction.depth = (1:5) * 2, .n.trees = (1:10) * 25,
                            .shrinkage = 0.1, .n.minobsinnode = 10)

    gbmFit1 <- train(label ~ ., data = training, method = "gbm",
                     trControl = fitControl, verbose = 1,
                     metric = "ROC", tuneGrid = gbmGrid1)

    plot(gbmFit1)

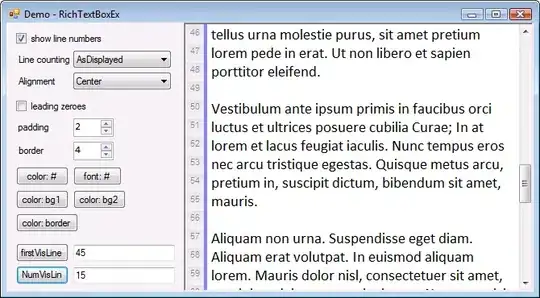
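One thing that may matter here: `mnLogLoss` returns a single value named `logLoss`, so `metric = "ROC"` does not match anything the summary function produces. Below is a minimal sketch of a call that names the metric explicitly and asks caret to minimize it. It is not the original model: `iris` stands in for `final_data`, `Species` for `label`, and the grid and resampling are shrunk so it runs quickly. All of those are assumptions for illustration.

```r
library(caret)  # the gbm package must also be installed

set.seed(1)

# Assumption: iris stands in for final_data, Species for label
ctrl <- trainControl(method = "cv", number = 3,
                     classProbs = TRUE, summaryFunction = mnLogLoss)

# Deliberately tiny grid so the sketch runs quickly
grid <- expand.grid(interaction.depth = c(1, 2), n.trees = c(25, 50),
                    shrinkage = 0.1, n.minobsinnode = 10)

fit <- train(Species ~ ., data = iris, method = "gbm",
             trControl = ctrl, tuneGrid = grid, verbose = FALSE,
             metric = "logLoss",   # the name mnLogLoss actually returns
             maximize = FALSE)     # lower log loss is better

fit$metric    # metric used for model selection
fit$maximize  # whether caret treats larger values as better
fit$bestTune  # parameter combination with the lowest resampled log loss
```

If `fit$metric` reports `logLoss` and `fit$maximize` is `FALSE`, the tuning is selecting on the intended criterion. That by itself does not explain a curve that rises with the number of trees.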

(2) On a related note, when I try to call mnLogLoss directly I get this error, which keeps me from quantifying the error:

    mnLogLoss(testing, levels(testing$label)) : 'lev' cannot be NULL
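For reference, as far as I can tell `mnLogLoss` expects a data frame with a factor column named `obs` plus one probability column per class, and a non-NULL `lev` vector naming those columns. `levels()` returns `NULL` when its argument is not a factor, which may be what is happening with `testing$label`. The sketch below uses made-up probabilities (the names `eval_df`, `ll_caret` and `ll_manual` are hypothetical) and cross-checks the result against the definition, the negative mean log-probability of the observed class.

```r
library(caret)

# Made-up class probabilities for 3 observations and 3 classes
eval_df <- data.frame(
  obs = factor(c("a", "b", "c"), levels = c("a", "b", "c")),
  a = c(0.7, 0.1, 0.2),
  b = c(0.2, 0.8, 0.3),
  c = c(0.1, 0.1, 0.5)
)

# lev must be a non-NULL character vector matching the probability columns
ll_caret <- mnLogLoss(eval_df, lev = levels(eval_df$obs))

# Cross-check by hand: pick each row's probability for its observed class
prob_mat <- as.matrix(eval_df[, levels(eval_df$obs)])
p_true <- prob_mat[cbind(seq_len(nrow(eval_df)), as.integer(eval_df$obs))]
ll_manual <- -mean(log(p_true))

ll_caret
ll_manual

# With the fitted model, the same shape might be built like this (not run here):
# probs   <- predict(gbmFit1, newdata = testing, type = "prob")
# eval_df <- data.frame(obs = factor(testing$label, levels = colnames(probs)), probs)
# mnLogLoss(eval_df, lev = levels(eval_df$obs))
```

The commented lines assume `gbmFit1` was trained with `classProbs = TRUE`, so that `predict(..., type = "prob")` returns one column per class.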