I am using the following function to send some logging standard output from Databricks to Azure application insights logs.

my function

import logging

from opencensus.ext.azure.log_exporter import AzureLogHandler

from opencensus.trace import config_integration

from opencensus.trace.samplers import AlwaysOnSampler

from opencensus.trace.tracer import Tracer

def custom_logging_function(log_type, instrumentation_key_value, input_x):

"""

Purpose: The standard output sent to Application insights logs

Inputs: -

Return: -

"""

config_integration.trace_integrations(['logging'])

logging.basicConfig(format='%(asctime)s traceId=%(traceId)s spanId=%(spanId)s %(message)s')

tracer=Tracer(sampler=AlwaysOnSampler())

logger=logging.getLogger(__name__)

logger.addHandler(AzureLogHandler(connection_string='InstrumentationKey={0}'.format(instrumentation_key_value)))

if log_type=="INFO" or log_type=="SUCESSFULL":

#[UPDATE]

logger.setLevel(logging.INFO)

logger.info(input_x)

#logging.info(input_x)

elif log_type=="ERROR":

#[UPDATE]

logger.setLevel(logging.ERROR)

logger.exception(input_x)

#logging.exception(input_x)

else:

logger.warning(input_x)

[UPDATE] By setting the logging level to INFO, ERROR you can log different types of traces.

This function even though it is correctly executed it's faulty for the following two reasons:

Reason 1

When I want to print a logger.info() message it's not logged successfully in Application insights. For an non-explainable reason only the logger.warning() messages are successfully sent to Application insights logs.

For example,

custom_logging_function("INFO", instrumentation_key_value, "INFO: {0} chronical dates in the specified time-frame have been created!".format(len(date_list)))

# Uses the logger.info() based on my function!

This is never logged. But rather the following only it's logged,

custom_logging_function("WARNING", instrumentation_key_value, "INFO: {0} chronical dates in the specified time-frame have been created!".format(len(date_list)))

# Uses the logger.warning() based on my function!

The reason 1 has been solved by me..please check my function edit

------------------------------------------------------------------------

Reason 2

The same message is logged multiple times, instead only once.

Some code to interpret the same problem,

# Set keyword parameters

time_scale=12

time_frame_repetition=1

timestamp_snapshot=datetime.utcnow()

round_up = math.ceil(time_frame_repetition*365/time_scale)

day_list = [(timestamp_snapshot - timedelta(days=x)).strftime("%d") for x in range(round_up)]

month_list = [(timestamp_snapshot - timedelta(days=x)).strftime("%m") for x in range(round_up)]

year_list = [(timestamp_snapshot - timedelta(days=x)).strftime("%Y") for x in range(round_up)]

date_list=[[day_list[i], month_list[i], year_list[i]] for i in range(0, len(day_list))]

custom_logging_function("INFO", instrumentation_key_value, "INFO: {0} chronical dates in the specified time-frame have been created!".format(len(date_list))) #the function already written in the start of my post.

The output of the above code snippet is logged more than 1 time(s) in Application insights and I am trying to figure out why.

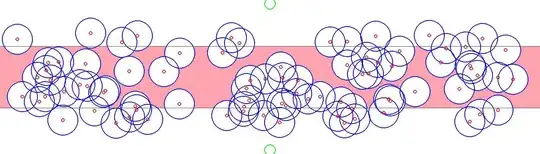

Output log in Application insights

As you can see from the output of the query the same row is logged multiple times.

What are your suggestions on the second matter since the first one was solved.

[UPDATE] based on the answer provided below by @Izchen

def instantiate_logger(instrumentation_key_value):

config_integration.trace_integrations(['logging'])

logging.basicConfig(format='%(asctime)s traceId=%(traceId)s spanId=%(spanId)s %(message)s')

tracer=Tracer(sampler=AlwaysOnSampler())

logger=logging.getLogger(__name__)

logger.addHandler(AzureLogHandler(connection_string='InstrumentationKey={0}'.format(instrumentation_key_value)))

return logger

logging_instance=instantiate_logger(instrumentation_key_value)

def custom_logging_function(logging_instance, disable_logging, log_type, input_x, *arguments):

"""

Purpose: The standard output sent to Application insights logs

Inputs: -

Return: The logger object.

"""

if disable_logging==0:

if log_type=="INFO" or log_type=="SUCCESSFUL":

logging_instance.setLevel(logging.INFO)

logging_instance.info(input_x)

print(input_x, *arguments)

elif log_type=="ERROR":

logging_instance.setLevel(logging.ERROR)

logging_instance.exception(input_x)

print(input_x, *arguments)

else:

logging_instance.warning(input_x)

print(input_x, *arguments)

else:

print(input_x, *arguments)

Still the code above logs the output of this function:

date_list=merge_hierarchy_list(year_list, month_list, day_list, None, None)

custom_logging_function(logging_instance, disable_logging_value, "INFO", "INFO: {0} chronological dates in the specified time-frame have been created!".format(len(date_list)))

Output (logged 2 times in Application Insights Log traces):

"INFO: 31 chronological dates in the specified time-frame have been created!"